It’s Monday morning in the boardroom. Six months ago, your organization launched an AI pilot with high expectations: faster decision-making, streamlined operations, and measurable customer impact. Today, the dashboards are full of metrics (accuracy, model performance, and experimental insights), but the question every executive is silently asking remains:

“What tangible business outcomes have we achieved?”

In the rush to adopt AI and gain a competitive edge, many businesses move quickly, sometimes too quickly. Though it doesn’t affect every organization, a significant number overlook foundational elements such as operational readiness, infrastructure requirements, team adoption, and clearly defined business objectives. The consequences are clear: customer complaints persist, operational bottlenecks remain, and budgets continue to rise. What initially felt like innovation can quickly turn into frustration, revealing the gap between ambition and execution.

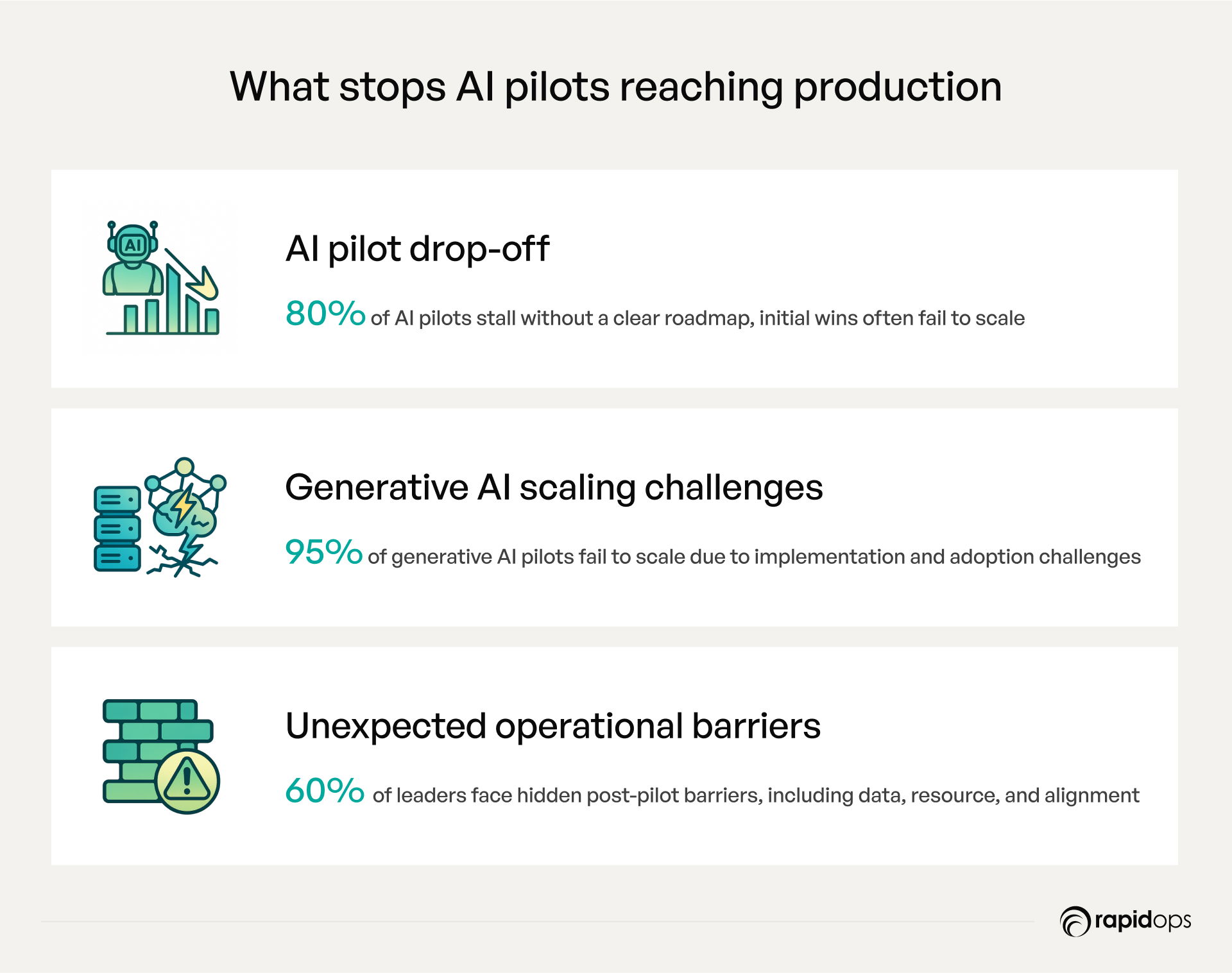

You are not alone. 88% of AI pilots fail to reach production, and it’s rarely just a technology issue. Failures are systemic, arising from gaps in strategy, uncurated data, governance, or overlooked operational and human factors. Without attention to both speed and foundational readiness, AI can become a costly experiment rather than a source of competitive advantage.

This blog explores 10 common AI mistakes and shows how leaders can turn pilots into real, measurable business value, navigating the adoption race with focus and precision.

1. Identifying the right use case

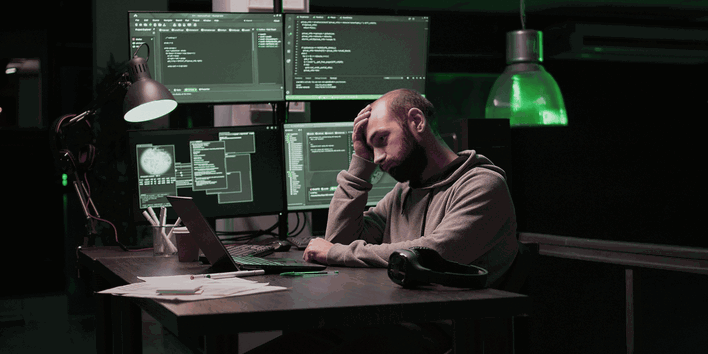

A frequent mistake in AI adoption is rushing into initiatives without clearly identifying where AI can deliver the most meaningful business impact. Organizations often expand AI projects across multiple departments prematurely, prioritizing speed over strategic alignment. This approach can lead to fragmented pilots, misaligned expectations, and resources being consumed without producing measurable results.

“The biggest mistake companies make is not focusing on the right problems to solve with AI. Technology should enable business outcomes, not drive the agenda.” Andrew Ng, AI Pioneer

The problem is compounded when initiatives focus on technical novelty rather than actual business needs. Pilots may succeed in isolated tests or demos yet fail to translate into real operational improvements.

For instance, deploying AI tools across departments without understanding which processes are high-value can result in inconsistent results, unclear accountability, and stalled adoption.

Another contributing factor is the complexity of enterprise operations. Without careful evaluation of where AI can have a tangible impact, teams can select use cases that are either too narrow to demonstrate value or too complex to execute effectively. This mismatch often frustrates leadership, erodes confidence in AI investments, and slows enterprise-scale adoption.

Ultimately, the lack of proper use-case identification illustrates a broader challenge: even sophisticated AI technologies cannot deliver value if they are applied without clarity on where they matter most. Selecting initiatives without this insight is a strategic misstep that can turn high-potential projects into stalled experiments, leaving organizations with wasted effort and unrealized expectations.

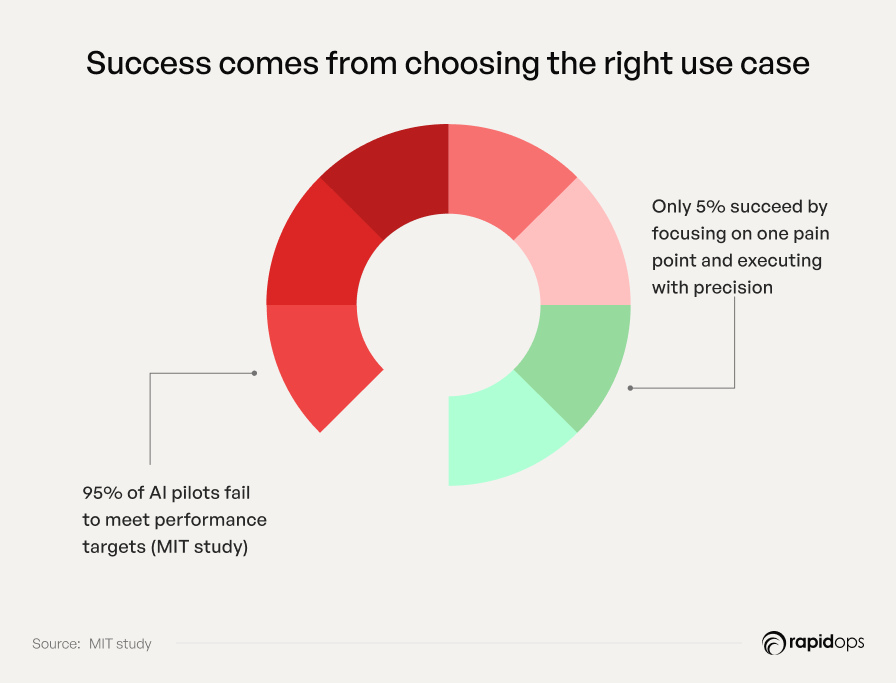

2. Problem–solution mismatch

A common pitfall in enterprise initiatives is launching pilots or projects without clearly defined business objectives. Research indicates that over 70% of AI and automation pilots fail to produce measurable business impact, often because success is tracked through technical metrics rather than outcomes that matter to the organization. Teams may highlight accuracy, system performance, or innovative features, yet leadership quickly notices the gap and asks, “What changed in the business?”

This mismatch often arises when goals emphasize novelty or technical achievement over tangible results. While a system may perform exceptionally under controlled conditions, its impact on revenue, efficiency, or customer satisfaction can remain minimal.

“AI success isn’t about perfection in models; it’s about solving meaningful problems that impact customers and operations.” Fei-Fei Li, Stanford AI Expert

The consequences are significant: investments consume resources without delivering meaningful lift, critical decisions are delayed, and executive confidence in future initiatives erodes.

The problem–solution mismatch illustrates a deeper lesson: technical success alone is insufficient if it does not translate into real-world business value. What truly determines success is whether the solution moves the needle on outcomes that matter, such as revenue growth, operational efficiency, or customer experience.

3. Project, not product/program

A recurring pitfall in AI implementation is treating initiatives as isolated projects rather than ongoing products or programs. Studies show that over 60% of AI pilots fail to scale because they lack a structured roadmap, clear ownership, and defined service-level expectations. Teams may deliver initial prototypes successfully, yet without continuity, handoffs break down, release cadence slows, and accountability becomes diffuse.

This issue stems from a project-centric mindset that prioritizes short-term delivery over sustainable operational stewardship. In practice, this often leads to repeated rework, fragmented implementations, and limited adoption. For example, a global retailer deploying an AI-driven demand forecasting pilot experienced delays across regions because responsibility was not clearly assigned, forcing teams to redo integrations multiple times.

The impact extends beyond individual projects. Diffused accountability can create friction between business units, IT, and data science teams, slowing innovation and reducing overall confidence in AI initiatives. Fragmented implementations also make scaling across the enterprise challenging, limiting the potential ROI and delaying measurable business outcomes.

Project-centric thinking illustrates a critical lesson in AI adoption: without treating initiatives as products or programs, even technically successful pilots can fail to deliver enterprise-wide impact. The real challenge lies in shifting from isolated wins to sustainable, scalable outcomes that embed AI into the business's fabric.

4. Business infrastructure gaps

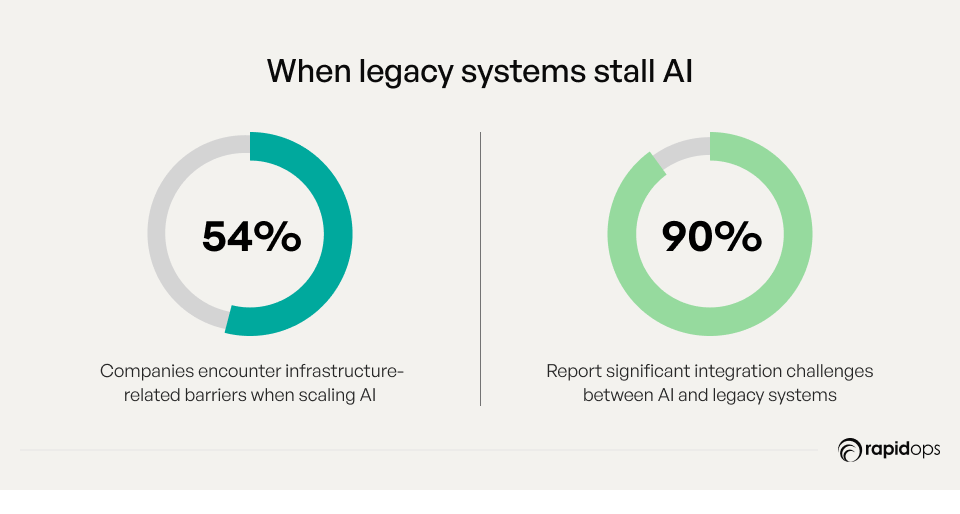

One of the most overlooked mistakes in AI adoption is attempting to scale without the right business infrastructure. Enterprises often push pilots forward on top of outdated systems, siloed data platforms, or disconnected workflows, conditions that guarantee delays and stalled impact before AI can prove its value.

The root of the issue lies in fragmented systems, a lack of integration readiness, and weak operational support. Even when data scientists and AI tools are in place, the absence of unified pipelines, interoperable platforms, and well-defined ownership prevents initiatives from moving beyond controlled environments.

In practice, this leads to costly delays, duplicated efforts, and stalled deployments that erode business confidence. For instance, a manufacturing firm piloting an AI-powered predictive maintenance system struggled to scale results because sensor data from multiple production lines was inconsistent and no single team owned deployment or monitoring.

The consequences are significant: pilots remain stuck in experimental phases, enterprise-wide rollouts slow down, and expected ROI diminishes. Over time, these gaps not only undermine trust in AI initiatives but also increase operational risk. The lesson is clear: without strong infrastructure foundations and integrated business systems, even the most promising AI solutions cannot scale into enterprise-wide capabilities.

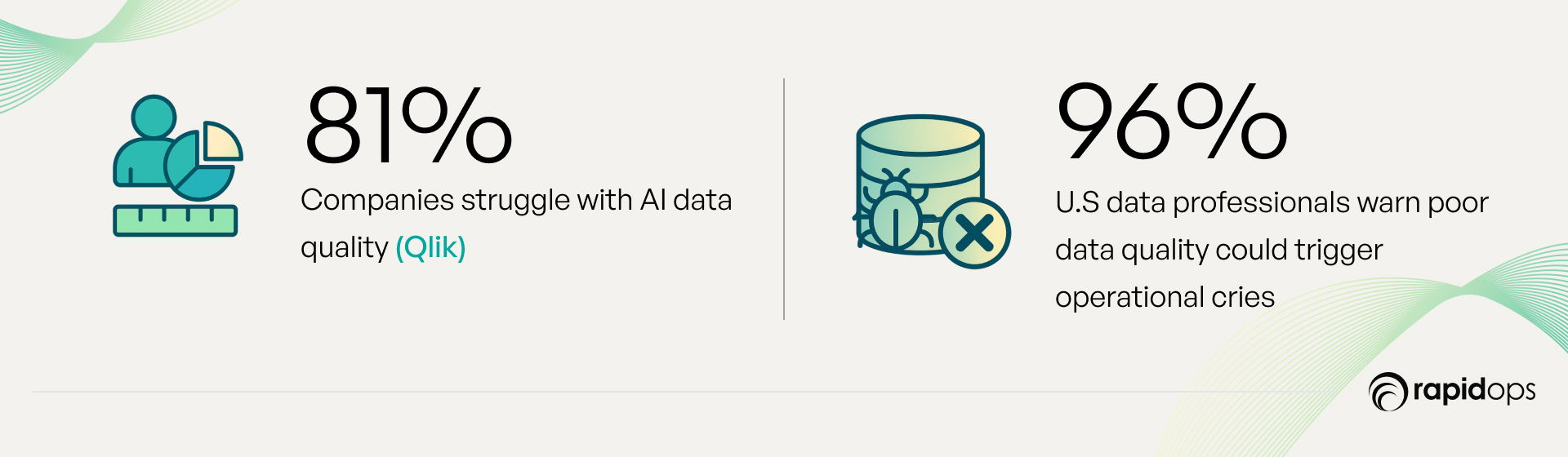

5. Data preparedness overlooked

Data is the foundation of AI; everything AI does relies on accurate, accessible, and well-governed data. Without strong data readiness, even the most advanced models can generate inconsistent, misleading, or unreliable outputs. Yet many organizations underestimate the importance of governance, lineage, and source quality when scaling AI initiatives.

“Garbage in, garbage out. Without clean, quality data, AI initiatives are doomed from the start.” Jeff Dean, Head of AI at Google

The root causes often include missing governance structures, unclear ownership, and fragmented or outdated data sources. In practice, this leads to contradictory outputs, delayed insights, and instances of AI “hallucination,” where systems produce inaccurate predictions.

A large retail chain, for example, deploying an AI-powered demand forecasting system, encountered inventory mismatches because sales and supply data were inconsistent across regions. This created stockouts in some markets, overstocking in others, and ultimately both revenue loss and customer dissatisfaction.

The consequences extend beyond technical errors. Poor data preparedness slows operations, disrupts decision-making, and weakens confidence in AI-driven initiatives. The lesson is clear: no matter how sophisticated the technology, AI can only perform as well as the data it is built upon. Organizations that fail to prioritize readiness risk turning AI into a source of inefficiency rather than a driver of enterprise value.
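As a rough illustration of what a basic readiness check might look like, the sketch below counts a few common data-quality problems in sales records before they reach a forecasting model. The field names, the sample rows, and the `readiness_report` helper are all hypothetical and would need to be adapted to a real schema.

```python
from collections import Counter

# Hypothetical required fields for a sales record; adjust to the real schema.
REQUIRED_FIELDS = ("region", "sku", "units_sold", "date")


def readiness_report(records):
    """Count basic data-quality problems in a list of dict records."""
    issues = Counter()
    seen_keys = set()
    for record in records:
        # Flag any required field that is absent or empty.
        for field in REQUIRED_FIELDS:
            if record.get(field) in (None, ""):
                issues[f"missing_{field}"] += 1
        # Flag negative quantities, which usually signal an upstream error.
        units = record.get("units_sold")
        if isinstance(units, (int, float)) and units < 0:
            issues["negative_units_sold"] += 1
        # Flag repeated (region, sku, date) rows that would double-count sales.
        key = (record.get("region"), record.get("sku"), record.get("date"))
        if key in seen_keys:
            issues["duplicate_row"] += 1
        seen_keys.add(key)
    return dict(issues)


# Made-up sample rows, for illustration only.
sample = [
    {"region": "north", "sku": "A1", "units_sold": 10, "date": "2024-01-01"},
    {"region": "north", "sku": "A1", "units_sold": 10, "date": "2024-01-01"},
    {"region": "south", "sku": "A1", "units_sold": -3, "date": "2024-01-01"},
    {"region": "", "sku": "B2", "units_sold": 5, "date": "2024-01-01"},
]
report = readiness_report(sample)
print(report)
```

A check like this is only a starting point. Governance and lineage questions, such as who owns each source and how it is refreshed, still need organizational answers.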

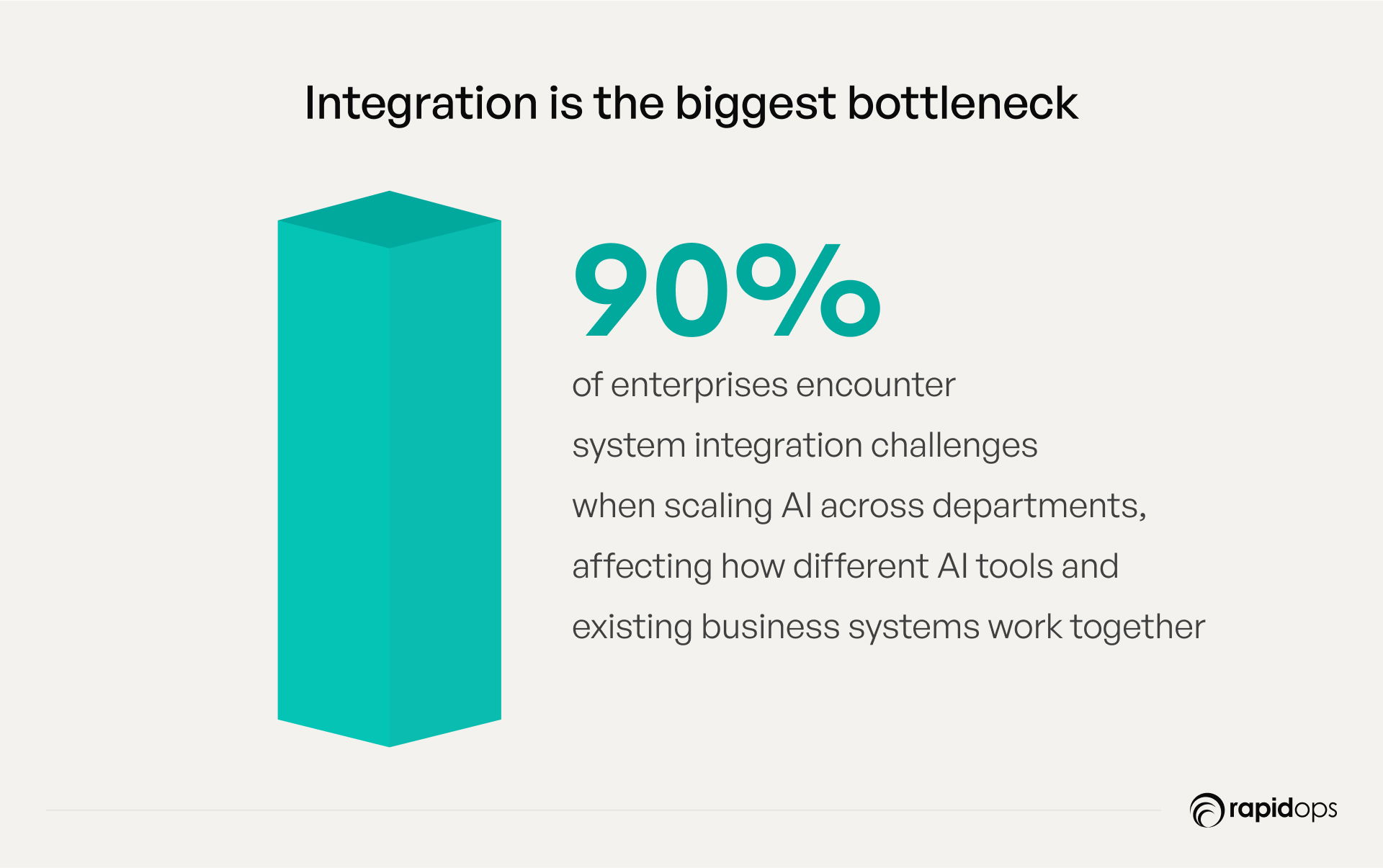

6. System integration is inadequately addressed

AI initiatives often struggle when solutions are only partially integrated into core workflows, limiting their ability to deliver full business value. Studies indicate that more than half of AI pilots fail to achieve intended efficiency gains because incomplete integration creates fragmented processes and manual workarounds.

The root cause typically lies in deferred process redesign and unclear system interfaces. Without clear alignment across systems, shadow handoffs and workflow bottlenecks emerge, forcing teams to intervene manually.

For example, a distribution company implementing an AI-driven route optimization system experienced repeated delays because the tool was not fully connected to inventory and dispatch operations, resulting in inefficiencies and missed opportunities.

The consequences are tangible: partial value capture, reduced operational efficiency, and diminished overall effectiveness of AI investments. This gap highlights a critical insight: even the most advanced AI technologies cannot deliver measurable business impact if they are not seamlessly embedded into end-to-end processes.

Without addressing this integration gap, organizations risk scaling technology that never translates into enterprise-wide outcomes.

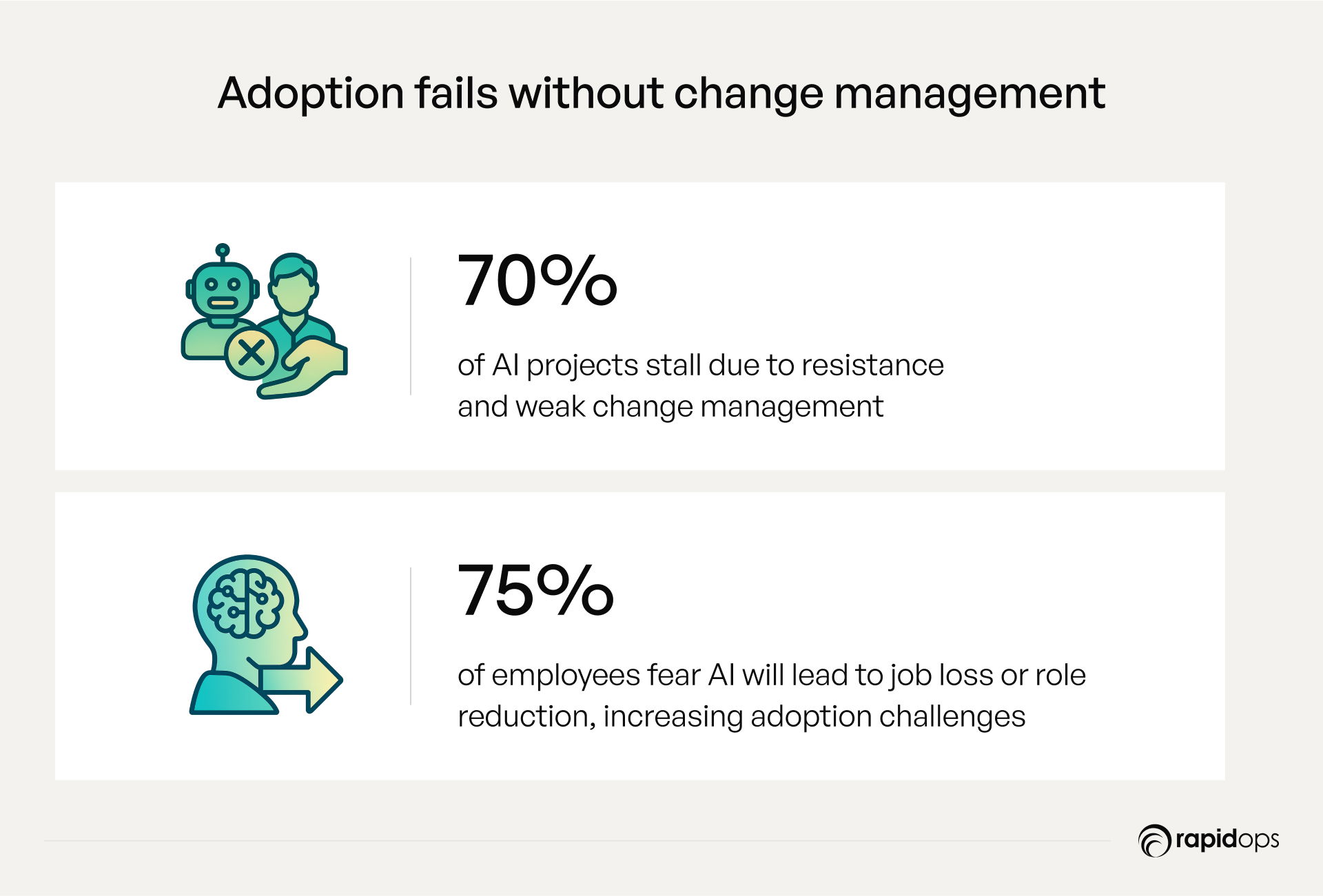

7. Change management and adoption gaps

Even the most advanced AI technologies fail to deliver value when employees struggle to adopt them effectively. Resistance to change, fear of role displacement, and weak internal frameworks can leave teams disengaged, undermining the potential of AI initiatives. Research indicates that a large majority of AI projects stall due to these adoption challenges, often driven by inconsistent execution, manual overrides, and low user engagement.

This challenge stems from insufficient training, unclear responsibilities, weak communication, and limited incentives. Without clarity on roles and confidence in AI systems, employees may bypass automation or revert to manual processes.

For instance, a distribution company implementing an AI-driven demand planning tool experienced repeated manual overrides because employees were unsure how to interpret and act on AI-generated recommendations.

“Transformation without people is just automation.” Julie Sweet, CEO, Accenture

The outcomes are clear: slowed adoption, diminished ROI, and stalled digital transformation efforts. These gaps underscore a critical insight: technical capability alone is insufficient. Human readiness, structured enablement, and clear adoption pathways are essential to unlock the full value of AI investments.

When adoption falters, the technology’s potential remains untapped, leaving enterprises with activity but no meaningful transformation.

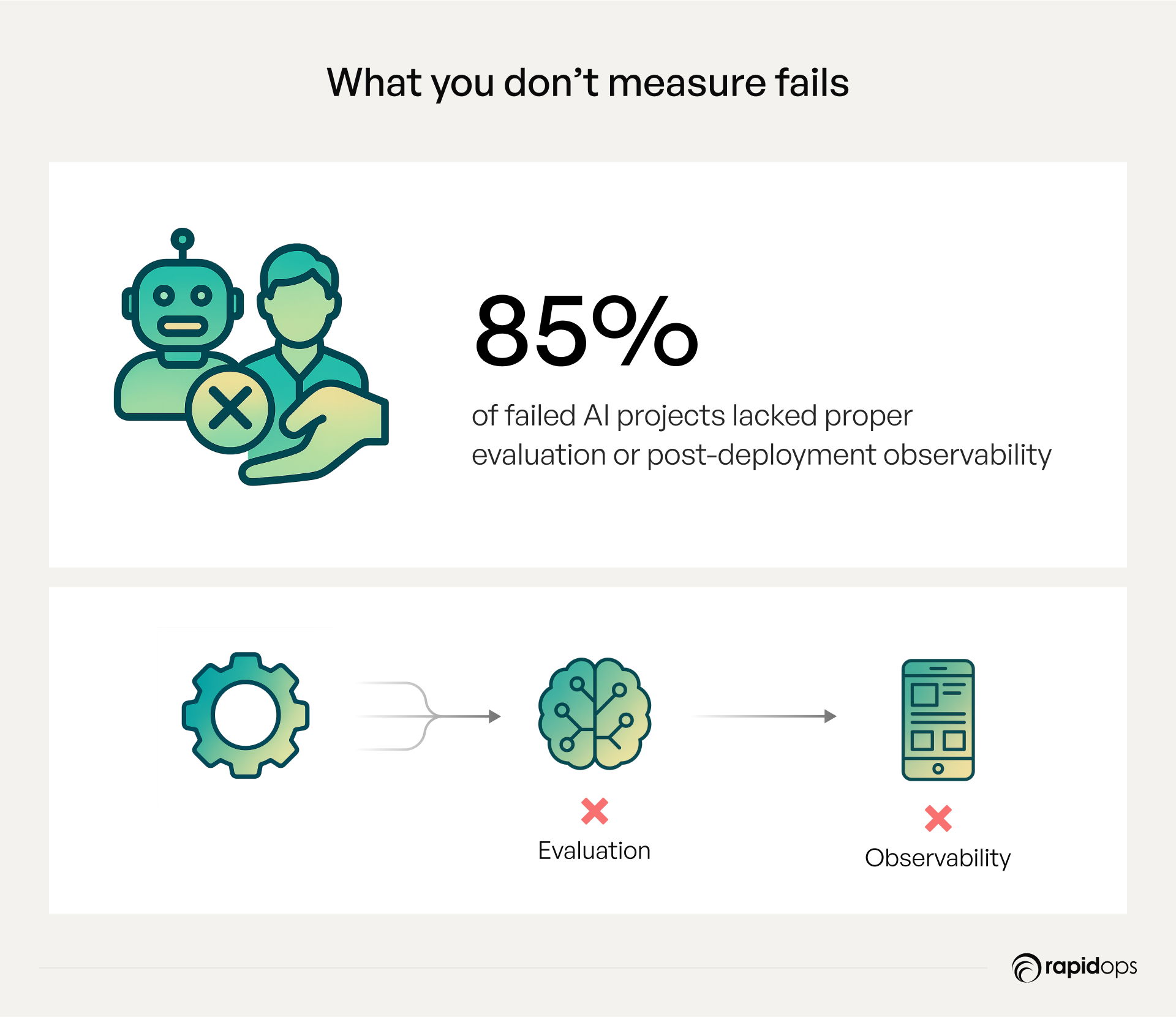

8. Lack of evaluation and observability

AI initiatives often stumble when organizations fail to effectively monitor or evaluate models in real-world conditions. A system that performs well in testing or demo environments may behave unpredictably in production, leaving teams unable to reproduce results or explain model decisions. According to industry research, nearly half of AI projects struggle post-deployment due to inadequate monitoring and evaluation, underscoring the scale of this challenge.

The root causes are typically missing traces, inconsistent test sets, and the absence of repeatable workflows. Without strong observability, incident resolution slows, and models essentially function as “black boxes” inside critical business processes.

For example, a large retail company deploying an AI-powered demand forecasting tool found that predictions accurate in testing environments often broke down during high-volume sales periods. The result: inventory shortages, manual overrides, and mounting operational friction.

The business impact is significant: delayed responses to issues, declining trust in AI outputs, and reduced enterprise adoption. This reveals a core lesson: even the most advanced models cannot deliver consistent value without transparent monitoring, reproducibility, and continuous evaluation. Leaders who overlook this capability often find their AI programs stalling before they ever reach enterprise scale.
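One simple form of continuous evaluation is to compare forecast error in production against the error measured during testing and raise a flag when the gap grows too large. The sketch below assumes mean absolute percentage error (MAPE) as the metric and a 50% relative tolerance. The function names, the threshold, and the numbers are illustrative choices, not a recommended standard.

```python
def mean_absolute_percentage_error(actuals, forecasts):
    """MAPE over paired values, skipping periods where the actual is zero."""
    pairs = [(a, f) for a, f in zip(actuals, forecasts) if a != 0]
    if not pairs:
        raise ValueError("no non-zero actuals to evaluate")
    return sum(abs(a - f) / abs(a) for a, f in pairs) / len(pairs)


def is_degraded(baseline_mape, production_mape, tolerance=0.5):
    """True when production error exceeds the baseline by more than `tolerance` (relative)."""
    return production_mape > baseline_mape * (1 + tolerance)


# Made-up numbers: a calm test period versus a high-volume sales period.
test_actuals, test_forecasts = [100, 120, 80], [95, 126, 84]
peak_actuals, peak_forecasts = [300, 450, 500], [210, 300, 330]

baseline = mean_absolute_percentage_error(test_actuals, test_forecasts)
production = mean_absolute_percentage_error(peak_actuals, peak_forecasts)
alert = is_degraded(baseline, production)
print(f"baseline={baseline:.3f} production={production:.3f} alert={alert}")
```

In practice the same comparison would run on a schedule against logged predictions, so that degradation during peak periods is caught before it turns into inventory shortages.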

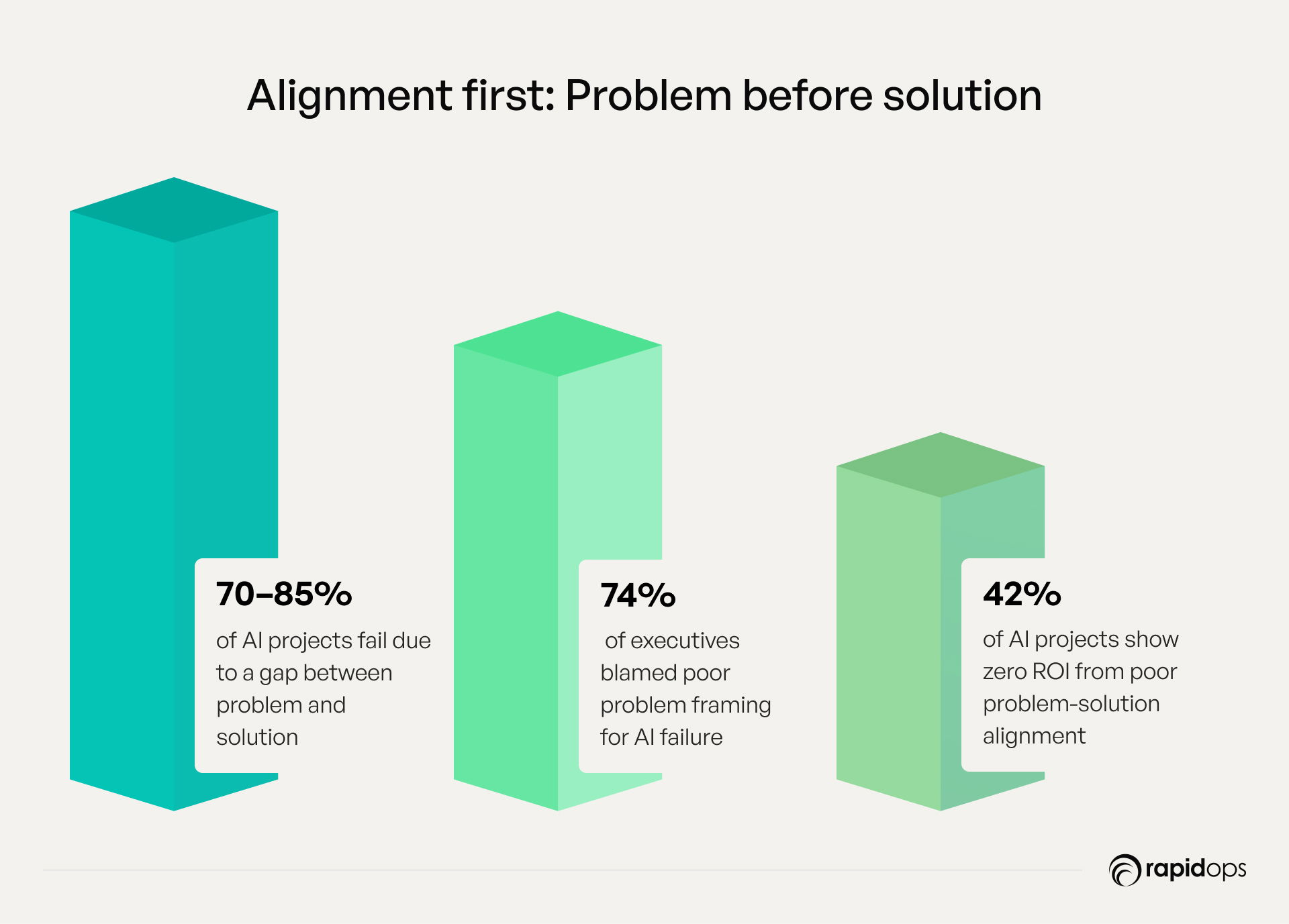

9. Pilot-to-production disconnect

AI pilots often deliver impressive results in controlled environments, but many struggle to scale across the enterprise. The challenge is not the technology itself, but how pilots are planned, executed, and operationalized. Understanding the common pitfalls can help organizations turn promising experiments into enterprise-ready solutions.

A primary mistake is testing pilots in isolation. Systems that perform well in labs may face integration bottlenecks, workflow misalignments, or service-level disruptions when deployed broadly. Another frequent error is neglecting operational planning and scalability, where pilots succeed in a limited scope but fail when infrastructure, compliance, or processes expand. Organizations also often underestimate hidden costs and resource requirements, resulting in delays, inefficiencies, and skepticism among stakeholders.

For instance, a manufacturing company piloting AI-driven predictive maintenance saw excellent results in lab simulations. Yet, deploying the solution across multiple plants exposed unexpected equipment downtime and disrupted workflows, underscoring the gap between pilot success and production readiness.

The lesson is clear: isolated success does not guarantee enterprise impact. Executives must ensure pilots are tested under realistic production conditions, supported by robust operational controls, and seamlessly integrated with workflows. By addressing these mistakes early, organizations can transform AI pilots into scalable, high-impact solutions that deliver measurable, sustainable business value.

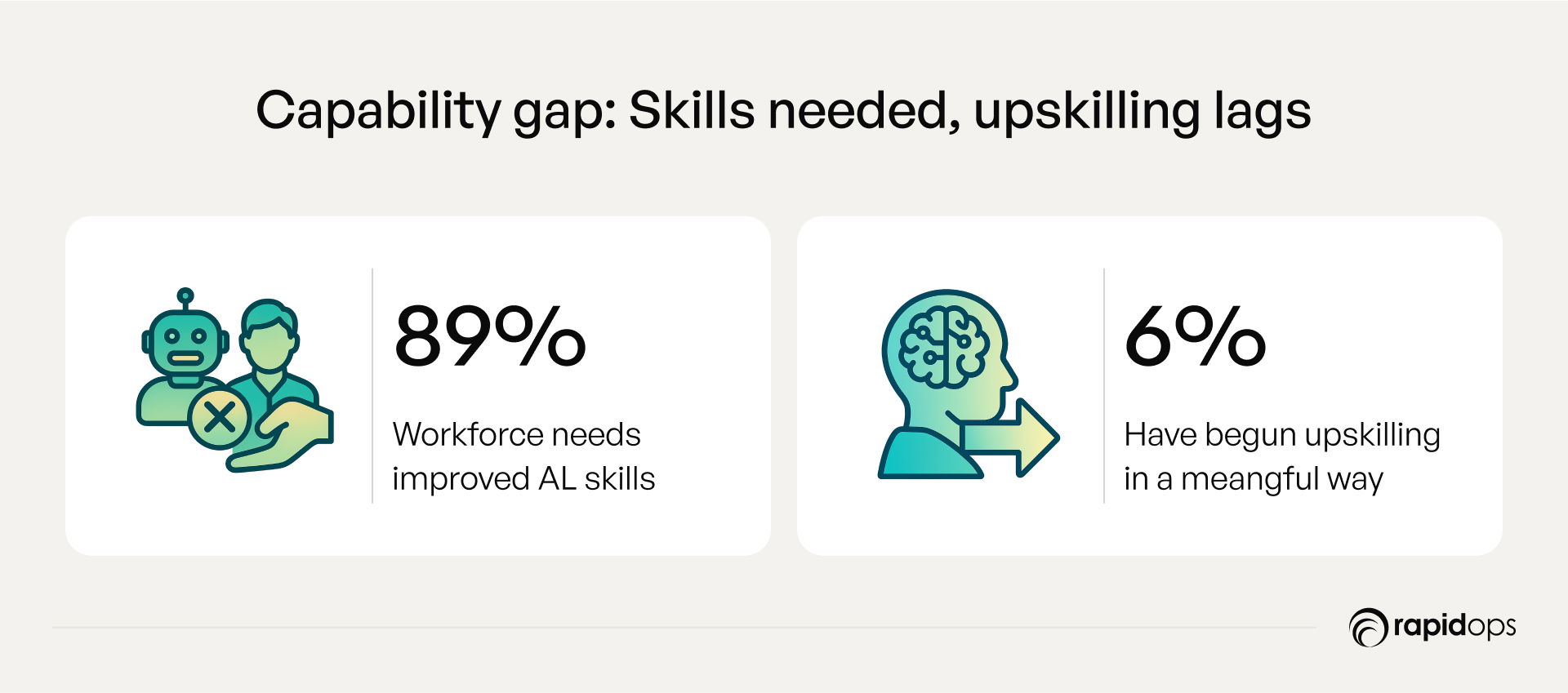

10. Internal team or partner capability limitations

Even highly promising AI initiatives can falter when organizations lack the necessary internal expertise or rely on partners without sufficient experience. Skills gaps and limited partner capabilities frequently cause AI projects to underperform, highlighting a systemic challenge in scaling enterprise AI effectively.

These issues often stem from incomplete in-house capabilities, limited training programs, and dependency on partners unfamiliar with the demands of enterprise-scale deployment. For example, a retail organization implementing an AI-powered inventory optimization system experienced repeated delays and inconsistent forecasts because internal teams struggled with model deployment, while the external partner lacked the experience to integrate the solution with legacy supply chain systems.

The consequences are substantial: slower adoption, suboptimal solutions, and a higher risk of project failure. Awareness of skills gaps alone is insufficient; organizations must strategically develop internal capabilities and carefully select partners with proven enterprise AI expertise.

“AI leaders are raising the bar with more ambitious goals.” Nicolas de Bellefonds, BCG senior partner

This underscores a critical insight: successful AI adoption requires aligning internal talent and external support with the complexity of the initiative. Organizations that invest in building skilled, cross-functional teams and collaborate with experienced AI partners are far more likely to achieve scalable, sustainable, and measurable AI impact.

Transforming AI challenges into a strategic advantage

AI failure rarely happens overnight; it often builds quietly across strategy, data, governance, operational readiness, talent, and technology. Understanding these patterns isn’t about assigning blame; it’s about seeing the full picture, making informed decisions, and building resilience as AI initiatives scale from pilots to enterprise impact.

At Rapidops, we’ve partnered with organizations across retail, manufacturing, and distribution, observing how even promising AI initiatives can stall when teams, operations, and strategy aren’t fully aligned. Leaders often feel the frustration of stalled pilots, fragmented data, and missed opportunities. These experiences reinforce a simple truth: turning AI experiments into measurable business impact requires foresight, clarity, and thoughtful execution, lessons any organization can apply to achieve meaningful results.

Step back and reflect on your AI initiatives. Where might gaps in strategy, data, team adoption, or operations limit impact? Which targeted actions could transform insights into measurable value?

Consider a complimentary consultation to review your initiatives, uncover hidden risks, and explore how to scale AI effectively, turning lessons from past challenges into lasting enterprise advantage.

Frequently Asked Questions

How do executive expectations influence the success or failure of AI initiatives?

Executive expectations shape priorities, funding, and evaluation metrics. Misaligned expectations often cause AI initiatives to fail when pilots are judged solely on technical novelty rather than measurable business outcomes. Setting clear, outcome-focused expectations ensures teams align strategy with enterprise goals. Leadership visibility, defined KPIs, and regular progress reviews help prevent stalled initiatives and build confidence in AI adoption across the organization.

Why is long-term AI strategy more important than short-term project wins?

Focusing on short-term AI wins can create temporary successes that don’t scale. AI failure often occurs when projects are isolated experiments lacking a roadmap for enterprise adoption. A long-term strategy integrates data, infrastructure, and business processes, ensuring AI initiatives deliver sustainable value. Planning for scalability, cross-functional alignment, and operational readiness prevents fragmented deployments and increases ROI.

How can organizations measure real business impact from AI beyond technical metrics?

AI failure is frequently driven by reliance on accuracy or system performance metrics alone. True business impact requires linking AI outcomes to operational KPIs such as revenue growth, cost reduction, or customer satisfaction. Monitoring these metrics in real-world contexts, rather than controlled environments, highlights whether AI initiatives achieve strategic objectives and provides executives with actionable insights to guide enterprise-scale adoption.

What hidden operational challenges commonly derail enterprise AI adoption?

Operational gaps, such as fragmented workflows, unclear roles, inadequate training, or weak process integration, often cause AI initiatives to fail. Even technically successful models can underperform if operational realities are ignored. Recognizing these challenges early allows organizations to align AI solutions with business processes, define accountability, and maintain continuity. Addressing operational readiness ensures AI delivers measurable value rather than remaining a proof-of-concept.

How does misalignment between IT, business, and data teams affect AI outcomes?

AI failure frequently arises from misalignment between IT, business units, and data teams. Disconnected priorities, unclear ownership, and siloed processes hinder deployment and adoption. Collaboration across functions ensures data availability, infrastructure support, and operational integration. Aligning technical capabilities with business goals reduces delays, improves model relevance, and maximizes enterprise-wide impact, transforming AI from experimental pilots into scalable solutions.

Why are reproducibility and traceability of AI models critical for scaling initiatives?

Lack of reproducibility and traceability often leads to AI failure when models perform inconsistently across environments. Being able to reproduce results and track model decisions ensures reliability, accountability, and adherence to compliance standards. Organizations can debug issues faster, maintain stakeholder trust, and scale solutions confidently. Standardized processes for model logging, versioning, and testing create a foundation for consistent, enterprise-ready AI deployments.
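To make the idea of traceability a little more concrete, here is a minimal sketch of a run record that fingerprints the parameters and training data behind a model, so that two runs can later be compared or reproduced. The `build_run_record` helper, the field names, and the version string are hypothetical. Real deployments would typically rely on a dedicated experiment-tracking tool instead.

```python
import hashlib
import json


def fingerprint(obj):
    """Stable SHA-256 hex digest of a JSON-serializable object."""
    # Sorting keys makes the digest independent of dict ordering.
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_run_record(model_version, params, training_rows, metrics):
    """Bundle what is needed to trace a result back to its inputs."""
    return {
        "model_version": model_version,
        "params_hash": fingerprint(params),
        "data_hash": fingerprint(training_rows),
        "metrics": metrics,
    }


# Illustrative values only.
rows = [[1, 2], [3, 4]]
record_a = build_run_record("demand-forecast-0.1", {"window": 7, "seed": 42}, rows, {"mape": 0.05})
record_b = build_run_record("demand-forecast-0.1", {"seed": 42, "window": 7}, rows, {"mape": 0.05})

# Same parameters in a different order produce the same fingerprint.
print(record_a["params_hash"] == record_b["params_hash"])
```

Storing a record like this next to every deployed model makes it easier to answer which data and settings produced a given prediction.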

How can companies detect and prevent subtle biases or errors in AI systems before deployment?

AI failures can occur when hidden biases or errors skew outputs, leading to flawed decisions. Systematic validation, bias testing, and ongoing monitoring are essential. Ensuring diverse datasets, transparent algorithms, and rigorous evaluation frameworks mitigates risks. By detecting potential biases early, organizations protect operational integrity, maintain compliance, and increase confidence in AI solutions, transforming technology into a trusted enterprise asset.

What lessons can be learned from cross-industry AI failures in retail, manufacturing, or distribution?

Cross-industry AI failures show that context, integration, and operational readiness are critical. In retail, poor demand prediction models led to inventory issues; in manufacturing, misaligned AI caused production inefficiencies; in distribution, incomplete automation reduced throughput. Lessons include prioritizing business alignment, embedding AI into workflows, ensuring data quality, and preparing teams for adoption. Learning from these failures prevents repeating similar mistakes across sectors.

How do resource allocation and prioritization impact the success of AI programs?

AI failure is often linked to insufficient or misallocated resources. Teams may lack data engineers, model specialists, or operational support, causing delays and underperformance. Prioritizing high-impact initiatives, ensuring adequate staffing, and balancing experimentation with enterprise needs prevents wasted investment. Clear resource planning enables consistent execution, smoother adoption, and scalable AI programs that deliver measurable business outcomes.

Why is continuous monitoring and learning essential for sustaining AI performance over time?

Without continuous monitoring, AI failure becomes inevitable as models degrade, data drifts, or business contexts change. Ongoing evaluation ensures model accuracy, operational effectiveness, and alignment with evolving objectives. Implementing feedback loops, retraining schedules, and performance dashboards enables organizations to sustain impact, maintain trust, and maximize ROI, ensuring AI evolves as a reliable, enterprise-scale capability rather than a static experiment.

Rahul Chaudhary

Content Writer

With 5 years of experience in AI, software, and digital transformation, I’m passionate about making complex concepts easy to understand and apply. I create content that speaks to business leaders, offering practical, data-driven solutions that help you tackle real challenges and make informed decisions that drive growth.
