DALL-E is an artificial intelligence program that creates images from textual descriptions, revealed by OpenAI on January 5, 2021. It uses a 12-billion-parameter version of the GPT-3 transformer model to interpret natural language inputs and generate corresponding images.

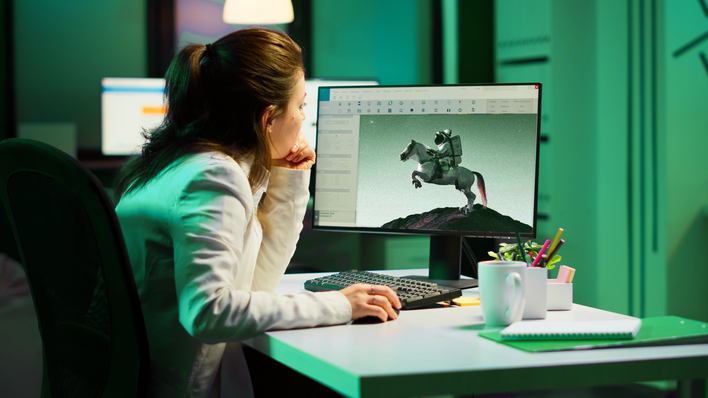

The program was demonstrated with inputs such as "an armchair in a living room" or "a pizza with pepperoni and mushrooms," which generated corresponding images. For some inputs, multiple distinct but plausible outputs were provided.

OpenAI did not release the model itself for download. Access to its successor, DALL-E 2, was instead offered through a hosted service and, since November 2022, through an API.

How does DALL-E 2 work?

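DALL-E 2 is a neural network that builds on the same transformer principles as GPT-3 to generate images from textual descriptions, but it goes one step further by pairing them with CLIP, a model trained to match images with their captions, and with diffusion models.

According to OpenAI's description of the system, the text input is first encoded into a CLIP text embedding. A second model, called the prior, turns that text embedding into a matching image embedding. A diffusion decoder then generates a 64x64 image from the image embedding, and two upsampling models enlarge it to 256x256 and finally 1024x1024 pixels. There is no explicit parsing of objects or 3D scene rendering; the layout emerges from what the models learned from image-caption pairs. The end result is a high-resolution, often photorealistic image.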
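To make the flow of data easier to picture, here is a toy, shape-only sketch of the stages OpenAI describes for DALL-E 2: text encoder, prior, diffusion decoder, and two upsamplers. Every function below is a hypothetical stand-in written for this article; none of it is OpenAI's code, and no real model is involved. Only the stage order and the 64 → 256 → 1024 resolutions come from the published description.

```python
from dataclasses import dataclass

EMBED_DIM = 8  # illustrative only; real embeddings are far larger


@dataclass
class Image:
    """Tracks only the size of an image, not its pixels."""
    width: int
    height: int


def encode_text(prompt: str) -> list[float]:
    """Stand-in for the text encoder: prompt -> fixed-size vector."""
    vec = [0.0] * EMBED_DIM
    for i, ch in enumerate(prompt):
        vec[i % EMBED_DIM] += ord(ch) / 1000.0
    return vec


def prior(text_embedding: list[float]) -> list[float]:
    """Stand-in for the prior: text embedding -> image embedding."""
    return [x * 0.5 for x in text_embedding]


def decode(image_embedding: list[float]) -> Image:
    """Stand-in for the diffusion decoder, which works at 64x64."""
    return Image(64, 64)


def upsample(image: Image, factor: int = 4) -> Image:
    """Stand-in for an upsampler that enlarges the image 4x per side."""
    return Image(image.width * factor, image.height * factor)


def generate(prompt: str) -> Image:
    text_emb = encode_text(prompt)
    image_emb = prior(text_emb)
    base = decode(image_emb)   # 64x64
    medium = upsample(base)    # 256x256
    return upsample(medium)    # 1024x1024


result = generate("Corgi and cat fixing the website, oil painting")
print(result.width, result.height)
```

The point of the sketch is the hand-off between stages: the prompt never reaches the decoder directly, only the embedding produced by the prior does.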

Benefits of DALL-E 2

DALL-E 2 offers several benefits over traditional image generation algorithms:

1. Photorealism

It can generate highly realistic images, making it ideal for applications that require lifelike images (e.g., virtual reality, augmented reality, and digital assistants).

2. Scalability

It generates images at up to 1024x1024 pixels, and because the model runs on OpenAI's servers, users can produce high-quality images without owning expensive hardware.

3. Flexibility

It is not limited to generating images from textual descriptions (e.g., "a picture of a dog"); it can also edit parts of an existing image based on a text instruction and produce variations of an image. This makes it possible to generate images on demand without needing a pre-made library of pictures.

4. Efficiency

It typically returns images within seconds through OpenAI's hosted service, with no local GPU required. This makes it possible to use DALL-E 2 in interactive applications, such as digital assistants and games, as long as a short wait is acceptable.

Interesting examples of DALL-E 2

Corgi and cat fixing the website, polaroid

Cat and corgi fixing the website, 8-bit pixel art

Corgi and cat fixing the website, oil painting
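The three captions above follow one pattern: a subject followed by a style modifier. A minimal sketch of that pattern, assuming a hypothetical helper named `build_prompt` (it is not part of any DALL-E tooling), could look like this:

```python
def build_prompt(subject: str, style: str) -> str:
    """Join a subject and a style modifier with a comma, as in the captions."""
    return f"{subject.strip()}, {style.strip()}"


# Style modifiers taken from the examples in this article.
STYLES = ["polaroid", "8-bit pixel art", "oil painting"]

prompts = [build_prompt("Corgi and cat fixing the website", s) for s in STYLES]

for prompt in prompts:
    print(prompt)
```

Keeping the subject fixed and changing only the style is a simple way to compare how the same scene is rendered in different looks.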

Limitations of DALL-E 2

DALL-E 2 is not perfect; it has some limitations that should be considered when using it for image generation:

1. Semantic accuracy

It can sometimes generate semantically inaccurate images (e.g., an image of a dog that does not look like an actual dog). This can happen when the text input is ambiguous, and OpenAI has also reported that the model struggles to attach the right attributes to the right objects and to render legible text inside images.

2. Object placement

It can sometimes place objects in an incorrect or unrealistic location in the generated image (e.g., a dog that is floating in the air). DALL-E 2 has no explicit 3D model of the scene; it learns spatial relationships from its training images, so relations such as "on top of" or "behind" are not always respected.

3. Input size

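DALL-E 2 works at fixed resolutions. The base image is generated at 64x64 pixels and then upsampled, and the API offers only square outputs of 256x256, 512x512, or 1024x1024 pixels. Text prompts are also capped in length (1,000 characters in the API).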

4. Output format

It returns images in PNG format. This is a choice made by the service rather than a constraint of the neural network, which only produces pixel values; images can be converted to other formats afterwards.
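Limits like these are easiest to handle by checking a request before it is sent. The sketch below is a hypothetical helper, not part of any OpenAI library, and it does not contact any server. It assumes the limits documented for the `dall-e-2` model in OpenAI's Images API at the time of writing (square sizes of 256, 512, or 1024 pixels, a 1,000-character prompt cap, and 1 to 10 images per request); these may change, so treat the constants as assumptions.

```python
# Assumed limits for the "dall-e-2" model; check the current API docs.
ALLOWED_SIZES = {"256x256", "512x512", "1024x1024"}
MAX_PROMPT_CHARS = 1000
MAX_IMAGES = 10


def build_image_request(prompt: str, size: str = "1024x1024", n: int = 1) -> dict:
    """Validate the inputs and return a request body as a plain dict."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError(f"prompt exceeds {MAX_PROMPT_CHARS} characters")
    if size not in ALLOWED_SIZES:
        raise ValueError(f"size must be one of {sorted(ALLOWED_SIZES)}")
    if not 1 <= n <= MAX_IMAGES:
        raise ValueError(f"n must be between 1 and {MAX_IMAGES}")
    return {"model": "dall-e-2", "prompt": prompt, "size": size, "n": n}


request_body = build_image_request(
    "Corgi and cat fixing the website, polaroid", size="512x512"
)
print(request_body)
```

Rejecting an unsupported size such as "64x64" locally gives a clearer error than waiting for the service to refuse the request.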

Final words

While DALL-E 2 is on the rise as an AI image generator, we can assume that similar tools are in the making and more will be rolled out. It is a refreshing concept and has shown us the path to a more innovative and brighter future for technology and people.

There is a fear that AI will take all jobs from humans, but that is not entirely true. With DALL-E 2, the illustrations and designs still need improvement.

If anything, such technologies will enable humans to create better and more interactive designs, with humans still supplying the input and assessing the quality of the output.

Niyati Madhvani

A flamboyant, hazel-eyed lady, Niyati loves learning new dynamics around marketing and sales. She specializes in building relationships with people through her conversational and writing skills. When she is not thinking about the next content campaign, you'll find her traveling and dwelling in books of any genre!
