In every corner of the modern enterprise (sales calls, service chats, contracts, reports, and product logs), valuable insights are hiding in plain sight. But most of this knowledge remains locked in silos, fragmented across teams and systems, too vast and unstructured for traditional tools to handle. The opportunity? If businesses could truly tap into this intelligence, they wouldn’t just move faster; they’d become dramatically smarter.

That’s the promise that drew enterprises to large language models (LLMs). Originally developed in research labs, LLMs were trained to understand and generate human-like text at scale, powering a wave of consumer breakthroughs from chatbots to code assistants. But as enterprises adopted them, a gap quickly emerged.

These models, while fluent, were context-blind. They couldn’t interpret industry-specific terminology, failed to reason over enterprise workflows, and raised serious concerns around data security, governance, and compliance. What businesses needed wasn’t just language intelligence; they needed models that aligned with their unique domains, systems, and decision logic.

This led to the emergence of Enterprise LLMs: purpose-built models that combine the language power of LLMs with enterprise-grade control, domain expertise, and deep system integration. Trained on proprietary and regulated data, and embedded within tools like ERP, CRM, and SCM, these models are engineered to turn data chaos into coordinated, intelligent action.

As industries shift toward intelligence-first operations, Enterprise LLMs are emerging as the backbone of modern business. This blog breaks down what they are, how they differ from generic models, and why now is the time for enterprises to adopt them to stay ahead.

What is an enterprise LLM

Enterprise large language models (Enterprise LLMs) represent a fundamental evolution in AI, moving from general-purpose, consumer-facing tools to domain-specific, business-optimized systems designed to power secure, context-rich intelligence at scale. Unlike public LLMs trained on diverse internet data, Enterprise LLMs are purpose-built for business environments where precision, compliance, governance, and operational integration are not optional; they’re foundational.

At their core, enterprise LLMs are generative AI models fine-tuned on an enterprise’s proprietary data, including documents, knowledge bases, system logs, ERP records, CRM interactions, and policy manuals. This domain-specific tuning, enabled by expert LLM development, allows them to reason over complex business contexts, provide grounded responses, and automate high-value knowledge workflows with confidence and traceability.

Where public LLMs deliver generic fluency, enterprise LLMs deliver relevance, trust, and actionability, making them strategic enablers of AI-native transformation across industries like manufacturing, retail, and distribution.

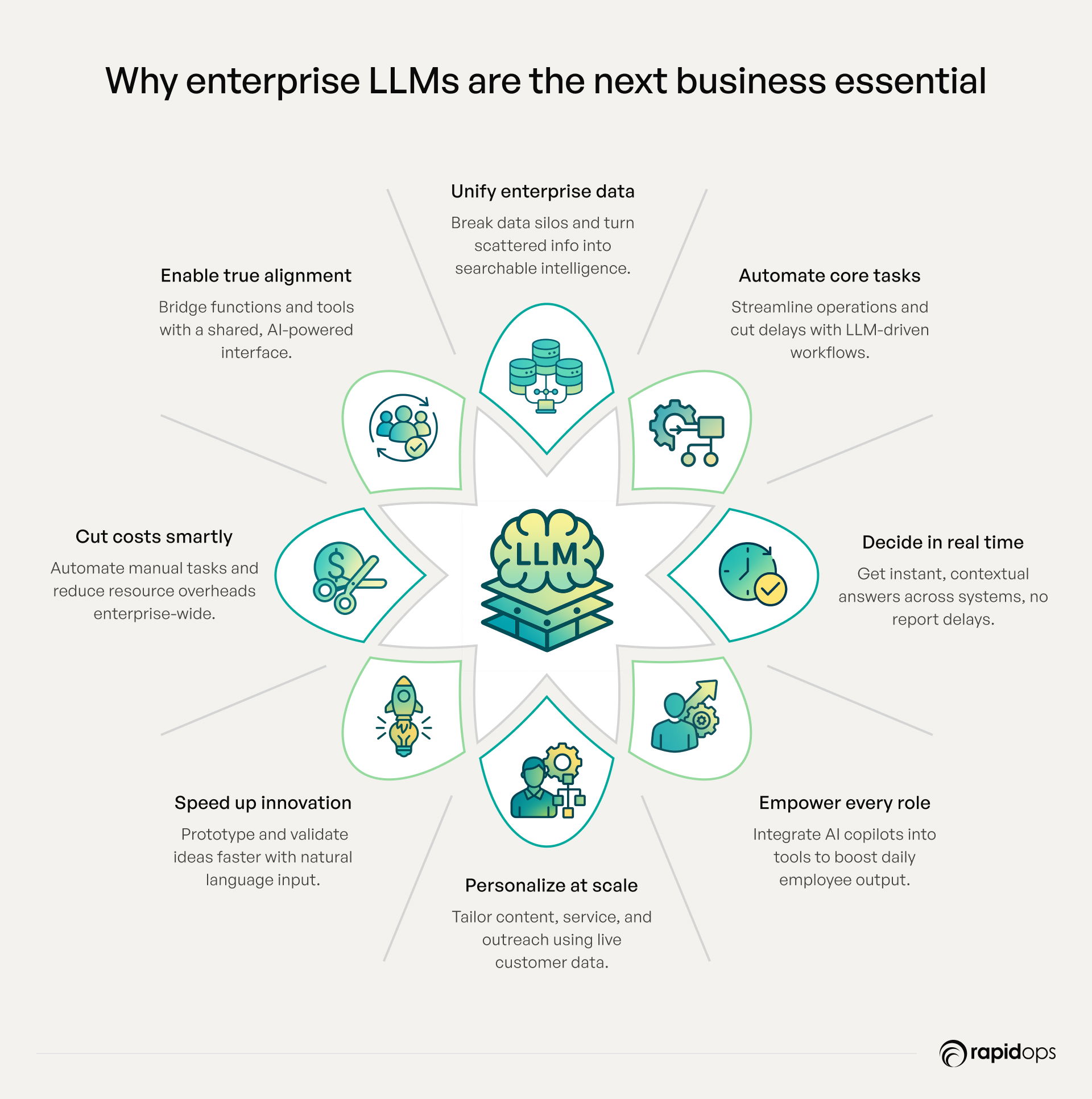

Why your business needs an enterprise LLM

Enterprises today are under mounting pressure to deliver faster decisions, operational efficiency, and differentiated customer experiences while navigating increasing data complexity, regulatory scrutiny, and cost constraints. Traditional tools are ill-equipped to extract intelligence from the exponential volumes of structured and unstructured data spread across departments, systems, and formats.

Enterprise-grade LLMs provide a purpose-built solution, enabling organizations to unify knowledge, automate intelligence-driven workflows, and unlock new dimensions of productivity, personalization, and innovation, all while maintaining governance, security, and cost-efficiency at scale.

1. Unify knowledge and break down data silos

Enterprise data lives in silos spanning CRMs, ERPs, emails, contracts, support tickets, call transcripts, and more. Enterprise LLMs act as an orchestration layer that understands language, context, and intent across disparate data sources. They transform disconnected content into a single, queryable intelligence surface, powering enterprise search, domain-specific Q&A, and contextual reasoning. This eliminates bottlenecks, democratizes access to knowledge, and creates a more agile, informed organization.

2. Automate workflows for efficiency and scalability

Enterprise LLMs automate time-intensive, knowledge-driven processes that span multiple tools and touchpoints. From summarizing policy documents and legal agreements to generating compliance reports and automating support resolution, LLMs drastically reduce cycle times. This automation is a core focus in AI agent development, where agents handle operational logic across systems without manual intervention.

3. Provide real-time, contextual insights for faster decision-making

Executives and teams often wait days or weeks for reports that answer basic but critical questions. LLMs remove these delays by providing natural language access to enterprise data in real time. With LLM-integrated dashboards, copilots, or chat interfaces, decision-makers can ask open-ended questions like “What’s driving sales decline in region X?” and receive precise, contextualized answers synthesized across systems. Initiatives in generative AI strategy and consulting are increasingly centered around these decision augmentation capabilities.

4. Boost employee productivity with AI copilots

By integrating LLM-powered copilots into internal tools, such as email, CRM, ERP, and knowledge bases, organizations empower employees to do more with less. Sales teams can auto-generate proposals. Legal can search contracts with natural language. HR can answer policy questions instantly. These copilots reduce time-to-information, eliminate friction in digital workflows, and elevate workforce productivity without disrupting existing systems.

5. Deliver hyper-personalized customer experiences

Consumer expectations are rising. Generic interactions won’t suffice. Enterprise LLMs enable fine-grained personalization, tailoring content, product recommendations, and support interactions using real-time customer data. Whether it’s dynamic chatbot responses, personalized email copy, or AI-enhanced sales outreach, LLMs enable brands to speak to each customer as an individual, driving higher satisfaction, loyalty, and lifetime value.

6. Foster innovation and shorten time-to-value

LLMs reduce the complexity of exploring new ideas. Business users can describe what they want in natural language, such as “simulate pricing impact under scenario Y,” and the model translates the request into the queries or tool calls needed to run the analysis. This accelerates prototyping, automates experimentation, and reduces dependence on technical gatekeepers. The result: faster innovation cycles, faster validation, and faster business impact.

7. Optimize costs and improve resource efficiency

LLMs don’t just create new capabilities; they reduce operational waste. By automating repetitive tasks, minimizing duplicated effort, and improving accuracy in knowledge work, they reduce cost per outcome across departments. Moreover, with reusable model architectures and scalable deployment options, enterprises reduce the risk of vendor lock-in and lower the long-term cost of ownership.

8. Enhance collaboration and knowledge flow across teams

Enterprise LLMs break the language barriers between departments, systems, and roles. They enable marketing to understand product data, finance to access operational details, and support teams to tap into sales insights, using a shared language interface. This boosts cross-functional collaboration and accelerates alignment, making the organization more responsive and resilient.

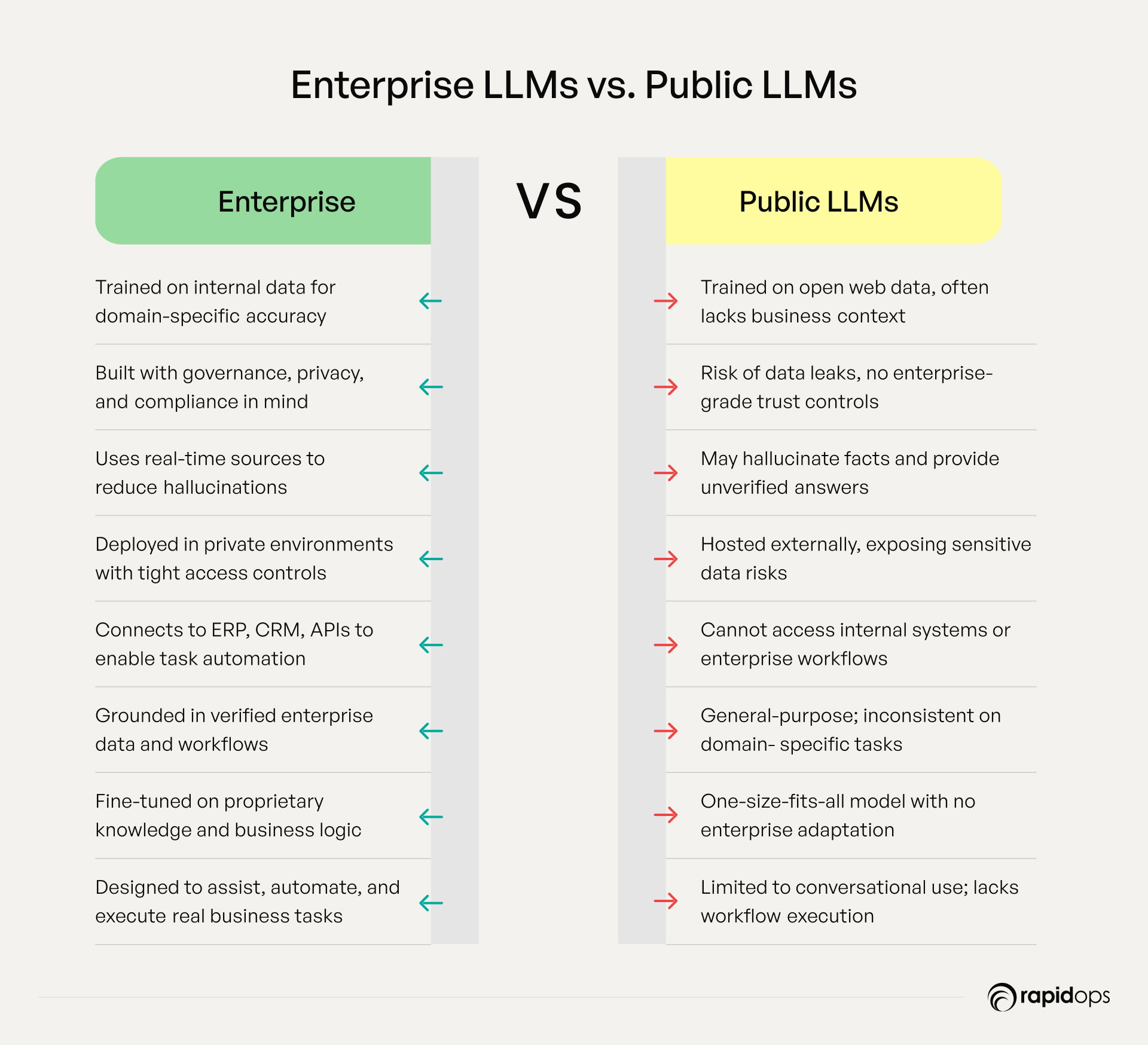

How enterprise LLMs differ from public LLMs

Public LLMs like ChatGPT, Claude, or Gemini are designed for broad, general-purpose tasks. Trained on massive internet-scale datasets, they perform well in open-ended conversations across diverse topics. However, in enterprise settings, these models often fall short: they can hallucinate facts, lack domain-specific context, and raise concerns around data privacy, compliance, and operational trust.

Enterprise LLMs, by contrast, don’t arrive ready-made for a specific business. They must be purposefully tailored using internal knowledge, domain-specific data, regulatory frameworks, and proprietary workflows. This deliberate customization is essential to ensure meaningful business alignment and safe deployment.

Here’s how enterprise LLMs stand apart.

- Contextual relevance: Enterprise LLMs are fine-tuned on private, domain-specific corpora such as internal policies, process documentation, and business data. This helps keep their outputs accurate, grounded, and contextually relevant, aligned with the terminology, workflows, and logic unique to the enterprise.

- Trust and compliance: Deployed within secure, governed environments complete with audit trails, role-based access, and responsible AI controls, enterprise LLMs meet enterprise-grade privacy, compliance, and intellectual property protection requirements.

- Reliable, grounded outputs: Integrated with vector databases and RAG pipelines, these models reduce hallucinations by grounding responses in real-time, verified enterprise sources.

- System interoperability: Enterprise LLMs interface with core systems (CRM, ERP, APIs, data lakes), enabling workflow execution, contextual search, and real-time decision support across business functions.

While public LLMs offer language fluency, enterprise LLMs deliver operational impact. They are architected not just to converse, but to function securely, contextually, and at scale within complex enterprise environments.

Why enterprise LLMs must be secure, integrated, and built for real business needs

Enterprise LLMs go far beyond generic models: they’re engineered as part of a secure, composable AI stack that aligns with enterprise systems, policies, and workflows.

They automate document-heavy tasks like policy comparison, audits, and knowledge retrieval, while embedding intelligence into platforms like SAP and Workday to drive real-time, context-aware decisions. Robust governance is built in: access controls, red-teaming, domain validation, and output traceability ensure compliance and ethical use, especially in regulated sectors like finance, life sciences, and public infrastructure.

While open models like Llama 3 or Cohere’s Command R+ offer flexibility, enterprise success depends on tailored integration: models must be fine-tuned, orchestrated, and deployed within a secure, governed environment that reflects business-specific data, logic, and regulations. It’s this foundation that enables enterprise LLMs to deliver safe, scalable, and trusted business value.

How RAG, vector databases, and orchestration make enterprise LLMs work

Enterprise-grade LLMs are rarely deployed in isolation. Their true potential is unlocked through orchestration frameworks and retrieval-enhancement layers, which elevate them from static generators to dynamic intelligence engines.

- Vector databases: Store semantically indexed embeddings of enterprise content, enabling context-aware retrieval and dynamic prompting.

- Retrieval-augmented generation (RAG): Enhances model accuracy and relevance by grounding generation in enterprise-authoritative sources at runtime.

- LLM orchestration frameworks: Manage interactions between LLMs, APIs, data sources, and execution environments, allowing intelligent chaining of tasks such as document parsing, knowledge enrichment, and workflow automation.

Together, these components enable LLMs to act as enterprise copilots capable of surfacing insights, executing multi-step actions, and making informed decisions on live business data with full traceability and governance.
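To make the retrieve-then-generate loop concrete, here is a minimal, dependency-free sketch. It assumes a toy bag-of-words vector in place of a real embedding model and an in-memory list in place of a vector database, and it stops at building the grounded prompt rather than calling any particular LLM API. The document IDs and policy text are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding' (assumption): lowercase term counts. Real systems call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class InMemoryVectorStore:
    """Stand-in for a vector database: stores (doc_id, text, vector), ranks by cosine similarity."""

    def __init__(self) -> None:
        self.items = []

    def add(self, doc_id: str, text: str) -> None:
        self.items.append((doc_id, text, embed(text)))

    def search(self, query: str, k: int = 2):
        q = embed(query)
        scored = sorted(((cosine(q, vec), doc_id, text) for doc_id, text, vec in self.items), reverse=True)
        # Drop passages with no similarity at all.
        return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]

def build_grounded_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Retrieve top-k passages and instruct the model to answer only from them, citing IDs."""
    hits = store.search(question, k)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )

# Hypothetical enterprise snippets.
store = InMemoryVectorStore()
store.add("policy-12", "Refunds over 500 USD require manager approval.")
store.add("sop-7", "Warehouse returns must be inspected within 48 hours.")
store.add("hr-3", "Employees accrue 1.5 vacation days per month.")
prompt = build_grounded_prompt("Who must approve a refund over 500 USD?", store)
print(prompt)
```

The toy tokenizer treats “refund” and “refunds” as unrelated words; capturing that kind of semantic similarity is exactly what a real embedding model and vector database add.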

Enterprise LLMs turn complexity into clarity by embedding intelligence into systems and workflows. When properly governed and aligned, they shift AI from experimentation to scalable execution, enabling automation, insights, and strategic control.

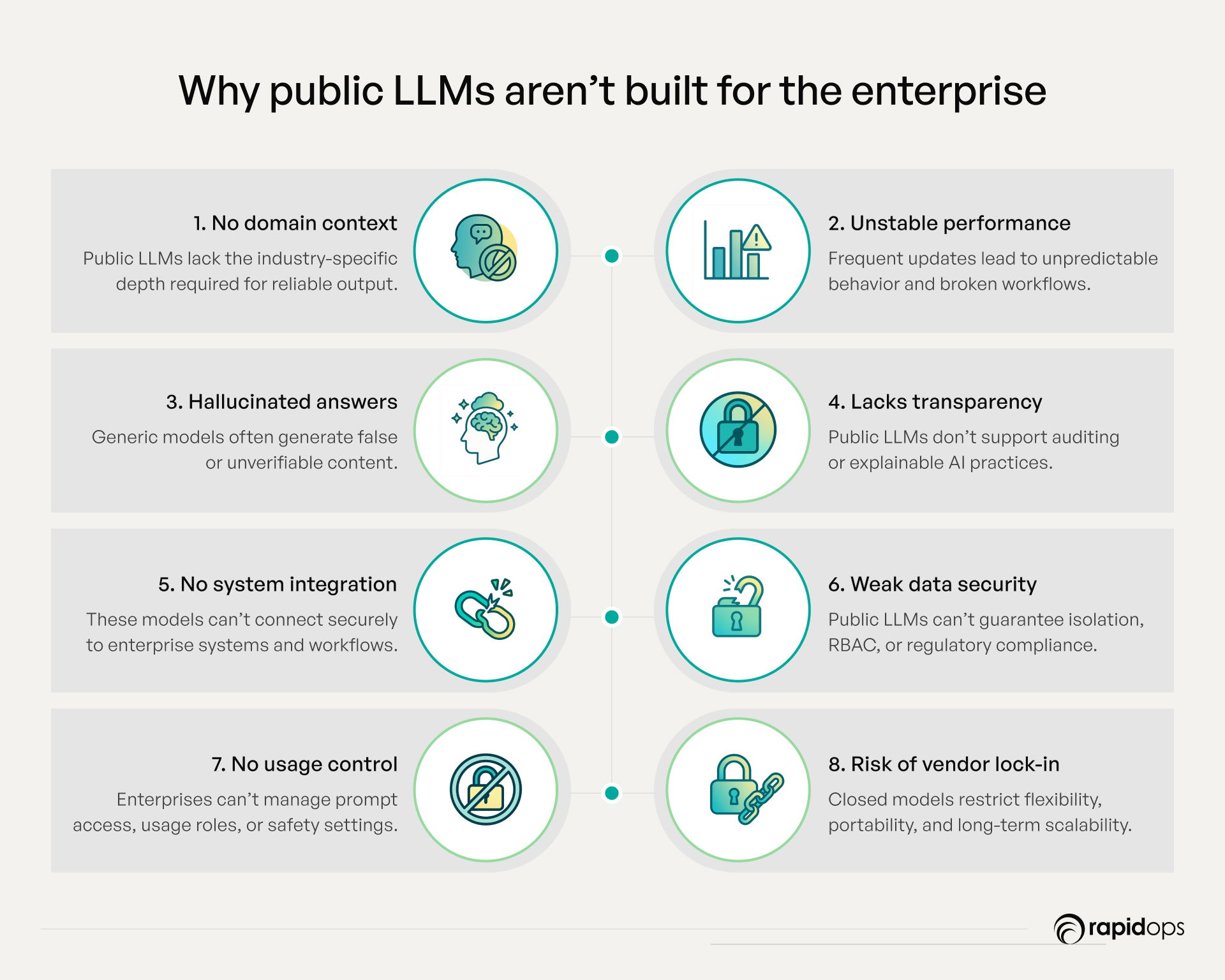

Why enterprises can’t just use public LLMs as-is

While public LLMs have captured mainstream attention, they fall short of meeting the operational, regulatory, and architectural requirements of enterprise-grade applications. For enterprises prioritizing trust, scale, and integration, these models often introduce more risk than value.

1. Lack of fine-tuning for domain-specific context

Public LLMs are trained on broad, open-domain data. Used as-is, they are not adapted to the nuanced, proprietary, and often regulated language of specific industries. Without LLM fine-tuning on private enterprise data, their outputs lack relevance and contextual precision.

2. No control over model behavior or updates

Enterprise environments demand stability, predictability, and version control. Hosted public LLMs can be updated, re-tuned, or retired on the provider’s schedule, introducing variability in output and performance. That makes it difficult to validate outcomes, maintain compliance, or run consistent production workflows.
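One common mitigation is to pin an explicit model version and gate every upgrade behind a regression check on a fixed “golden set” of prompts. The sketch below assumes a hypothetical `generate(model_id, prompt)` callable, made-up version strings, and simple substring checks as the acceptance criterion; real evaluations usually use richer scoring.

```python
from typing import Callable, Dict, List

# Hypothetical pinned model identifier. Production traffic points at this exact version,
# never at a floating alias such as "latest".
PINNED_MODEL = "acme-llm-2024-06-01"

# A small golden set: fixed prompts plus a fact each answer must contain.
GOLDEN_SET: List[Dict[str, str]] = [
    {"prompt": "What is our refund approval threshold?", "must_contain": "500"},
    {"prompt": "How fast must returns be inspected?", "must_contain": "48 hours"},
]

def pass_rate(generate: Callable[[str, str], str], model_id: str) -> float:
    """Fraction of golden prompts whose answer contains the expected fact."""
    passed = sum(1 for case in GOLDEN_SET
                 if case["must_contain"] in generate(model_id, case["prompt"]))
    return passed / len(GOLDEN_SET)

def approve_upgrade(generate: Callable[[str, str], str], candidate: str,
                    baseline: str = PINNED_MODEL) -> bool:
    """Promote a new model version only if it does at least as well as the pinned one."""
    return pass_rate(generate, candidate) >= pass_rate(generate, baseline)

# Stub standing in for real model endpoints (hypothetical versions and answers).
def fake_generate(model_id: str, prompt: str) -> str:
    answers = {
        "acme-llm-2024-06-01": {"refund": "Refunds over 500 USD need approval.",
                                "returns": "Within 48 hours."},
        "acme-llm-2024-09-01": {"refund": "Refunds need approval.",
                                "returns": "Within 48 hours."},
    }
    topic = "refund" if "refund" in prompt else "returns"
    return answers[model_id][topic]

print(approve_upgrade(fake_generate, "acme-llm-2024-09-01"))  # False: candidate drops the 500 USD threshold
```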

3. Hallucinations and unverifiable outputs

Generic LLMs can generate fluent but inaccurate or entirely fictional content, a critical risk in domains like finance or law. Without mechanisms to verify sources or validate answers, these models pose significant challenges for business-critical use.

4. Governance and transparency are missing

Enterprises require full visibility into how AI makes decisions. Public LLMs offer little to no transparency into internal reasoning, logs, or audit trails. This lack of explainability undermines trust and impedes regulatory compliance across sectors.

5. Inability to integrate with enterprise systems

AI must work in concert with internal systems, including ERPs, CRMs, knowledge bases, and APIs. Public LLMs are closed systems that don’t natively support secure, bi-directional integration with enterprise tech stacks, limiting their practical utility.

6. Security, privacy, and data isolation gaps

Consumer-facing public models typically don’t meet enterprise-grade security requirements. They often lack guarantees for data isolation, encryption at rest, role-based access control (RBAC), and compliance with regulations like HIPAA or GDPR, introducing unacceptable risks in regulated industries.

7. No prompt governance or access control

Without the ability to govern prompts, restrict usage by roles, or enforce safety filters, enterprises lose control over how the models are accessed and used. This opens the door to unintended behavior, misuse, and exposure of sensitive data.

8. Vendor lock-in risks limit strategic flexibility

Most public LLMs are closed-source and cloud-tied. This architecture not only limits transparency but also creates long-term vendor lock-in risks, making it difficult to shift infrastructure, ensure portability, or negotiate costs at scale.

Public LLMs fall short on control, security, and integration; enterprises need purpose-built models aligned with governance, workflows, and strategic data to scale AI responsibly.

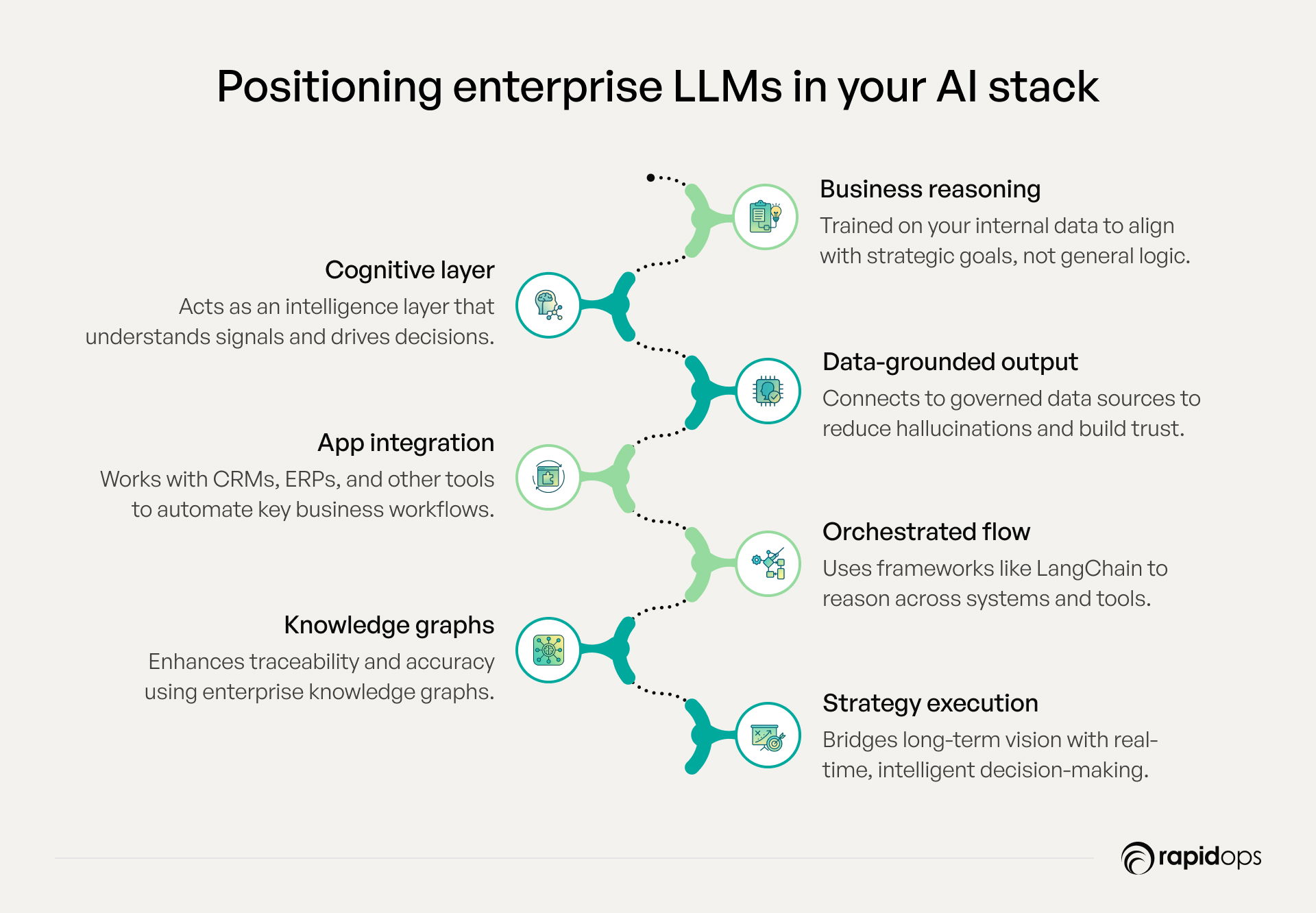

Where enterprise LLMs fit in your AI stack

Enterprise LLMs aren’t just another layer in the tech stack; they serve as the cognitive engine of your AI-native architecture. They connect data, systems, and decisions to deliver contextual, intelligent outputs across the business.

- Business-aligned reasoning: Enterprise LLMs are trained on your unique knowledge, internal processes, customer context, and proprietary IP, enabling responses that align with your strategic goals, not just general intelligence.

- Cognitive intelligence layer: Positioned above foundational data infrastructure, LLMs interpret signals, understand business language, and generate insights in real time.

- Data-grounded accuracy: They securely access data lakes, warehouses, and unstructured content, grounding outputs in governed enterprise sources, reducing hallucinations and ensuring trustworthy responses.

- Application integration: LLMs integrate with CRMs, ERPs, HR, and supply chain systems through APIs, enabling intelligent automation in everyday workflows like summarizing policies or assisting sales.

- Workflow orchestration: Frameworks like LangChain and LlamaIndex allow LLMs to retrieve, reason, and act across multiple tools and steps, enabling multi-hop decision-making and tool usage.

- Enhanced with knowledge graphs: Integrating with metadata systems and knowledge graphs improves accuracy, traceability, and relevance, especially in regulated environments.

- Execution engine for strategy: Ultimately, enterprise LLMs unify data, tools, and knowledge into adaptive capabilities that bridge long-term strategy with day-to-day operations.

This placement turns LLMs from static models into dynamic business enablers powering intelligence, agility, and impact across the enterprise.
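Frameworks such as LangChain and LlamaIndex provide their own abstractions for tool use; the framework-neutral sketch below only illustrates the underlying pattern. The model emits a structured tool call, the orchestrator validates it against a registry and executes it, and the result can be fed back as context for the next step. The tool names, their arguments, and the JSON shape of the model’s reply are assumptions, and the model itself is replaced by hard-coded replies.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}

def tool(name: str):
    """Register a function the model is allowed to call."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return decorator

@tool("get_inventory")
def get_inventory(sku: str) -> int:
    # Hypothetical ERP lookup; a real tool would call the ERP's API.
    return {"SKU-1": 42, "SKU-2": 0}.get(sku, 0)

@tool("create_reorder")
def create_reorder(sku: str, qty: int) -> str:
    # Hypothetical write action; real systems would add approvals and audit logging here.
    return f"PO created for {qty} x {sku}"

def execute_tool_call(model_reply: str) -> Any:
    """Parse a reply shaped like {"tool": ..., "args": {...}} and run the registered tool."""
    call = json.loads(model_reply)
    name = call.get("tool")
    if name not in TOOLS:
        raise ValueError(f"Model requested unknown tool: {name!r}")
    return TOOLS[name](**call.get("args", {}))

# Two-step chain: check stock, then reorder if empty (model replies are hard-coded here).
stock = execute_tool_call('{"tool": "get_inventory", "args": {"sku": "SKU-2"}}')
if stock == 0:
    print(execute_tool_call('{"tool": "create_reorder", "args": {"sku": "SKU-2", "qty": 100}}'))
```

Keeping an explicit registry means the model can only trigger actions the enterprise has deliberately exposed; anything else is rejected before execution.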

Core capabilities that make an LLM ‘enterprise-grade’

To unlock real business value from generative AI, enterprises must go beyond generic, off-the-shelf models. True enterprise-grade LLMs are not just defined by size or novelty; they are defined by their ability to integrate securely, scale reliably, and operate responsibly within complex business environments.

The following core capabilities distinguish enterprise-grade LLMs from consumer-grade or open models.

1. Custom fine-tuning on proprietary and domain-specific data

Enterprise-grade LLMs are fine-tuned using confidential, industry-specific, and operational data such as legal contracts, financial transactions, medical histories, or supply chain logs. This enables the model to deeply understand organizational context, specialized terminology, and compliance requirements, producing outputs that are relevant, precise, and aligned to business operations.

2. Granular access control and prompt governance

These models offer advanced security through role-based permissions, ensuring only authorized users can access sensitive data or functionalities. Prompt governance adds a layer of control, allowing enterprises to define what kinds of prompts are allowed, monitor intent, and enforce ethical and regulatory policies, which is critical for industries like finance or defense.
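A minimal sketch of how role-based permissions and a prompt policy might be enforced before a request ever reaches the model. The roles, capabilities, and blocked patterns are illustrative assumptions; production systems typically pull them from an identity provider and a managed policy store, and use far more robust detectors than these regular expressions.

```python
import re
from dataclasses import dataclass

# Illustrative role -> allowed capabilities mapping (assumption).
ROLE_PERMISSIONS = {
    "analyst": {"summarize", "search"},
    "legal": {"summarize", "search", "contract_review"},
}

# Illustrative prompt-policy rules: patterns that must never be sent to the model.
BLOCKED_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN-like numbers
    re.compile(r"ignore (all )?previous instructions", re.IGNORECASE),  # naive injection check
]

@dataclass
class Decision:
    allowed: bool
    reason: str

def check_request(role: str, capability: str, prompt: str) -> Decision:
    """Apply the role check first, then the prompt policy; unknown roles get no permissions."""
    if capability not in ROLE_PERMISSIONS.get(role, set()):
        return Decision(False, f"role '{role}' may not use '{capability}'")
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(prompt):
            return Decision(False, f"prompt matches blocked pattern {pattern.pattern!r}")
    return Decision(True, "ok")

print(check_request("analyst", "contract_review", "Review clause 4"))
print(check_request("legal", "search", "Find the file for SSN 123-45-6789"))
print(check_request("legal", "contract_review", "Review clause 4"))
```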

3. Deep observability and operational telemetry

Enterprise LLMs come with built-in monitoring, structured logging, and telemetry to track real-time performance, prompt behavior, response quality, and system anomalies. This level of observability enables continuous improvement, supports compliance reporting, and gives teams actionable insights into how the model is being used across applications.

4. Auditability and traceability of outputs

Every interaction, whether user-generated or system-initiated, can be traced, logged, and explained. This ensures complete transparency and accountability, allowing organizations to understand how decisions were made, verify outputs, and support audit requirements in highly regulated environments.
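One way to make such logs tamper-evident, sketched here as an assumption rather than a prescribed design, is to hash-chain the records: each entry stores the SHA-256 hash of the previous entry, so editing or deleting any past record breaks verification. The actors, prompts, and source IDs below are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(entry: Dict) -> str:
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

class AuditLog:
    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def append(self, actor: str, prompt: str, response: str, sources: List[str]) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "prompt": prompt, "response": response,
                "sources": sources, "prev_hash": prev_hash}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = AuditLog()
log.append("alice", "Summarize contract C-17", "Term is 3 years.", ["C-17#s2"])
log.append("bob", "Who approves refunds?", "A manager.", ["policy-12"])
print(log.verify())                               # True
log.entries[0]["response"] = "Term is 5 years."   # simulate tampering
print(log.verify())                               # False
```

A real deployment would also record timestamps and model versions and persist entries to write-once storage; the chaining only detects tampering, it does not prevent it.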

5. Cost-efficiency and resource optimization at scale

Enterprise LLMs are engineered for sustained performance without runaway costs. With optimized inference pipelines, workload-aware autoscaling, and the ability to run on dedicated or shared compute, they help organizations balance performance, price, and infrastructure utilization, enabling predictable scaling as demand grows.

6. Scalable inference and high availability

Enterprise workloads demand consistent, low-latency performance across thousands of concurrent users and massive data volumes. Enterprise-grade LLMs are optimized for autoscaling, load balancing, and redundancy to maintain uptime and performance, whether used in internal workflows or customer-facing platforms.
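Load balancing and redundancy are usually handled by infrastructure such as API gateways or managed endpoints, but the client-side logic is easy to sketch: rotate across replicas and fail over to the next one when a call errors. The replica names and the `call_replica` function are hypothetical stand-ins, with one replica simulated as down.

```python
import itertools
from typing import Callable, List

class FailoverBalancer:
    """Round-robin across replicas; on error, try the remaining replicas before giving up."""

    def __init__(self, replicas: List[str], call_replica: Callable[[str, str], str]):
        self.replicas = replicas
        self.call_replica = call_replica
        self._start = itertools.cycle(range(len(replicas)))

    def generate(self, prompt: str) -> str:
        first = next(self._start)  # rotate the starting replica on every request
        errors = []
        for offset in range(len(self.replicas)):
            replica = self.replicas[(first + offset) % len(self.replicas)]
            try:
                return self.call_replica(replica, prompt)
            except ConnectionError as exc:
                errors.append(f"{replica}: {exc}")
        raise RuntimeError("All replicas failed: " + "; ".join(errors))

DOWN = {"us-east-1"}  # simulated outage (hypothetical replica names)

def call_replica(replica: str, prompt: str) -> str:
    # Stand-in for an HTTP call to a model-serving endpoint.
    if replica in DOWN:
        raise ConnectionError("unreachable")
    return f"{replica} answered: {prompt}"

lb = FailoverBalancer(["us-east-1", "us-west-2", "eu-west-1"], call_replica)
for _ in range(3):
    print(lb.generate("ping"))
```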

7. Flexible and secure deployment options

To meet diverse security and compliance needs, these models support multiple deployment configurations, including on-premises, private cloud, virtual private cloud (VPC), and hybrid environments. This ensures full data sovereignty, control over infrastructure, and compliance with jurisdictional regulations, without compromising model performance.

8. Built-in disaster recovery and business continuity

Enterprise-grade deployments include geo-redundant backups, failover mechanisms, and automated disaster recovery to ensure business continuity. In the event of disruptions, whether due to system failure or security threats, services can resume with minimal data loss and downtime.

9. Seamless integration with enterprise ecosystems

Enterprise LLMs are built to plug into existing technology stacks. With native support for APIs, vector databases, orchestration tools, and retrieval-augmented generation (RAG) pipelines, these models can be embedded into CRMs, ERPs, knowledge systems, and decision engines, accelerating AI adoption without disrupting existing workflows.

High-impact enterprise LLM use cases driving real business value

The real promise of enterprise LLMs lies in how they quietly transform business, adapting supply chains mid-flight, accelerating sales with contextual intelligence, and reducing risk through systems that understand policy nuance. These aren’t pilots; they’re the invisible layer powering faster decisions, smarter workflows, and competitive advantage at scale. Here are detailed LLM use cases that highlight their impact across business functions.

1. AI assistant for customer support

Enterprise LLMs are elevating customer support beyond transactional interactions by powering intelligent assistants that understand the full context of each customer’s history, behavior, and needs. Unlike chatbots that rely on static scripts, these LLMs are trained on enterprise-specific support data (product guides, service records, ticket resolutions, and escalation protocols), enabling them to respond accurately to complex, multi-step queries.

Customers experience faster, more personalized resolution through natural language interfaces across chat, voice, and email. Meanwhile, support teams benefit from reduced average handle times, improved first-contact resolution, and lower operational costs. Over time, feedback loops and periodic retraining on real interactions can continue to improve accuracy and adaptability.

For enterprise service leaders, this translates into scalable, intelligent customer support that enhances satisfaction while freeing up human agents for high-value conversations.

2. Employee knowledge assistant

In large enterprises, frontline teams often struggle to find timely, relevant answers hidden across fragmented systems, outdated manuals, or static intranets. Enterprise LLMs address this challenge by serving as intelligent knowledge assistants, offering conversational access to SOPs, process documentation, and institutional knowledge contextualized by role, location, and task.

Whether a warehouse supervisor needs to confirm a shipping protocol or a field technician requires equipment calibration steps, LLMs deliver precise, real-time answers using natural language. These assistants stay up to date by integrating with systems like SharePoint, Confluence, and ticketing platforms, responding to complex, nuanced queries with traceable, source-linked outputs.

This dramatically reduces time-to-knowledge, eliminates bottlenecks, accelerates onboarding, and ensures knowledge continuity across teams and locations. It transforms scattered information into a unified, on-demand knowledge layer accessible enterprise-wide.

3. Contract and policy document analysis

Contracts, compliance documents, and vendor agreements often span hundreds of pages filled with nuanced legal language and buried clauses. Reviewing them manually is time-consuming, inconsistent, and prone to oversight. Enterprise LLMs are transforming this process by automating clause extraction, risk detection, and semantic comparison across documents.

Trained on domain-specific legal, procurement, and regulatory corpora, these models can highlight deviations from standard terms, identify missing clauses, and summarize obligations in plain language, potentially reducing review time from days to minutes. Legal, risk, and procurement teams can instantly surface red flags, benchmark terms against similar agreements, and maintain audit trails.

For enterprises managing large volumes of contracts, this means faster cycles, lower legal costs, improved compliance, and better negotiation leverage while empowering non-legal teams to act confidently on contractual information.
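A practical pattern here is to ask the model for structured output (for example, JSON listing the clauses it found) and then check that output deterministically against a playbook of required clauses. In the sketch below, the clause names, the 60-day payment standard, the JSON shape, and the model reply are all hypothetical; the checker flags missing clauses and one deviating term.

```python
import json
from typing import Dict, List

# Hypothetical playbook: clauses every vendor agreement must contain, plus a limit on one key term.
REQUIRED_CLAUSES = {"limitation_of_liability", "termination", "confidentiality", "data_protection"}
MAX_PAYMENT_TERMS_DAYS = 60

def review(model_json: str) -> Dict[str, List[str]]:
    """Compare clauses extracted by the model against the playbook."""
    extracted = json.loads(model_json)
    found = {c["type"] for c in extracted["clauses"]}
    issues: Dict[str, List[str]] = {"missing": sorted(REQUIRED_CLAUSES - found), "deviations": []}
    for clause in extracted["clauses"]:
        days = clause.get("payment_terms_days")
        if clause["type"] == "payment" and days is not None and days > MAX_PAYMENT_TERMS_DAYS:
            issues["deviations"].append(
                f"payment terms of {days} days exceed the {MAX_PAYMENT_TERMS_DAYS}-day standard "
                f"(page {clause.get('page', '?')})"
            )
    return issues

# What an extraction step might return for one contract (hypothetical).
model_reply = json.dumps({"clauses": [
    {"type": "termination", "page": 4},
    {"type": "confidentiality", "page": 6},
    {"type": "payment", "page": 9, "payment_terms_days": 90},
]})
print(review(model_reply))
```

Keeping the pass/fail logic outside the model makes the result repeatable and auditable: the model handles extraction, and every flagged item points back to a page for human review.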

4. AI copilots in core business apps (ERP, CRM, SCM)

Enterprise systems like SAP and Oracle are essential to daily operations, but they are often complex, siloed, and difficult to navigate for non-technical users. Embedding LLM-powered copilots directly into these applications changes that by turning every interface into an intelligent assistant.

These copilots understand the structure and semantics of enterprise data. They help users create reports, retrieve records, generate workflows, and answer operational queries using plain language, eliminating the need for SQL, scripting, or deep system training. For example, a supply chain analyst can ask, “Show all shipments delayed over 5 days last quarter,” and get accurate results with context and recommendations.

The result is reduced dependency on IT, improved user productivity, and greater system adoption. By surfacing insights contextually and enabling intelligent automation within core systems, enterprise LLMs unlock the full value of existing tech investments.
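A common safeguard for copilots like the shipment example is to have the model produce a structured query specification instead of raw SQL, then validate it against an allowlist and compile it into a parameterized statement. The table, columns, JSON spec, and the dates standing in for “last quarter” are assumptions for illustration, using an in-memory SQLite database.

```python
import json
import sqlite3

# Allowlist of queryable tables/columns and permitted operators (assumption).
SCHEMA = {"shipments": {"id", "carrier", "ship_date", "delay_days"}}
OPERATORS = {"=", ">", ">=", "<", "<="}

def compile_query(spec_json: str):
    """Turn a model-produced spec into parameterized SQL, rejecting anything off the allowlist."""
    spec = json.loads(spec_json)
    table = spec["table"]
    if table not in SCHEMA:
        raise ValueError(f"table not allowed: {table}")
    clauses, params = [], []
    for flt in spec.get("filters", []):
        if flt["field"] not in SCHEMA[table] or flt["op"] not in OPERATORS:
            raise ValueError(f"filter not allowed: {flt}")
        clauses.append(f"{flt['field']} {flt['op']} ?")
        params.append(flt["value"])  # values are bound, never interpolated
    where = " WHERE " + " AND ".join(clauses) if clauses else ""
    return f"SELECT * FROM {table}{where}", params

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE shipments (id INTEGER, carrier TEXT, ship_date TEXT, delay_days INTEGER)")
conn.executemany("INSERT INTO shipments VALUES (?, ?, ?, ?)", [
    (1, "FastFreight", "2024-02-10", 7),
    (2, "FastFreight", "2024-03-02", 2),
    (3, "OceanLine", "2024-03-20", 12),
    (4, "OceanLine", "2024-05-05", 9),
])

# What a model might emit for "Show all shipments delayed over 5 days last quarter",
# assuming "last quarter" resolves to Q1 2024.
spec = json.dumps({"table": "shipments", "filters": [
    {"field": "delay_days", "op": ">", "value": 5},
    {"field": "ship_date", "op": ">=", "value": "2024-01-01"},
    {"field": "ship_date", "op": "<=", "value": "2024-03-31"},
]})
sql, params = compile_query(spec)
rows = sorted(conn.execute(sql, params).fetchall())
print(sql)
print(rows)
```

Because table and column names come only from the allowlist and values are bound as parameters, a malformed or malicious spec is rejected rather than executed as arbitrary SQL.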

5. Root cause analysis for operational incidents

Downtime, delivery failures, and quality defects often originate from subtle, recurring issues hidden in complex data: incident reports, maintenance logs, support tickets, and machine outputs. Traditional analytics struggle to connect these dots in real time. Enterprise LLMs bring a new approach by ingesting unstructured and semi-structured data to uncover causal patterns and narrative explanations.

These models don’t just identify what happened; they interpret why it happened by recognizing semantic links across systems and timeframes. For example, they can correlate vibration data from a sensor with an increase in quality complaints and technician notes, thereby pinpointing a failing motor before a line shutdown.

This empowers ops, engineering, and quality teams to move from reactive firefighting to proactive prevention. In high-velocity industries like manufacturing, logistics, or utilities, this capability reduces MTTR (Mean Time to Resolution), enhances uptime, and supports continuous operational improvement.
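Before a model can reason about root causes, the evidence has to be assembled. A hedged sketch of one preparatory step: gather events for one asset from several systems, keep those within a time window before the incident, and order them into a single source-labeled timeline that can be placed in the prompt. The sources, asset IDs, 14-day window, and events are invented for illustration.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Event = Tuple[datetime, str, str, str]  # (timestamp, source system, asset_id, text)

def build_timeline(events: List[Event], asset_id: str, incident_at: datetime,
                   window: timedelta = timedelta(days=14)) -> str:
    """Merge events for one asset from all sources within `window` before the incident, oldest first."""
    relevant = sorted(
        e for e in events
        if e[2] == asset_id and incident_at - window <= e[0] <= incident_at
    )
    lines = [f"{ts:%Y-%m-%d %H:%M} [{source}] {text}" for ts, source, _, text in relevant]
    header = f"Timeline for {asset_id} before incident at {incident_at:%Y-%m-%d %H:%M}:"
    return "\n".join([header] + lines)

# Hypothetical events pulled from sensor, maintenance, and quality systems.
events = [
    (datetime(2024, 5, 2, 8, 0), "sensor", "motor-7", "Vibration RMS 4.1 mm/s (baseline 2.0)"),
    (datetime(2024, 5, 6, 14, 30), "quality", "motor-7", "3 complaints: surface scratches on line 2 output"),
    (datetime(2024, 5, 5, 9, 15), "maintenance", "motor-7", "Technician note: bearing noise, lubrication applied"),
    (datetime(2024, 3, 1, 10, 0), "maintenance", "motor-7", "Routine inspection, no issues"),
    (datetime(2024, 5, 6, 9, 0), "sensor", "pump-2", "Pressure normal"),
]
timeline = build_timeline(events, "motor-7", incident_at=datetime(2024, 5, 8, 6, 0))
print(timeline)
```

The model then receives ordered, source-labeled evidence to explain instead of raw logs; any causal hypothesis it proposes still needs confirmation by engineers.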

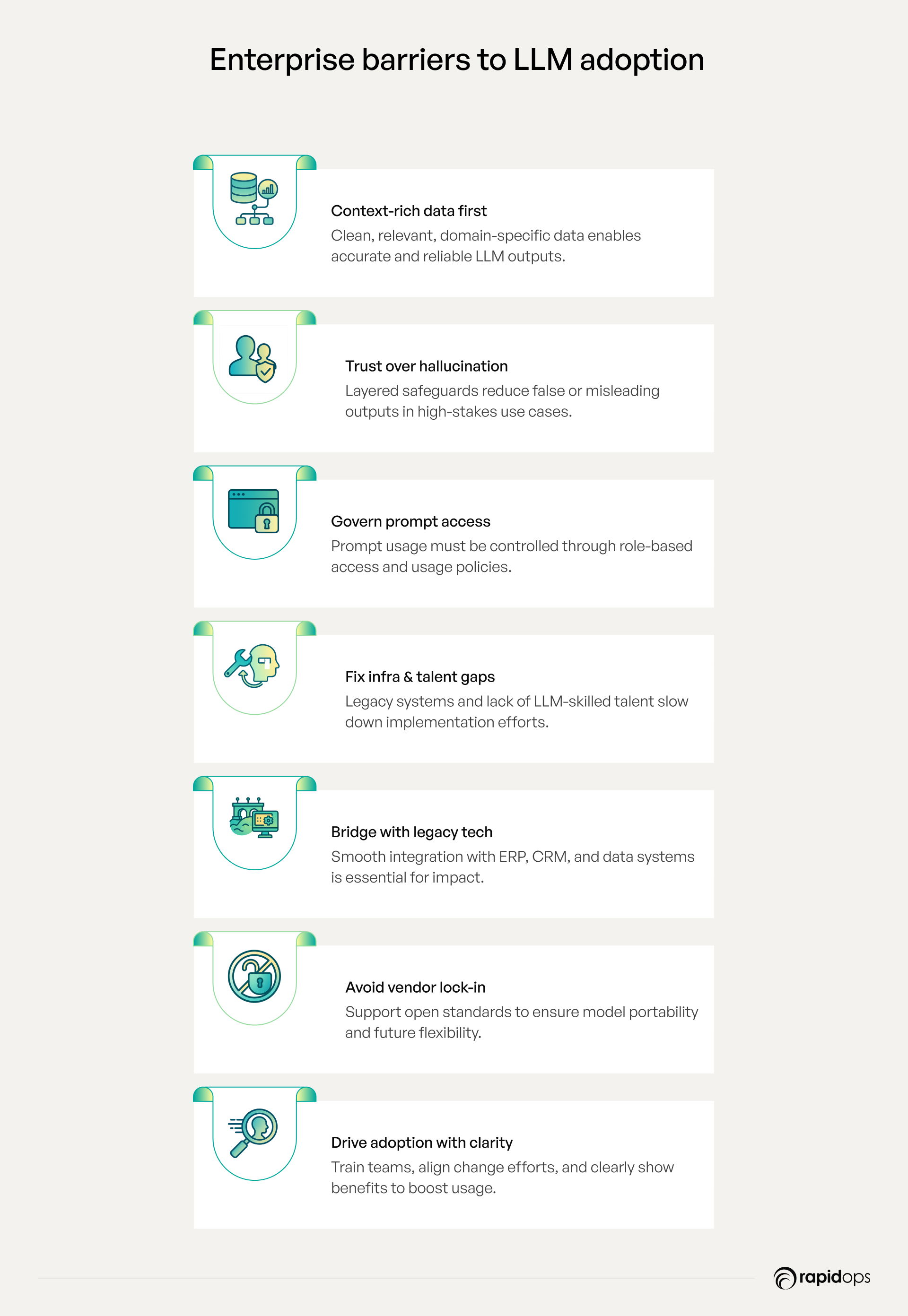

What are the key enterprise challenges in adopting LLMs

While LLMs offer breakthrough capabilities, adopting them at an enterprise level demands far more than technical integration. Organizations face challenges around domain alignment, trust, scalability, and governance, each of which must be addressed strategically to unlock sustainable, responsible, and business-aligned value from LLMs.

1. Grounding LLMs in high-quality, context-rich enterprise data

The effectiveness of enterprise LLMs hinges on the quality, relevance, and structure of the data they’re trained or augmented with. It’s not just about volume; it’s about signal over noise. Clean, well-labeled, domain-rich data ensures that the model understands business context, adheres to regulatory nuance, and drives outcomes that are reliable, auditable, and aligned with real-world workflows.

2. Hallucinations and trust in outputs

LLMs can generate confident but factually incorrect outputs known as hallucinations. In high-stakes environments, this undermines trust, decision accuracy, and regulatory compliance. Enterprises must implement layered safeguards such as retrieval-augmented generation (RAG), human-in-the-loop review, and structured grounding to mitigate these risks.
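One shallow but useful form of structured grounding can be sketched in a few lines: require the model to cite retrieved source IDs in square brackets, then route any answer with no citations, or with citations to sources that were never retrieved, to human review. The bracketed-ID convention is an assumption that the prompt would have to request, and the source IDs are hypothetical.

```python
import re
from typing import List, Set

CITATION = re.compile(r"\[([A-Za-z0-9_#.-]+)\]")  # assumed citation format: [source-id]

def grounding_check(answer: str, retrieved_ids: Set[str]) -> List[str]:
    """Return a list of problems; an empty list means the answer passes this (shallow) check."""
    cited = set(CITATION.findall(answer))
    problems = []
    if not cited:
        problems.append("no citations")
    unknown = cited - retrieved_ids
    if unknown:
        problems.append(f"cites sources that were not retrieved: {sorted(unknown)}")
    return problems

def route(answer: str, retrieved_ids: Set[str]) -> str:
    return "human_review" if grounding_check(answer, retrieved_ids) else "auto_send"

retrieved = {"policy-12", "sop-7"}
print(route("Refunds over 500 USD need manager approval [policy-12].", retrieved))  # auto_send
print(route("Refunds over 500 USD need CFO sign-off [policy-99].", retrieved))       # human_review
print(route("Refunds need CFO sign-off.", retrieved))                               # human_review
```

This only confirms that citations point at real, retrieved sources; it does not prove the cited passage supports the claim, which is why high-stakes outputs still need human-in-the-loop review.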

3. Prompt governance and access control

Without proper oversight, prompt-based interactions can inadvertently expose sensitive data or generate inconsistent results. Organizations need prompt management frameworks, access policies, and role-based guardrails to standardize usage and ensure model behavior aligns with enterprise values and compliance mandates.

4. Infrastructure readiness and talent gaps

Legacy IT environments often lack the scalability, orchestration, and observability required to run LLMs efficiently. At the same time, organizations face a shortage of talent skilled in LLMOps, model evaluation, and secure AI integration, further slowing adoption.

5. Integration with legacy systems

LLMs must operate within the broader enterprise ecosystem, including ERP, CRM, data lakes, and line-of-business tools. Creating interoperable workflows requires robust APIs, middleware orchestration, and careful data harmonization to avoid disrupting mission-critical systems.

6. Vendor lock-in and lack of model portability

Choosing a proprietary foundation model without a clear migration strategy can result in vendor lock-in, limiting flexibility and increasing long-term costs. Enterprises must prioritize model portability, support for open formats and tooling (e.g., ONNX, MLflow), and the ability to run models across cloud, hybrid, and on-prem environments.
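At the application level, portability is easier when business logic depends on a narrow interface rather than on a specific vendor SDK. A sketch using Python’s `typing.Protocol`; both backends are hypothetical stubs, not real client libraries, and the backend names are assumptions.

```python
from typing import Dict, Protocol

class TextModel(Protocol):
    """The only model surface application code is allowed to depend on."""
    def complete(self, prompt: str, max_tokens: int = 256) -> str: ...

class HostedVendorModel:
    # Hypothetical adapter; a real one would wrap a vendor SDK and honor max_tokens.
    def complete(self, prompt: str, max_tokens: int = 256) -> str:
        return f"[hosted] {prompt}"

class SelfHostedModel:
    # Hypothetical adapter for an open-weights model served on-premises.
    def complete(self, prompt: str, max_tokens: int = 256) -> str:
        return f"[self-hosted] {prompt}"

BACKENDS: Dict[str, TextModel] = {"hosted": HostedVendorModel(), "on_prem": SelfHostedModel()}

def summarize_ticket(model: TextModel, ticket: str) -> str:
    # Business logic sees only TextModel, so switching backends becomes a configuration change.
    return model.complete(f"Summarize this support ticket: {ticket}", max_tokens=200)

for name in ("hosted", "on_prem"):
    print(summarize_ticket(BACKENDS[name], "Printer offline since Monday"))
```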

7. Change management and user adoption

LLMs introduce new ways of working, from natural language interfaces to AI-augmented decision-making. Ensuring adoption requires not only intuitive interfaces but also ongoing training, stakeholder alignment, and clear communication of benefits and limitations to reduce resistance.

Enterprise LLMs, built around what makes you unique

The next era of business isn’t just about data or technology; it’s about unlocking the full potential of your people, your processes, and your purpose. Enterprise LLMs are the enabler of that shift, empowering your teams to move faster, collaborate more deeply, and innovate with confidence.

We understand that embracing AI at the enterprise level can feel complex. There’s uncertainty. There are risks. And there’s the challenge of aligning innovation with your organization’s values and responsibilities. But you don’t have to navigate it alone.

Enterprise LLMs aren’t plug-and-play tools; they need to be thoughtfully shaped around your unique culture, security posture, and business objectives. That’s where Rapidops comes in. We’re more than a technology partner; we’re your guide through this transformation. From identifying the right opportunities to building enterprise-ready solutions, we help you move forward with clarity, care, and long-term impact.

Curious how enterprise LLMs can deliver meaningful value in your business?

Schedule an appointment with one of our enterprise LLM experts for a free, personalized consultation focused on your goals, challenges, and the outcomes that matter most to your business.

Frequently Asked Questions

How does an enterprise LLM work?

An enterprise LLM ingests structured and unstructured business data such as documents, emails, product information, and operational metrics, and turns that data into a knowledge layer that can power intelligent interactions across teams, systems, and workflows. Using techniques like retrieval-augmented generation (RAG), prompt engineering, and fine-tuning, it grounds outputs in enterprise context while helping ensure accuracy, compliance, and security. It can be deployed on-premises, in the cloud, or in a hybrid configuration, depending on data governance needs.

What kind of data do enterprise LLMs use?

Enterprise LLMs are trained or fine-tuned on a combination of public knowledge and private business data. This includes product catalogs, knowledge base articles, contracts, emails, operational logs, customer interactions, and even data from internal tools. Vector databases and RAG pipelines help contextualize responses using this data without exposing it outside enterprise environments.

Can enterprise LLMs use real-time data from our business?

Yes. Enterprise LLMs can be architected to pull in real-time data via APIs or middleware from systems such as CRMs, ERPs, supply chain platforms, or IoT devices. This enables context-aware responses that reflect live business conditions, empowering tasks like dynamic customer support, inventory optimization, or sales enablement.

How do companies fine-tune LLMs using their data?

Companies typically fine-tune LLMs by feeding domain-specific documents, structured datasets, and real-world queries into the model using supervised learning or parameter-efficient tuning methods (like LoRA or adapters). This process adjusts the model’s outputs to reflect business terminology, reasoning, and workflows. Some enterprises also use prompt engineering and RAG-based architectures as low-lift alternatives to full fine-tuning.
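For readers who want the mechanics of LoRA: it freezes a pretrained weight matrix and learns only a low-rank update, scaled by a constant. With illustrative sizes chosen here (a 4096 × 4096 projection and rank 8, not figures from any particular model), the number of trainable parameters for that matrix shrinks sharply:

```latex
\begin{aligned}
h &= W_0 x + \Delta W x = W_0 x + \tfrac{\alpha}{r}\, B A x,\\
&\quad W_0 \in \mathbb{R}^{d \times k} \text{ (frozen)},\; B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)\\[4pt]
\text{full fine-tuning of } W_0 &:\; d\,k = 4096 \times 4096 = 16{,}777{,}216 \text{ trainable parameters}\\
\text{LoRA on } W_0 &:\; r\,(d + k) = 8 \times (4096 + 4096) = 65{,}536 \text{ trainable parameters} \;(= 1/256 \approx 0.39\%)
\end{aligned}
```

Only A and B are trained and stored per task, which is why adapters are small enough to version and swap per business domain.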

What’s the best way to start with enterprise LLMs in a low-risk way?

Begin with a defined, high-impact use case, such as an internal knowledge assistant or document summarization, and validate it through a proof of concept (POC). Leverage open-source or private foundation models, apply guardrails, and use techniques like RAG to limit risk. Start with minimal data exposure and scale gradually across systems once governance and performance benchmarks are validated.

Rahul Chaudhary

Content Writer

With 5 years of experience in AI, software, and digital transformation, I’m passionate about making complex concepts easy to understand and apply. I create content that speaks to business leaders, offering practical, data-driven solutions that help you tackle real challenges and make informed decisions that drive growth.

