Enterprise leaders today face a critical decision point in AI adoption. While many organizations have achieved initial efficiency gains with general-purpose large language model (LLM) tools such as ChatGPT and Claude, these off-the-shelf solutions fall short of delivering the competitive differentiation and strategic value that justify significant technology investments.

The fundamental limitation is clear: off-the-shelf AI lacks the institutional knowledge, industry expertise, and business context required to drive meaningful outcomes. Generic responses don't align with brand standards, don't understand complex business processes, and can't leverage the competitive intelligence that defines market leaders.

LLM fine tuning addresses this strategic gap by transforming generic AI into customized business capabilities. Rather than accepting AI that operates at surface level, the fine tuning process creates systems that understand organizational knowledge, follow established protocols, and deliver results that reflect institutional expertise.

For enterprise leaders evaluating AI investments, LLM fine tuning represents the difference between cost-saving automation and revenue-generating competitive advantage. This article provides a strategic framework for understanding, evaluating, and implementing LLM fine tuning initiatives that create measurable business value.

What is LLM Fine Tuning Exactly?

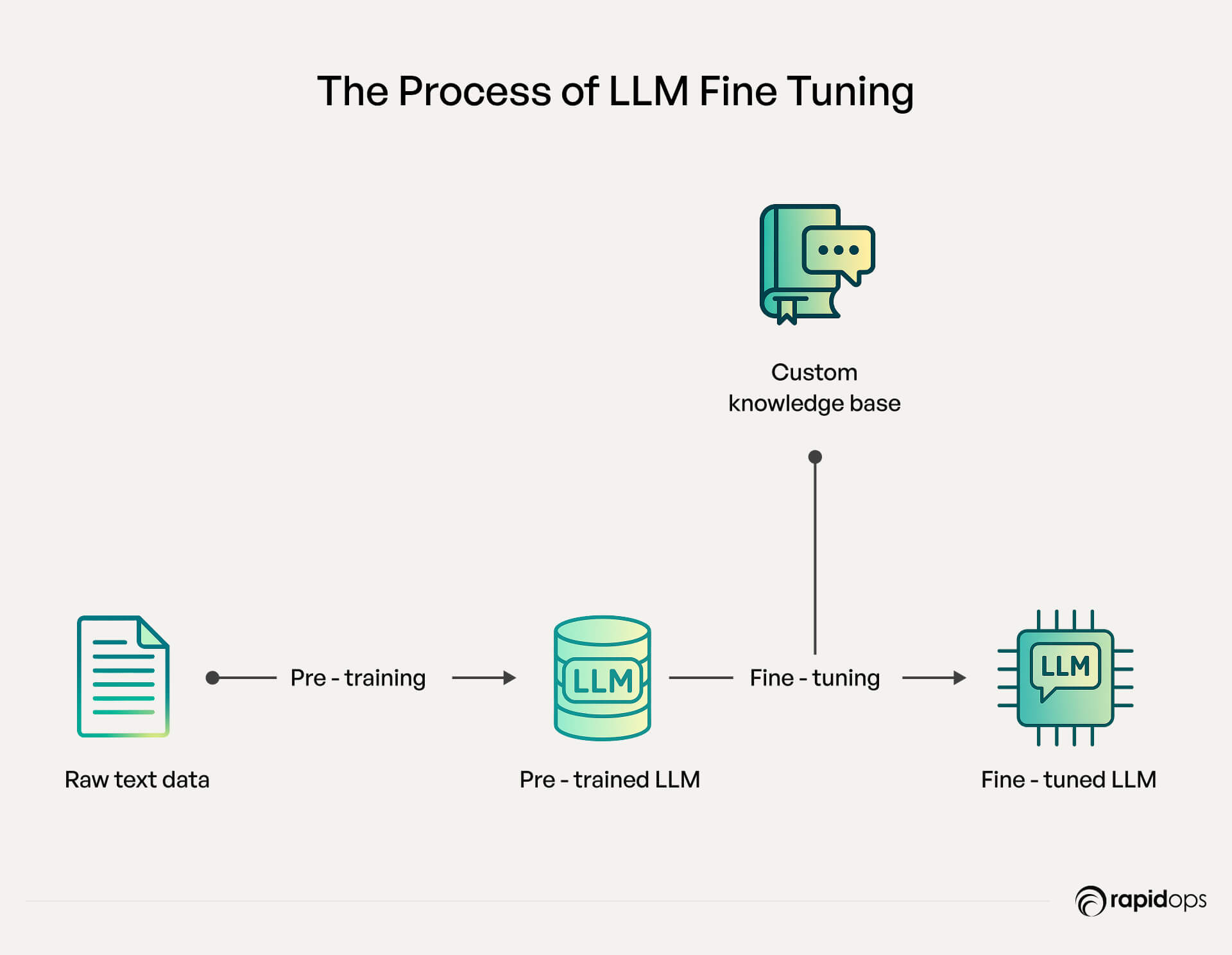

The concept builds upon pre-trained large language models that already possess sophisticated language understanding capabilities. These base models have been trained on vast amounts of general text, giving them broad knowledge across many domains. The fine tuning process takes these foundational capabilities and specializes them for specific business applications through additional training on company-specific training data and use cases.

This approach delivers several strategic advantages over starting from scratch. Building large language models from the ground up requires massive computational resources, extensive datasets, and specialized expertise that only a handful of organizations possess. LLM fine tuning leverages the existing model's capabilities while adding the specialization that makes AI truly valuable for specific business applications.

The fine tuning process involves training the model on carefully curated datasets that reflect the organization's knowledge base, hard-learned operational wisdom, communication patterns, decision-making processes, and desired outcomes. This might include customer interaction histories, technical documentation, successful sales communications, compliance procedures, or any other information that represents how the organization operates at its best.
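As a minimal sketch of what curated training data can look like, the Python below converts hypothetical support examples into chat-style JSON Lines records. The `messages`/`role`/`content` layout mirrors the format several hosted fine-tuning services use for chat models, but the exact schema here is an assumption; check the documentation of whichever platform or training library you use. The company name and policy text are invented for illustration.

```python
import json

# Hypothetical curated examples; company name and policy wording are illustrative only.
CURATED_EXAMPLES = [
    {
        "system": "You are Acme Corp's support assistant. Answer concisely and cite the policy.",
        "user": "Can I return an opened item?",
        "assistant": "Yes. Opened items can be returned within 30 days under our Returns Policy.",
    },
    {
        "system": "You are Acme Corp's support assistant. Answer concisely and cite the policy.",
        "user": "Do you ship internationally?",
        "assistant": "We currently ship to the US and Canada, as described in our Shipping Policy.",
    },
]


def to_chat_record(example: dict) -> dict:
    """Convert one curated example into a chat-style training record (assumed schema)."""
    return {
        "messages": [
            {"role": "system", "content": example["system"]},
            {"role": "user", "content": example["user"]},
            {"role": "assistant", "content": example["assistant"]},
        ]
    }


def to_jsonl(examples: list) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_record(e), ensure_ascii=False) for e in examples) + "\n"


if __name__ == "__main__":
    print(to_jsonl(CURATED_EXAMPLES), end="")
```

The assistant turns are where the organization's expertise is captured, so they are the part of each record that most deserves expert review.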

When executed properly, the fine tuning process creates AI systems that don't just understand language; they understand the business. These systems can generate responses that align with company standards, make recommendations based on institutional knowledge, and maintain consistency with established brand voice and values. The result is AI that operates as an extension of organizational expertise rather than a generic tool that requires constant oversight and correction.

5 Key Business Benefits of Fine-Tuning LLMs

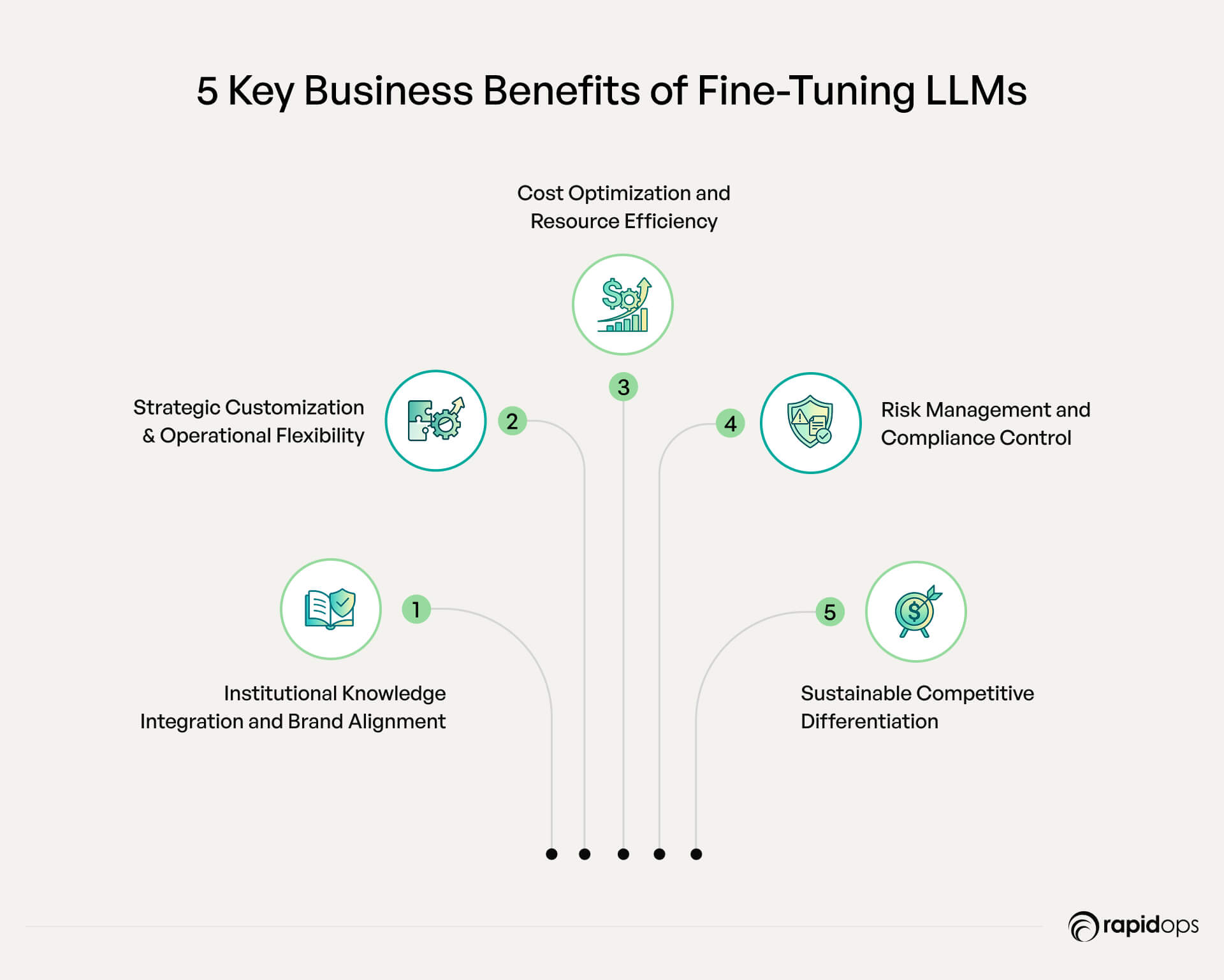

Institutional Knowledge Integration and Brand Alignment

LLM fine tuning transforms accumulated institutional knowledge into scalable AI capabilities, shifting from siloed expertise to organization-wide intelligence. Through comprehensive training on organizational data, fine-tuned models access and apply decades of institutional learning consistently across all interactions.

Fine-tuned models understand the context behind decisions, the reasoning driving successful outcomes, and the nuances distinguishing high-performing organizations from competitors. This enables AI systems to deliver responses that maintain the same quality standards and value propositions that the brand is known for.

Brand consistency becomes achievable when AI systems understand not just what to communicate, but how to communicate it. Fine-tuned models learn proven communication patterns, tone preferences, and messaging strategies, ensuring every AI-generated interaction reinforces brand values and maintains professional standards customers expect.

Strategic Customization and Operational Flexibility

The fine tuning process provides considerable flexibility in tailoring AI capabilities to specific business requirements. Unlike off-the-shelf solutions that force processes to adapt to software limitations, fine-tuned models can be customized to support existing workflows while still delivering efficiency gains.

Organizations can train different models for different business functions, customer segments, or domain-specific tasks. Sales teams can use models optimized for lead qualification, while technical teams use models trained on product specifications and troubleshooting procedures. Each model is tuned for the use case it is meant to serve.

The ability to modify and update models as business requirements evolve provides strategic agility that traditional software cannot match. Fine-tuned models can be retrained to reflect changing market conditions, customer preferences, and business strategies, ensuring AI capabilities remain aligned with current objectives.

Cost Optimization and Resource Efficiency

LLM fine tuning can deliver substantial cost advantages over alternative AI implementation approaches. Rather than paying per API call for external AI services, organizations can deploy fine-tuned models on their own infrastructure, replacing per-call usage fees with infrastructure and operating costs that, at sufficient volume, can lower long-term operational expenses.
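Whether self-hosting actually saves money depends on volume. The sketch below compares a usage-priced API against a fixed monthly self-hosting cost. Every number in the example is hypothetical, and treating self-hosting as a flat monthly figure is a simplifying assumption; real costs vary with hardware utilization, staffing, and redundancy.

```python
def monthly_api_cost(requests: int, tokens_per_request: int, usd_per_million_tokens: float) -> float:
    """Usage-priced cost: every token processed by the external API is billed."""
    return requests * tokens_per_request * usd_per_million_tokens / 1_000_000


def break_even_requests(fixed_monthly_usd: float, tokens_per_request: int,
                        usd_per_million_tokens: float) -> float:
    """Monthly request volume at which a flat self-hosting cost equals the API bill."""
    usd_per_request = tokens_per_request * usd_per_million_tokens / 1_000_000
    return fixed_monthly_usd / usd_per_request


if __name__ == "__main__":
    # Hypothetical inputs: 1,500 tokens per request, $2 per million tokens,
    # and $8,000 per month for GPUs, hosting, and operations.
    print(monthly_api_cost(1_000_000, 1_500, 2.0))   # 3000.0
    print(break_even_requests(8_000, 1_500, 2.0))    # volume where self-hosting breaks even
```

With these made-up inputs the API bill is $3,000 at one million requests a month and the break-even point is roughly 2.67 million requests; below that volume, the usage-priced API would be cheaper.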

The efficiency gains compound across multiple business functions. Automated processes that previously required human intervention can operate continuously without additional labor costs. Customer service inquiries, document processing, and content generation scale without proportional increases in staffing requirements.

The fine tuning process also reduces the total cost of ownership compared to building AI capabilities from scratch. Organizations leverage existing pre-trained model capabilities rather than investing millions in foundational AI development, accelerating time-to-market while minimizing capital expenditure.

Risk Management and Compliance Control

Fine-tuned models provide superior control over AI behavior and outputs compared to generic solutions. Organizations can train models to follow specific compliance protocols, adhere to regulatory requirements, and maintain consistency with established risk management frameworks.

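The ability to audit and modify a model's behavior reduces operational risks associated with AI deployment. Unlike black-box external AI services, fine-tuned models let organizations control and document exactly which data the model was trained on, evaluate its outputs against internal test sets, and implement controls that support compliance with industry regulations. The model itself remains a neural network, so this is control over training inputs and evaluation rather than full visibility into how each individual output is produced.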

Data security and intellectual property protection can improve significantly with fine-tuned models, particularly when they are hosted on infrastructure the organization controls. Sensitive business information then remains within organizational boundaries rather than being processed by external AI services, reducing exposure to data breaches and competitive intelligence risks.

Sustainable Competitive Differentiation

The fine tuning process creates defensible competitive advantages that cannot be easily commoditized or replicated. While competitors can access the same base models, they cannot replicate the specific training, domain expertise, and custom applications defining an organization's fine-tuned capabilities.

This differentiation compounds over time as organizations accumulate more data, refine training processes, and develop sophisticated applications. The gap between custom AI capabilities and generic solutions continues widening, creating sustained competitive advantages that become increasingly difficult for competitors to match.

Organizations with sophisticated, fine-tuned AI capabilities can offer services and experiences that competitors cannot match, enabling premium pricing, improved customer retention, and market share gains that directly impact financial performance and shareholder value.

Popular LLM Models for Enterprise Fine Tuning

The landscape of available models for enterprise fine tuning presents both opportunities and strategic considerations. Understanding the trade-offs between different pre-trained model options is essential for making informed decisions that align with long-term business objectives and operational requirements.

Major technology companies like Meta, OpenAI, Anthropic, and Google have developed powerful base models that serve as foundations for LLM fine tuning initiatives. These LLM development companies focus on creating the underlying AI architectures, while specialized fine tuning companies help enterprises customize these models for specific business applications.

Open-Source Models: Control and Cost Efficiency

Openly available pre-trained models such as Meta's Llama 2 and Llama 3 have gained significant traction in enterprise environments. Meta releases their weights under its own community license rather than a standard open-source license, but its terms let most organizations control their fine-tuned versions, avoid per-token licensing fees, and reduce dependency on external providers. Organizations can deploy these models on their own infrastructure, supporting data security and regulatory compliance while maintaining full control over the model's performance and behavior.

Mistral represents another compelling open-source option, particularly for organizations prioritizing efficiency and cost-effectiveness. The model's architecture enables strong performance with lower computational requirements, making it accessible to organizations with limited technical infrastructure. Code Llama has proven particularly valuable for domain-specific tasks, offering specialized capabilities for software development, documentation, and technical support functions.

Commercial Fine Tuning Services: Ease of Implementation

Commercial fine-tuning services from OpenAI, Anthropic, and Google offer ease of implementation by handling technical complexity and providing professional support. This allows organizations to focus on business applications rather than infrastructure, accelerating time-to-value.

However, these large providers often offer limited customization. Their solutions tend to follow standardized processes that may not fully meet specific industry or business needs. Additionally, their services come at a premium, with high costs even for minor adjustments.

In contrast, many enterprises turn to specialized fine-tuning firms that provide deeper customization, greater flexibility, and industry-specific expertise, often at a more reasonable cost.

Industry-Specific Pre-Trained Models: Domain Expertise Foundation

Industry-specific pre-trained models offer strategic starting points for organizations with specialized requirements. Legal technology providers can leverage models already trained on legal documents and terminology. Healthcare organizations can build upon models that understand medical terminology and regulatory requirements. Financial services firms can utilize models trained on financial data and compliance frameworks.

These specialized models provide head starts for the fine tuning process by incorporating domain knowledge that would otherwise require extensive additional training data and expertise.

Strategic Selection Considerations

The selection decision should align with organizational capabilities, strategic objectives, and risk tolerance. Organizations with strong technical capabilities and data security requirements often favor open-source approaches. Companies prioritizing rapid implementation and professional support may find working with experienced fine tuning specialists more attractive. The key is matching model characteristics with business requirements and partnering with providers who understand enterprise implementation challenges.

LLM Fine Tuning Methods Explained

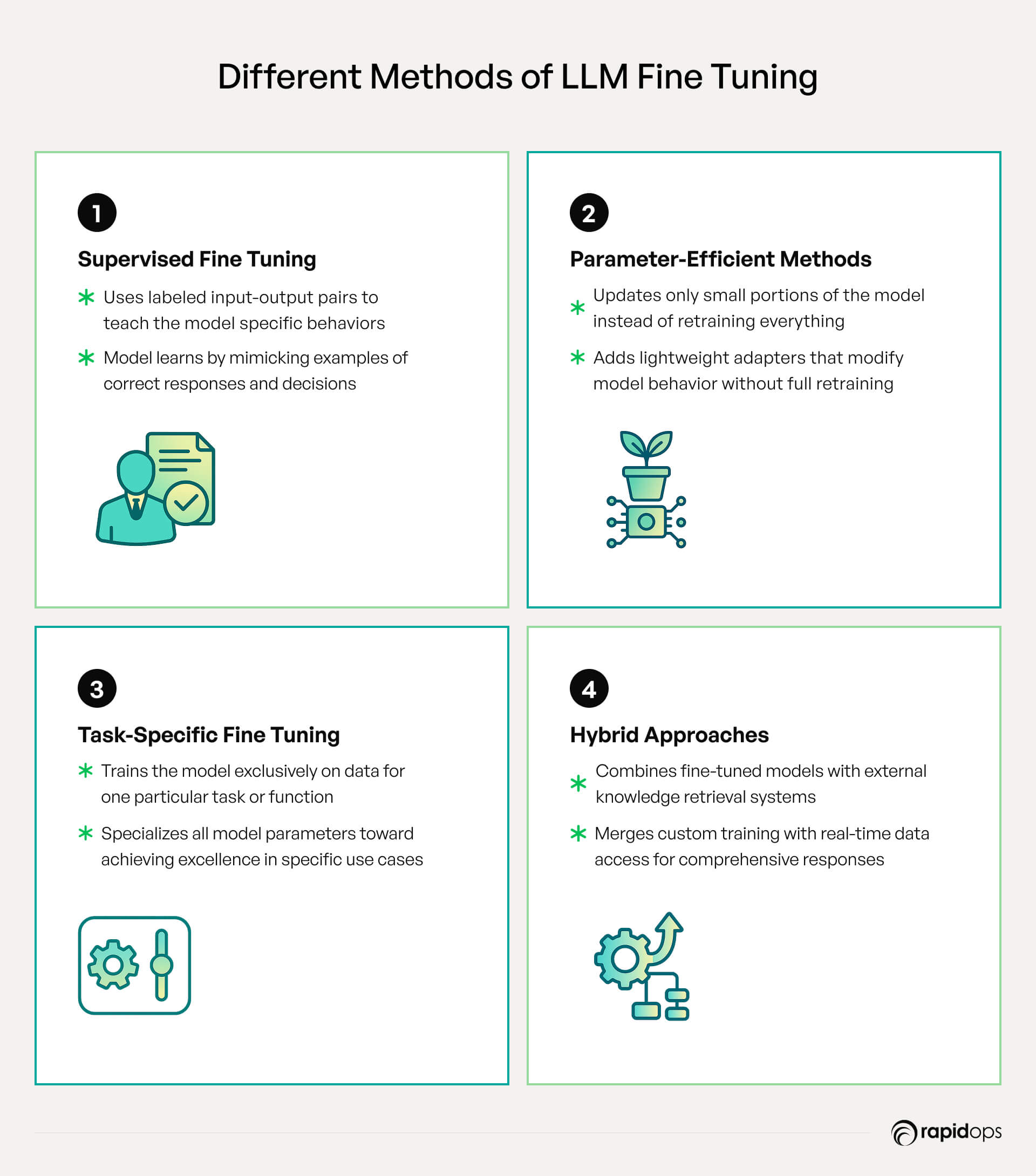

Supervised Fine Tuning: Foundation for Business Applications

Supervised fine tuning provides direct control over model behavior by training on specific input-output pairs that reflect desired business outcomes. This approach works exceptionally well for well-defined, domain-specific tasks with clear success criteria. Organizations compile examples from their highest-performing employees, most successful customer interactions, and best-practice documentation to train models that replicate proven success patterns.

The methodology requires comprehensive training data that demonstrate successful interactions, decisions, or outcomes. Customer service teams can train models on successful resolution examples, while sales teams can use winning communication patterns. The model learns to replicate these successful patterns, effectively scaling expertise across the organization.

Success depends heavily on training data quality and coverage. Training datasets must represent the full range of scenarios the model will encounter while maintaining consistent quality standards. The predictability of supervised fine tuning makes it particularly attractive for business-critical applications where consistent performance is essential.
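One common detail of supervised fine tuning is that the loss is computed only on the response tokens, so the model learns to produce the desired output rather than to repeat the prompt. The sketch below builds one training example that way. The whitespace tokenizer is a hypothetical stand-in (real models use subword tokenizers), and `-100` is used because it is the default `ignore_index` of PyTorch's `CrossEntropyLoss`, a convention Hugging Face Transformers also follows.

```python
IGNORE_INDEX = -100  # PyTorch's CrossEntropyLoss skips targets with this value by default


def toy_tokenize(text: str, vocab: dict) -> list:
    """Hypothetical whitespace tokenizer that grows the vocabulary as it goes."""
    ids = []
    for word in text.split():
        if word not in vocab:
            vocab[word] = len(vocab)
        ids.append(vocab[word])
    return ids


def build_sft_example(prompt: str, response: str, vocab: dict) -> tuple:
    """Return (input_ids, labels) with prompt positions masked out of the loss."""
    prompt_ids = toy_tokenize(prompt, vocab)
    response_ids = toy_tokenize(response, vocab) + [vocab["<eos>"]]
    input_ids = prompt_ids + response_ids
    # Labels line up position-by-position with input_ids; causal LM training code
    # typically shifts them by one internally before computing the loss.
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels


if __name__ == "__main__":
    vocab = {"<eos>": 0}
    input_ids, labels = build_sft_example("Q: reset password", "Use the portal", vocab)
    print(input_ids)  # [1, 2, 3, 4, 5, 6, 0]
    print(labels)     # [-100, -100, -100, 4, 5, 6, 0]
```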

Parameter-Efficient Methods: Optimizing Resource Utilization

Parameter-efficient methods like LoRA (Low-Rank Adaptation) have transformed enterprise AI implementation by dramatically reducing computational requirements, often with quality comparable to full fine tuning. Rather than updating every weight, LoRA freezes the pre-trained model and trains small low-rank adapter matrices attached to selected layers, which typically amount to a small fraction of the original parameter count. This lets organizations achieve LLM fine tuning benefits without massive infrastructure investments.
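The idea behind LoRA can be shown with plain Python. The pre-trained weight matrix `W` stays frozen, and the learned update is represented by two small trainable matrices, `A` (r × d_in) and `B` (d_out × r), scaled by `alpha / r`, so the layer computes `W x + (alpha / r) · B(A x)`. The toy matrices below are illustrative rather than taken from any real model, and production work would use a library such as Hugging Face PEFT instead of hand-written math.

```python
def matvec(M: list, v: list) -> list:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(W, A, B, x, alpha: float, r: int) -> list:
    """Frozen path W·x plus the scaled low-rank update (alpha / r)·B·(A·x)."""
    base = matvec(W, x)                # pre-trained weights, never updated
    update = matvec(B, matvec(A, x))   # only A and B are trained
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d_in: int, d_out: int, r: int) -> tuple:
    """(full fine-tuning count, LoRA count) for one d_out x d_in weight matrix."""
    return d_out * d_in, r * (d_in + d_out)


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]   # frozen 2x2 weights
    A = [[1.0, 1.0]]               # r = 1, d_in = 2
    B = [[0.0], [0.0]]             # B starts at zero, so the adapter is a no-op at first
    x = [2.0, 3.0]
    print(lora_forward(W, A, B, x, alpha=1.0, r=1))   # [2.0, 3.0]
    B = [[0.5], [0.0]]             # pretend training has updated B
    print(lora_forward(W, A, B, x, alpha=1.0, r=1))   # [4.5, 3.0]
    full, lora = trainable_params(4096, 4096, r=8)
    print(full, lora, f"{lora / full:.2%}")           # 16777216 65536 0.39%
```

For a single 4096 × 4096 projection at rank 8, the adapter trains 65,536 values instead of about 16.8 million, which is why several adapters can share one base model cheaply.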

The strategic advantage extends beyond cost savings. Parameter-efficient methods enable organizations to maintain multiple specialized model variants for different business functions or customer segments. Sales teams, customer service departments, and technical support groups can each have optimized models while sharing underlying infrastructure and maintaining consistent performance standards.

This approach also facilitates experimentation and iteration. Organizations can test different training approaches, compare performance across variants, and refine models without significant resource commitments. Deployment flexibility allows multiple model variants to be maintained simultaneously, with different versions activated based on user requirements or business contexts.
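Serving several variants usually means loading the base model once and choosing a lightweight adapter per request. The sketch below shows only the selection logic; the adapter names, version numbers, and the `active` flag are hypothetical, and the step that actually loads an adapter depends on the serving stack and is omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AdapterVariant:
    name: str
    version: str          # "major.minor", compared numerically
    active: bool = True   # lets a variant be staged without serving it


# Hypothetical registry: business context -> available adapter variants.
REGISTRY = {
    "sales": [AdapterVariant("sales-lead-qual", "1.2"),
              AdapterVariant("sales-lead-qual", "1.3", active=False)],
    "support": [AdapterVariant("support-resolution", "2.0")],
}


def _version_key(variant: AdapterVariant) -> tuple:
    return tuple(int(part) for part in variant.version.split("."))


def select_adapter(context: str, registry=REGISTRY) -> Optional[AdapterVariant]:
    """Newest active variant for a business context, or None to fall back to the base model."""
    candidates = [v for v in registry.get(context, []) if v.active]
    return max(candidates, key=_version_key) if candidates else None


if __name__ == "__main__":
    print(select_adapter("sales"))   # version 1.2, because 1.3 is staged but inactive
    print(select_adapter("legal"))   # None: no adapter, use the base model
```

Keeping inactive variants in the registry lets a team stage a new version and switch it on without redeploying the base model.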

Task-Specific Fine Tuning: Precision-Targeted Capabilities

Task-specific fine tuning focuses model capabilities on particular business functions, delivering strong performance for defined use cases while optimizing resource utilization. This approach is particularly effective for organizations with clearly defined AI requirements and established success metrics.

Classification applications enable automated decision-making for routine business processes. Customer inquiry routing, document categorization, compliance monitoring, and quality assessment benefit from models trained specifically for classification tasks. Content generation applications require models that understand organizational communication standards, brand voice, and audience preferences.
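For classification work such as inquiry routing, success criteria can be made concrete with accuracy plus per-label precision and recall on a held-out set. A minimal sketch follows; the labels and predictions are made-up examples, not results from any model.

```python
from collections import Counter


def routing_report(gold: list, predicted: list) -> dict:
    """Accuracy plus per-label (precision, recall) for a routing classifier."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must be the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, predicted):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1   # predicted label p was wrong
            fn[g] += 1   # true label g was missed
    per_label = {}
    for label in sorted(set(gold) | set(predicted)):
        predicted_n = tp[label] + fp[label]
        actual_n = tp[label] + fn[label]
        per_label[label] = (
            tp[label] / predicted_n if predicted_n else 0.0,   # precision
            tp[label] / actual_n if actual_n else 0.0,         # recall
        )
    accuracy = sum(tp.values()) / len(gold) if gold else 0.0
    return {"accuracy": accuracy, "per_label": per_label}


if __name__ == "__main__":
    gold = ["billing", "billing", "technical", "returns"]
    predicted = ["billing", "technical", "technical", "returns"]
    print(routing_report(gold, predicted))
```

Per-label recall matters for routing: a label with low recall means those inquiries are being sent to the wrong team, even if overall accuracy looks acceptable.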

Technical applications like code generation benefit significantly from task-specific fine tuning. Models trained on organizational codebases, development standards, and architectural patterns can generate code that integrates seamlessly with existing systems while maintaining quality and security standards. The limitation is reduced flexibility for applications outside the training scope, requiring organizations to balance specialization benefits against versatility requirements.

Hybrid Approaches: Combining Techniques for Enhanced Performance

Modern enterprise implementations often combine multiple approaches to maximize the model's performance. Retrieval augmented generation (RAG) systems can be integrated with fine-tuned models to provide both specialized knowledge and access to real-time information. This hybrid approach leverages the strengths of both techniques while mitigating their individual limitations.

The combination of retrieval augmented generation with fine-tuned models enables organizations to maintain up-to-date information while preserving the specialized language understanding capabilities that fine tuning provides. This approach is particularly valuable for applications requiring both domain expertise and current information access.
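A hybrid setup adds a retrieval step before the fine-tuned model is called. The sketch below uses simple bag-of-words cosine similarity so it runs with the standard library alone; production RAG systems typically use embedding models and a vector index instead. The documents are hypothetical, and the call to the fine-tuned model itself is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, documents: list, k: int = 2) -> str:
    """Prepend retrieved context so the fine-tuned model answers from current facts."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using the context below.\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Returns are accepted within 30 days of delivery.",
        "Standard shipping takes 3 to 5 business days.",
        "Premium support is available 24 hours a day.",
    ]
    print(build_prompt("How many days do I have for returns?", docs, k=1))
```

Keeping frequently changing facts in the retrieved documents means they can be updated without retraining, while the fine-tuned model supplies tone, format, and domain reasoning.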

Organizations can also apply transfer learning in stages: a pre-trained model is first fine-tuned for general business applications, then further specialized for individual domain-specific tasks. This layered approach improves the efficiency of the fine tuning process while supporting strong performance across different use cases.

How to Fine Tune an LLM: Strategic Implementation

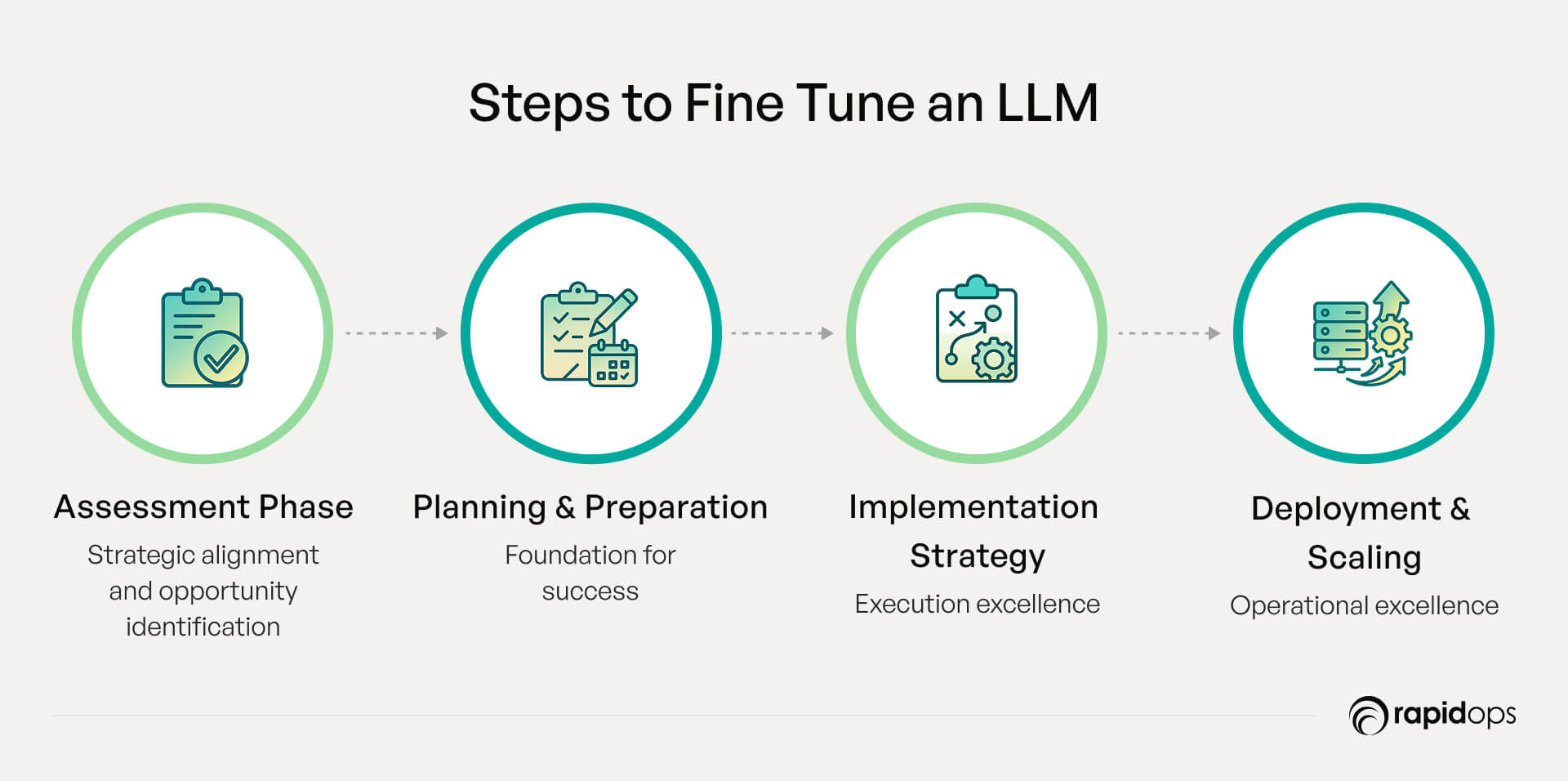

Assessment Phase: Strategic Alignment and Opportunity Identification

Start by identifying use cases where the fine tuning process creates measurable business advantages. Customer service operations with complex inquiries and document processing workflows requiring domain expertise typically provide strong ROI. Evaluate current operational bottlenecks where expert knowledge creates competitive advantages.

Conduct honest data audits to determine training data availability and quality. The fine tuning process requires substantial, high-quality examples that represent desired outcomes. Many organizations discover their data needs significant cleaning before supporting effective LLM fine tuning efforts.
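A first-pass data audit can be automated. The sketch below counts missing fields, exact duplicates, and responses outside a length range. The `prompt`/`response` field names and the length thresholds are assumptions to adapt, and automated checks complement rather than replace review by subject matter experts.

```python
from collections import Counter


def audit_examples(examples: list, min_chars: int = 20, max_chars: int = 8000) -> Counter:
    """Count common data problems; each example lands in exactly one bucket besides 'total'."""
    report = Counter()
    seen = set()
    for ex in examples:
        prompt = (ex.get("prompt") or "").strip()
        response = (ex.get("response") or "").strip()
        if not prompt or not response:
            report["missing_field"] += 1
            continue
        key = (prompt.lower(), response.lower())   # case-insensitive exact-duplicate check
        if key in seen:
            report["duplicate"] += 1
            continue
        seen.add(key)
        if len(response) < min_chars:
            report["too_short"] += 1
        elif len(response) > max_chars:
            report["too_long"] += 1
        else:
            report["usable"] += 1
    report["total"] = len(examples)
    return report


if __name__ == "__main__":
    sample = [
        {"prompt": "How do I reset my password?",
         "response": "Open Settings, choose Security, then select Reset Password."},
        {"prompt": "How do I reset my password?",
         "response": "Open Settings, choose Security, then select Reset Password."},
        {"prompt": "Refund status?", "response": ""},
        {"prompt": "Opening hours?", "response": "9 to 5"},
    ]
    print(dict(audit_examples(sample)))
```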

Consider partnering with specialized fine tuning companies to provide expertise, accelerate timelines, and reduce internal resource requirements while improving the odds of a successful outcome. These partnerships can help organizations apply transfer learning techniques effectively and optimize performance from the outset.

Planning and Preparation: Foundation for Success

Establish clear objectives and success metrics that align with business goals. Define specific performance targets rather than vague improvement aspirations. Data preparation typically requires the most time and resources, often involving subject matter experts to review examples and ensure consistency.

Choose between cloud-based solutions offering scalability and on-premises deployments providing control. Infrastructure decisions impact long-term costs and operational flexibility. Start with pilot programs to minimize risk while building organizational expertise and demonstrating value.

The planning phase should also consider how retrieval augmented generation capabilities might complement fine-tuned models, creating hybrid solutions that maximize both specialized knowledge and information access.

Implementation Strategy: Execution Excellence

Coordinate technical processes with business requirements and change management. Ensure technical teams understand business success criteria through regular progress reviews. Test models across representative scenarios, edge cases, and real-world conditions to validate the model's performance before production deployment.
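Testing across representative scenarios and edge cases can be wired into a simple release gate: the model must reach a pass-rate threshold in every category before it ships. In the sketch below, the categories, thresholds, the substring-based pass check, and the stub model are all hypothetical; real evaluations usually rely on richer scoring, such as rubric-based human or automated review.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    category: str       # e.g. "representative" or "edge_case"
    prompt: str
    must_contain: str   # deliberately crude pass criterion for illustration


def release_gate(cases: list, generate: Callable[[str], str], thresholds: dict) -> tuple:
    """Return (passed, pass rate per category). Categories with no cases count as 0%."""
    totals: dict = {}
    passes: dict = {}
    for case in cases:
        totals[case.category] = totals.get(case.category, 0) + 1
        if case.must_contain.lower() in generate(case.prompt).lower():
            passes[case.category] = passes.get(case.category, 0) + 1
    rates = {c: passes.get(c, 0) / n for c, n in totals.items()}
    passed = all(rates.get(c, 0.0) >= t for c, t in thresholds.items())
    return passed, rates


def stub_model(prompt: str) -> str:
    """Stand-in for a call to the fine-tuned model."""
    if "password" in prompt.lower():
        return "Go to Settings > Security."
    return "I'm not sure; let me connect you with an agent."


if __name__ == "__main__":
    cases = [
        EvalCase("representative", "How do I reset my password?", "settings"),
        EvalCase("representative", "Forgot my password", "settings"),
        EvalCase("edge_case", "asdf???", "agent"),
    ]
    print(release_gate(cases, stub_model, {"representative": 0.95, "edge_case": 0.9}))
```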

Plan workflow integration alongside model development to ensure smooth transitions from development to production environments. User training and change management processes require careful attention to maximize adoption and minimize disruption.

The implementation should apply transfer learning where appropriate, building upon existing pre-trained model capabilities while adding organization-specific knowledge and behaviors.

Deployment and Scaling: Operational Excellence

Begin with controlled rollouts to limited user groups, so issues can be identified and performance optimized before broader deployment. Monitor model accuracy, response quality, user satisfaction, and business impact metrics continuously during the initial phases.
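A controlled rollout is often implemented with deterministic hashing, so each user consistently sees either the current system or the fine-tuned model, and raising the percentage only adds users. A minimal sketch; the salt string and the 10,000-bucket granularity are arbitrary assumptions, and changing the salt reshuffles which users are included.

```python
import hashlib


def in_rollout(user_id: str, percent: float, salt: str = "support-model-v2") -> bool:
    """True if this user falls in the first `percent`% of 10,000 hash buckets."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000   # stable bucket in [0, 9999] for this user
    return bucket < percent * 100


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    share = sum(in_rollout(u, 5) for u in users) / len(users)
    print(f"{share:.1%} of users see the new model")
```

Because a user's bucket never changes, anyone included at 5% is still included at 20%, which keeps the experience stable as the rollout widens. The example prints a share close to 5%.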

Establish processes for continuous improvement, model maintenance, and adaptation to changing business requirements. Regular retraining schedules and performance reviews ensure fine-tuned models continue delivering value as business needs evolve.
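Continuous monitoring can feed the retraining schedule. The sketch below keeps a rolling window of reviewed outcomes (for example, whether a human reviewer accepted a response) and raises a flag when the acceptance rate falls below a floor. The window size, floor, and minimum sample count are hypothetical values to tune.

```python
from collections import deque
from typing import Optional


class QualityMonitor:
    """Rolling acceptance rate over recently reviewed responses."""

    def __init__(self, window: int = 200, floor: float = 0.9, min_samples: int = 50):
        self.results = deque(maxlen=window)   # oldest results drop off automatically
        self.floor = floor
        self.min_samples = min_samples

    def record(self, accepted: bool) -> None:
        self.results.append(accepted)

    def acceptance_rate(self) -> Optional[float]:
        return sum(self.results) / len(self.results) if self.results else None

    def needs_attention(self) -> bool:
        """Flag for review or retraining once enough samples show quality below the floor."""
        rate = self.acceptance_rate()
        return len(self.results) >= self.min_samples and rate is not None and rate < self.floor


if __name__ == "__main__":
    monitor = QualityMonitor(window=20, floor=0.9, min_samples=10)
    for _ in range(15):
        monitor.record(True)
    for _ in range(3):
        monitor.record(False)
    print(round(monitor.acceptance_rate(), 3), monitor.needs_attention())  # 0.833 True
```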

Consider implementing retrieval augmented generation capabilities alongside fine-tuned models to provide comprehensive solutions that combine specialized knowledge with access to current information sources.

Real-World Enterprise Applications

Customer Service: Strategic Differentiation Through AI Excellence

Fine-tuned customer service models handle complex inquiries that traditionally required experienced representatives. Models trained on successful resolution patterns understand not just product information but communication strategies that drive customer satisfaction. They adapt communication styles based on customer sentiment while providing solutions that address underlying needs.

The scalability advantage enables consistently high-quality service during peak demand without proportional staffing increases. Integration with CRM systems lets models draw on complete customer histories to personalize interactions, strengthening relationships and driving loyalty.

These applications often benefit from hybrid approaches combining fine-tuned models with retrieval augmented generation systems, enabling both specialized knowledge application and access to current product information and policies.

Document Processing: Operational Excellence Through Intelligence

Fine-tuned models can process industry-specific documents quickly and consistently, and on well-defined tasks their accuracy can approach that of manual review. Contract analysis applications identify key terms, risks, and compliance requirements across large volumes. Legal teams can flag issues and receive analysis that supports decision-making while reducing review time.

Compliance monitoring becomes proactive when models understand regulatory requirements and identify potential issues before violations occur. The intelligence extracted creates valuable business insights through trend analysis and performance metrics that support strategic planning.

These domain-specific tasks benefit significantly from models trained on organization-specific document formats and terminology, which typically outperform generic solutions on them.

Sales and Marketing: Revenue Growth Through Personalization

Sales applications enable personalization at scale while maintaining brand consistency. Models trained on successful interactions and conversion patterns generate communications that resonate with specific customer segments and drive measurable outcomes.

Lead qualification becomes more sophisticated when models understand characteristics predicting successful conversions. Marketing teams can produce targeted materials that maintain brand consistency while adapting to different audiences and campaign objectives, with direct revenue impact tracking through conversion funnels.

The fine tuning process enables these models to understand nuanced communication patterns and customer preferences that drive superior performance in sales and marketing applications.

Operations: Efficiency and Intelligence Integration

Operational models create efficiency gains while enabling intelligent decision-making across business processes. Process automation extends beyond task completion to include adaptive decision-making based on changing conditions.

Manufacturing operations optimize production schedules considering demand forecasts and supply constraints. Quality control processes identify issues earlier, reducing waste and improving customer satisfaction. Predictive capabilities enable proactive maintenance and resource planning based on operational data patterns.

These applications demonstrate how the fine tuning process can create specialized capabilities for domain-specific tasks while maintaining the flexibility to adapt to changing operational requirements.

HR and Talent: Strategic Workforce Development

HR applications balance automation with human judgment to improve efficiency while maintaining a personal touch. Resume screening becomes more consistent when models understand qualifications and characteristics predicting role success, which can reduce bias and improve candidate experience, provided the training data is audited so the model does not learn biases embedded in past hiring decisions.

Employee communication and policy guidance benefit from models that understand organizational culture and communication preferences. Performance management can be supported by models that understand career progression patterns and development requirements, enabling personalized guidance aligned with business objectives.

The language understanding capabilities developed through fine tuning enable these models to navigate complex HR scenarios while maintaining appropriate sensitivity and compliance with employment regulations.

Advanced Techniques and Emerging Trends

Multi-Modal Fine Tuning: Expanding Capabilities

The evolution of large language models increasingly includes multi-modal capabilities, enabling the fine tuning process to incorporate visual, audio, and text data simultaneously. This advancement opens new possibilities for domain-specific tasks that require understanding multiple data types.

Organizations can now fine-tune models that understand both textual instructions and visual context, enabling applications in quality control, training, and customer service that were previously impossible with text-only models.

Federated Learning Integration

Federated learning approaches enable organizations to participate in collaborative model training while maintaining data privacy. This distributed training approach allows multiple organizations to contribute to model development without sharing their raw training data.

The approach is particularly valuable for industry consortiums and organizations with strict data governance requirements, enabling collaborative development of specialized models for domain-specific tasks.
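The aggregation step at the heart of federated averaging (FedAvg) is short: each participant trains locally and shares only model parameters or parameter updates, and a coordinator averages them weighted by how many examples each participant used. The sketch below shows that step on plain lists of floats with hypothetical participant sizes; real deployments typically add secure communication and secure aggregation on top.

```python
def federated_average(client_updates: list) -> list:
    """FedAvg-style aggregation of (num_examples, parameters) pairs, weighted by example count."""
    if not client_updates:
        raise ValueError("no participant updates received")
    total = sum(n for n, _ in client_updates)
    if total <= 0:
        raise ValueError("participants reported no training examples")
    size = len(client_updates[0][1])
    averaged = [0.0] * size
    for n, params in client_updates:
        if len(params) != size:
            raise ValueError("all parameter vectors must have the same length")
        for i, p in enumerate(params):
            averaged[i] += p * n / total   # larger local datasets get proportionally more weight
    return averaged


if __name__ == "__main__":
    # Hypothetical: two organizations with 100 and 300 local examples.
    print(federated_average([(100, [1.0, 2.0]), (300, [3.0, 6.0])]))  # [2.5, 5.0]
```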

Real-Time Adaptation

Emerging techniques enable models to adapt their performance based on real-time feedback and changing conditions. This capability represents a significant advancement in performance optimization, allowing systems to improve continuously based on user interactions and outcomes.

These adaptive capabilities enhance the value of the fine tuning process by enabling models to maintain optimal performance as business conditions and requirements evolve.

Future Outlook and Strategic Recommendations

The LLM fine tuning landscape continues to evolve rapidly, with several trends shaping the strategic considerations for enterprise leaders. Understanding these developments enables organizations to make investments that will remain valuable as the technology matures and capabilities expand.

Multi-modal fine tuning represents a significant expansion of AI capabilities beyond text-based applications. Organizations are increasingly able to fine tune models that understand images, documents, audio, and video content specific to their business contexts. This expansion enables applications in quality control, training, customer service, and business intelligence that integrate multiple data types for more comprehensive analysis and decision-making.

The democratization of the fine tuning process continues as computational requirements decrease and tools become more accessible. Parameter-efficient methods and improved training techniques are making LLM fine tuning accessible to organizations without massive technical infrastructure investments. This trend will accelerate adoption while increasing competitive pressure as more organizations develop custom AI capabilities.

Integration sophistication is advancing rapidly, with fine-tuned models becoming more seamlessly integrated with existing business systems and workflows. The distinction between custom AI capabilities and standard business applications will continue to blur, making AI augmentation a natural extension of business operations rather than a separate technical implementation.

The convergence of retrieval augmented generation with fine-tuned models will create more powerful hybrid systems that combine specialized knowledge with real-time information access. This integration will enable organizations to maintain current information while preserving the specialized language understanding capabilities that make fine-tuned models valuable.

Regulatory frameworks around AI are evolving, with implications for fine tuning practices, data governance, and compliance requirements. Organizations must build fine tuning capabilities that can adapt to changing regulatory environments while maintaining business value and competitive advantages.

Strategic recommendations for enterprise leaders emphasize the importance of beginning fine tuning exploration now while maintaining focus on clear business value. The organizations that develop LLM fine tuning capabilities today will have significant advantages as the technology becomes more mainstream and competitive pressures increase.

Implementation Challenges You Need to Know About

Data Quality and Availability Constraints

Training data challenges represent the most significant barrier to fine tuning implementation. Organizations often underestimate the requirements for high-quality, representative training data, and dataset compilation requires substantial time and expertise.

Training examples from multiple sources create consistency issues due to varying quality standards and formatting conventions. Standardizing training data while maintaining value requires careful curation and extensive manual review.
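Standardizing data from different systems usually starts with mapping each source's fields onto one schema and normalizing whitespace. A minimal sketch, assuming three hypothetical sources with different field names; deeper consistency problems such as tone, accuracy, or outdated answers still need manual review.

```python
import re

# Hypothetical field names used by three internal data sources.
FIELD_MAPS = {
    "crm": ("question", "answer"),
    "helpdesk": ("customer_message", "agent_reply"),
    "faq": ("q", "a"),
}


def clean(text) -> str:
    """Collapse runs of whitespace (spaces, tabs, newlines) and trim the ends; None becomes ""."""
    return re.sub(r"\s+", " ", text or "").strip()


def normalize(record: dict, source: str) -> dict:
    """Map a source-specific record onto one shared prompt/response schema."""
    prompt_key, response_key = FIELD_MAPS[source]
    return {
        "prompt": clean(record.get(prompt_key)),
        "response": clean(record.get(response_key)),
        "source": source,  # kept so quality issues can be traced back to their origin
    }


if __name__ == "__main__":
    raw = {"customer_message": "  Where is\n my order? ", "agent_reply": "It ships\tMonday."}
    print(normalize(raw, "helpdesk"))
```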

Privacy and security add complexity when datasets contain sensitive business information, customer data, or proprietary knowledge, requiring compliance with data protection regulations.
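Before sensitive records are used for training, obvious identifiers can be masked. The sketch below replaces email addresses and phone-number-like digit runs with placeholders. The patterns are deliberately simple assumptions: they will miss many kinds of personal data (names, addresses, account numbers) and may also mask harmless long numbers such as dates, so they are a starting point rather than a compliance control.

```python
import re

# Simple illustrative patterns; they miss many PII types and may over-match long numbers.
PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace each pattern match with a bracketed placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or +1 (555) 123-4567."))
    # Contact [EMAIL] or [PHONE].
```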

Many organizations discover they lack sufficient data volume for an effective fine tuning process, particularly in specialized domains, and must either collect additional data or scale back their performance objectives.

Technical Infrastructure and Expertise Requirements

The fine tuning process requires technical expertise that many organizations lack internally. Model selection, training configuration, performance evaluation, and deployment all require specialized knowledge often absent from current IT teams.

Infrastructure decisions must balance the model's performance, cost, and security. Cloud solutions offer scalability but create ongoing costs and vendor dependencies, while on-premises deployments require significant technical infrastructure and expertise.

Managing multiple models creates version control complexity. Tracking each model's performance, managing updates, and ensuring consistent deployment require systematic approaches that many organizations lack.

Integration challenges arise when fine-tuned models must work within existing architectures. API compatibility and performance characteristics may not align with current systems, requiring additional development.

Organizational Change and Adoption Barriers

Change management requirements often exceed expectations when AI alters established workflows. Employees may resist changes, especially when perceiving AI as threatening job security or professional expertise.

Training requirements extend beyond technical skills to new work approaches and decision-making. Organizations must invest in comprehensive programs that help employees leverage AI effectively while maintaining quality standards.

Traditional metrics may not capture AI-augmented process value. Organizations struggle to establish appropriate success metrics and baseline measurements reflecting LLM fine tuning investment impact.

Cultural resistance emerges when AI conflicts with organizational values or power structures. Overcoming this requires careful communication, demonstrated value, and gradual implementation, building confidence over time.

Regulatory and Compliance Complexity

Regulatory requirements vary significantly across industries. Healthcare organizations handling US patient data must ensure HIPAA compliance, financial services firms must meet regulatory reporting requirements, and every regulated business must ensure that AI doesn't introduce new compliance risks.

Evolving AI regulations create uncertainty for long-term planning. Compliance requirements are likely to become more stringent, so fine tuning capabilities must be able to adapt without compromising business value.

Third-party services complicate vendor relationships and data governance. Understanding each provider's data handling practices, security measures, and compliance capabilities becomes crucial for regulatory compliance and risk management.

AI systems may require documentation and audit processes that exceed traditional software obligations. Organizations must establish processes for documenting training data, model decisions, and model performance outcomes in ways that satisfy regulatory requirements.
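A simple building block for that documentation is a manifest that fingerprints the exact training data behind each model version, so an auditor can later confirm which data produced which model. The sketch below is an assumption-laden illustration: the manifest fields, sample record, and names such as `contracts-export` are hypothetical and do not follow any specific regulatory standard.

```python
# Minimal sketch: fingerprint the training data used for a fine-tuning run and record
# it in a manifest. Field names and sample values are hypothetical.
import hashlib
import json
from datetime import datetime, timezone


def dataset_fingerprint(records: list[dict]) -> str:
    """SHA-256 over a canonical JSON serialization of each record, in order."""
    digest = hashlib.sha256()
    for record in records:
        # sort_keys makes the hash independent of dictionary key order
        digest.update(json.dumps(record, sort_keys=True, ensure_ascii=False).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def build_manifest(records: list[dict], model_version: str, base_model: str, data_source: str) -> dict:
    return {
        "model_version": model_version,
        "base_model": base_model,
        "data_source": data_source,
        "record_count": len(records),
        "dataset_sha256": dataset_fingerprint(records),
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    records = [{"prompt": "Summarize clause 4.2.", "response": "The supplier bears shipping risk."}]
    manifest = build_manifest(records, model_version="v1", base_model="base-model-x", data_source="contracts-export")
    print(json.dumps(manifest, indent=2))
```

Because the hash covers record order as well as content, any change to the dataset, including reordering, produces a different fingerprint.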

Conclusion and Next Steps

Success requires treating the fine tuning process as a long-term capability investment rather than a short-term technology implementation. Organizations must focus on training data quality, organizational change management, and continuous improvement processes that enable sustained value creation.

The competitive landscape favors early adopters who are building custom AI capabilities that competitors cannot easily replicate. Organizations that delay exploration risk falling behind competitors developing proprietary AI advantages that drive operational efficiency and market differentiation.

Implementation success depends on executive sponsorship, strategic investment, and organizational commitment to the changes required for maximizing AI value. The technology is mature, tools are accessible, and competitive advantages are measurable. The question is whether organizations will lead or follow in developing these transformative capabilities.

As large language models continue to evolve and fine tuning methods become more sophisticated, organizations that establish strong foundations now will be best positioned to adopt advanced capabilities such as integration with retrieval augmented generation (RAG) and new transfer learning techniques.

Curious how a fine-tuned LLM can best serve your business? As one of the leading LLM fine tuning companies, we help enterprises transform generic large language models into powerful, customized business capabilities. Talk to our LLM fine tuning experts to identify the most valuable use cases for fine-tuned models in your business and to assess whether your training data is ready for fine tuning. Book your free consultation call today.

Got questions? We’ve got answers!

What is fine tuning an LLM?

Fine tuning an LLM is the process of taking a pre-trained language model and training it further on specific data to make it better at particular tasks. Instead of building an AI model from scratch, you customize an existing one to understand your business, industry, or specific use cases better.
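To make the idea concrete, here is a toy Python sketch. A one-variable linear model stands in for an LLM and all data is synthetic, so it illustrates only the principle (continue training from weights already learned on general data instead of starting from zero), not how LLM fine tuning is implemented in practice.

```python
# Toy illustration of the principle behind fine tuning. A linear model y = w*x + b
# stands in for an LLM; all data is synthetic.


def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(w: float, b: float, data: list[tuple[float, float]], lr: float, steps: int) -> tuple[float, float]:
    """Plain gradient descent on mean squared error, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


# "Pre-training": plenty of general data following y = 2x + 1.
general = [(float(x), 2.0 * x + 1.0) for x in range(10)]
pre_w, pre_b = train(0.0, 0.0, general, lr=0.01, steps=2000)

# A small domain dataset whose pattern differs slightly: y = 2x + 1.5.
domain = [(float(x), 2.0 * x + 1.5) for x in range(4)]

# Fine tuning: start from the pre-trained weights and take a few extra steps.
ft_w, ft_b = train(pre_w, pre_b, domain, lr=0.05, steps=5)
# Baseline: the same small training budget, but starting from scratch.
sc_w, sc_b = train(0.0, 0.0, domain, lr=0.05, steps=5)

print(f"pre-trained loss on domain data: {mse(pre_w, pre_b, domain):.4f}")
print(f"fine-tuned loss:                 {mse(ft_w, ft_b, domain):.4f}")
print(f"from-scratch loss (same steps):  {mse(sc_w, sc_b, domain):.4f}")
```

With the same five training steps, the fine-tuned model ends up closer to the domain pattern than the model trained from scratch, because it starts from weights that already capture most of the relationship.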

What is the best fine-tuning tool for LLMs?

Popular fine-tuning tools include Hugging Face Transformers, OpenAI's fine-tuning API, and experiment-tracking platforms like Weights & Biases. However, the right tool alone is not enough: you need a technology expert who knows how to configure these tools properly, select the right training data, and optimize the model for your exact business needs. The tool is just the instrument; expertise makes it work effectively.
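Much of that expertise goes into preparing training data in the format a tool expects. As one example, OpenAI's fine-tuning guide describes chat-model training data as JSONL, one JSON object per line, each holding a `messages` list of system, user, and assistant turns. The sketch below converts question/answer pairs into that layout and runs a few sanity checks. The system prompt, file names, and sample data are hypothetical; the check that each example ends with an assistant reply is our own convention rather than a documented API rule; and the upload and job-creation steps are left out.

```python
# Minimal sketch: write question/answer pairs as chat-formatted JSONL and sanity-check
# the file. SYSTEM_PROMPT, file names, and sample data are hypothetical.
import json
from pathlib import Path

SYSTEM_PROMPT = "You are Acme Corp's support assistant. Answer concisely."  # hypothetical


def to_chat_record(question: str, answer: str) -> dict:
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},  # the reply the model should learn
        ]
    }


def write_jsonl(pairs: list[tuple[str, str]], path: Path) -> int:
    """Write one JSON record per line; return the number of records written."""
    with path.open("w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_chat_record(question, answer), ensure_ascii=False) + "\n")
    return len(pairs)


def validate_jsonl(path: Path) -> list[str]:
    """Return human-readable problems; an empty list means every line passed our checks."""
    problems = []
    with path.open(encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                continue  # ignore blank lines
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
                continue
            messages = record.get("messages") if isinstance(record, dict) else None
            if not isinstance(messages, list) or not messages:
                problems.append(f"line {lineno}: missing 'messages' list")
                continue
            last = messages[-1]
            if not isinstance(last, dict) or last.get("role") != "assistant":
                problems.append(f"line {lineno}: example does not end with an assistant reply")
    return problems


if __name__ == "__main__":
    out = Path("train.jsonl")
    count = write_jsonl([("How do I reset my password?", "Use the 'Forgot password' link on the sign-in page.")], out)
    print(count, validate_jsonl(out))
```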

What is LLM memory tuning?

LLM memory tuning is not a standardized term, and vendors use it in different ways. As used here, it refers to optimizing how a language model stores and retrieves information during conversations or tasks. This involves improving the model's ability to remember context from earlier parts of a conversation, maintain consistency across long documents, and efficiently manage computational resources when processing large amounts of text.

Is fine-tuning LLMs expensive?

The cost depends on your specific requirements and use cases: the size of the base model, the volume of training data, the number of training runs, the fine tuning method (full fine tuning versus parameter-efficient approaches such as LoRA), and where the model is hosted. Simple fine-tuning projects can be quite affordable, while complex enterprise implementations require larger investments. Large consulting firms, however, often charge premium rates even for straightforward use cases. A domain expert who specializes in LLM fine-tuning will typically deliver better results at lower cost because they focus exclusively on this technology.
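For hosted fine-tuning services that bill per training token, a back-of-envelope estimate of the training compute is simple arithmetic: tokens in the training file multiplied by the number of epochs multiplied by the price per token. The sketch below assumes that billing model; the price is a placeholder rather than any vendor's actual rate, and data preparation, evaluation, hosting, and expert time are not included.

```python
# Back-of-envelope training cost, assuming per-token billing
# (tokens in the training file x epochs x price). The price below is a placeholder.


def estimate_training_cost(
    num_examples: int,
    avg_tokens_per_example: int,
    epochs: int,
    usd_per_million_tokens: float,
) -> float:
    if min(num_examples, avg_tokens_per_example, epochs) < 0 or usd_per_million_tokens < 0:
        raise ValueError("inputs must be non-negative")
    trained_tokens = num_examples * avg_tokens_per_example * epochs
    return trained_tokens / 1_000_000 * usd_per_million_tokens


if __name__ == "__main__":
    # 2,000 examples x 500 tokens x 3 epochs = 3,000,000 trained tokens
    cost = estimate_training_cost(2_000, 500, 3, usd_per_million_tokens=10.0)  # hypothetical price
    print(f"${cost:,.2f}")  # $30.00
```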

What are some of the applications of LLMs?

Common LLM applications include:

- Customer service: Automated support and chatbots

- Content creation: Writing, editing, and marketing materials

- Code generation: Software development assistance

- Document analysis: Processing contracts, reports, and legal documents

- Language translation: Multi-language communication

- Data analysis: Extracting insights from text data

- Personal assistants: Scheduling, email management, and task automation

- Education: Tutoring, lesson planning, and personalized learning

