AI agents have the potential to reduce operational costs and improve efficiency. Businesses that have already implemented them report streamlined operations, faster decision-making, and measurable improvements in productivity. If your business is also considering AI agents, that makes sense given the impact they are already having.

Since you are looking into AI agent frameworks, you probably know that these frameworks lay the foundation for AI agents. They make autonomy achievable by defining how an agent functions, its capabilities, and how well it understands your business processes. Simply put, the framework you choose will determine how effectively your AI agents perform and the value they bring to your operations.

That’s exactly why this article covers the latest AI agent frameworks on the market. We’ll explore how their capabilities have evolved, how they differ from one another, and how selecting the right framework can help your business achieve higher efficiency, smarter operations, and a competitive edge in today’s AI-driven landscape.

Understanding AI agent frameworks and their impact

To maximize the benefits of AI agents, it’s crucial to understand the frameworks that support them. These frameworks define how agents operate, make decisions, and interact with your employees. They are the building blocks for developing intelligent systems, enabling organizations to create, manage, and deploy advanced, scalable solutions.

Knowing their core functions helps you see how the right framework can drive smarter operations, faster decisions, and tangible business impact. Agent development relies on the specialized tools and libraries within these frameworks, which streamline the creation of sophisticated agents and help the resulting AI systems stay scalable and efficient.

What are AI agent frameworks

AI agent frameworks are structured platforms designed to build, deploy, and manage autonomous agents. Unlike traditional automation tools that follow static scripts, these frameworks give agents the ability to perceive context, reason through choices, act, and continuously learn from outcomes. This makes them far more adaptive than conventional bots or workflow engines.
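To make the perceive, reason, act, and learn cycle concrete, here is a minimal sketch in plain Python. It does not use any of the frameworks covered in this article; the `ReorderAgent` class, the `stock` and `demand` fields, and the threshold rule are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ReorderAgent:
    """Toy agent (hypothetical) that reorders stock below a threshold it adjusts over time."""
    threshold: int = 10

    def perceive(self, environment: dict) -> int:
        # Perceive: read the relevant signal from the environment.
        return environment["stock"]

    def reason(self, stock: int) -> str:
        # Reason: choose an action from the current context.
        return "reorder" if stock < self.threshold else "wait"

    def act(self, action: str, environment: dict) -> None:
        # Act: change the environment.
        if action == "reorder":
            environment["stock"] += 20

    def learn(self, stockout: bool) -> None:
        # Learn: a stockout means the reorder came too late, so reorder earlier next time.
        if stockout:
            self.threshold += 5

    def step(self, environment: dict) -> str:
        stock = self.perceive(environment)
        action = self.reason(stock)
        self.act(action, environment)
        # The environment moves on: demand is served from stock.
        environment["stock"] -= environment["demand"]
        self.learn(stockout=environment["stock"] < 0)
        return action


env = {"stock": 11, "demand": 15}
agent = ReorderAgent()
actions = [agent.step(env) for _ in range(2)]
```

In the first step the agent waits, runs out of stock, and raises its threshold; in the second step it reorders. A static script would repeat the same decision regardless of the outcome.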

At their core, AI agent frameworks create a foundation for dynamic collaboration between multiple agents and humans, aligning activities with broader enterprise objectives. Whether coordinating supply chains, supporting customer interactions, or orchestrating knowledge across departments, these frameworks ensure that agents operate not in isolation but as part of an integrated ecosystem. The rise of agentic AI and the availability of open source frameworks have made it easier to build, customize, and deploy multi-agent systems for a wide range of business and technical applications.

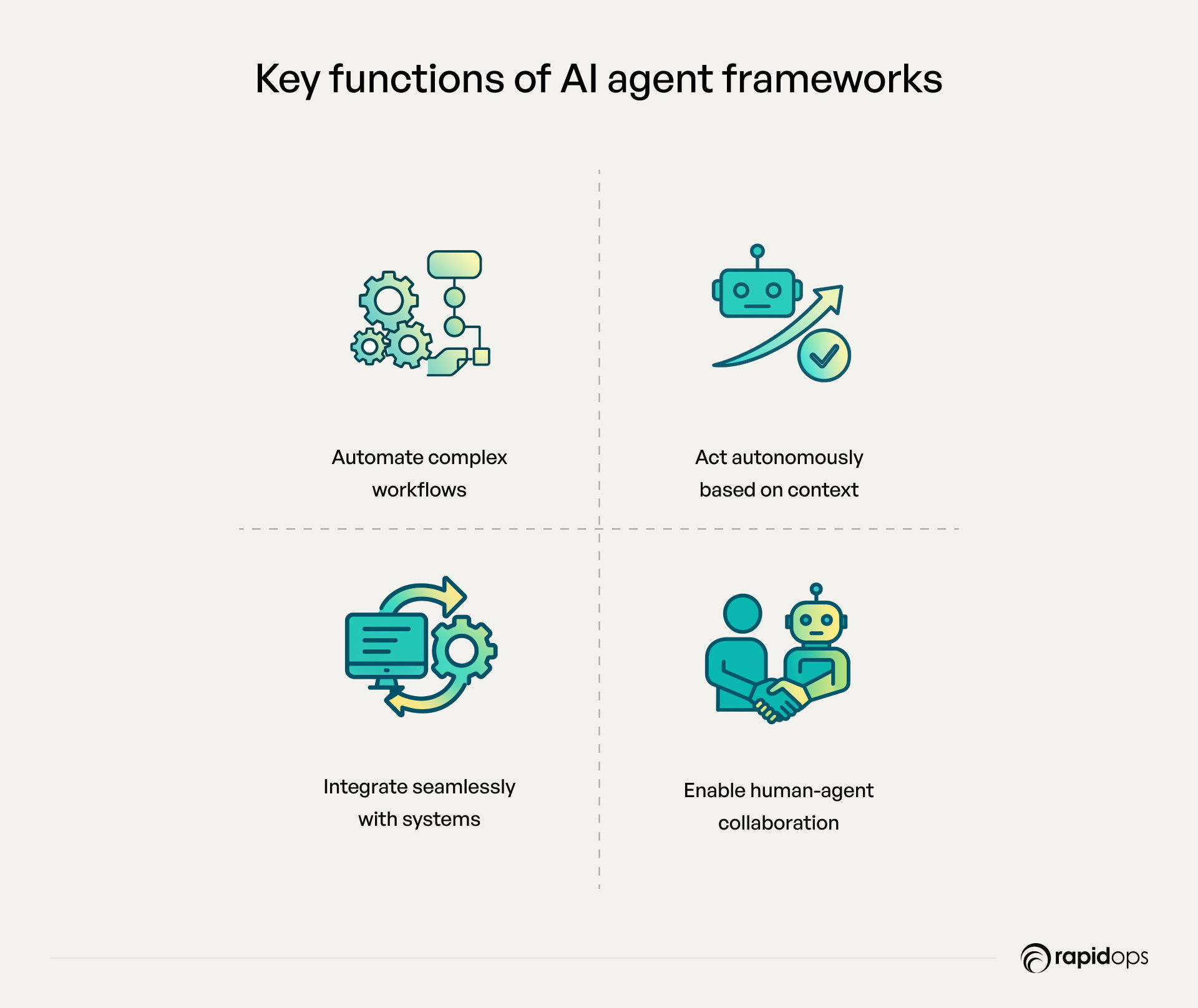

Core functions of AI agent frameworks

The value of agent frameworks lies in their versatility. They deliver a set of essential functions that enable enterprises to move from task-level automation to system-wide intelligence.

- Task automation: Automating not only routine but also complex workflows across departments.

- Decision-making: Equipping agents with the ability to act based on context, rules, and learned patterns.

- Integration: Seamlessly connecting with APIs, enterprise systems, and third-party platforms to ensure information flow is uninterrupted.

- Collaboration: Supporting interaction and coordination between agents and human teams for higher efficiency and trust.

- Workflow management: Organizing and automating agent actions and specific tasks within multi-step processes, enabling a structured task flow and orchestration across complex workflows.

Together, these functions provide enterprises with a unified environment to develop agents that are not just reactive but also adaptive and proactive. Robust error handling is also critical, ensuring reliable agent operations by allowing systems to recover from failures or terminate gracefully.
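One common way to get this kind of error handling is to retry a failing step a few times and, if it still fails, stop the workflow with a clear status instead of crashing. The sketch below assumes that approach; `run_with_retries`, `run_workflow`, and `StepFailed` are hypothetical helpers, not part of any framework discussed here.

```python
import time


class StepFailed(Exception):
    """Raised when a step still fails after all retries."""


def run_with_retries(step, max_attempts=3, base_delay=0.0):
    """Run step(); retry on failure with exponential backoff, then give up."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception as error:  # a real agent would catch narrower exception types
            last_error = error
            if attempt < max_attempts:
                time.sleep(base_delay * 2 ** (attempt - 1))
    raise StepFailed(f"gave up after {max_attempts} attempts") from last_error


def run_workflow(steps):
    """Run steps in order; terminate gracefully at the first step that cannot recover."""
    completed = []
    for name, step in steps:
        try:
            run_with_retries(step)
            completed.append(name)
        except StepFailed:
            return {"status": "terminated", "completed": completed, "failed": name}
    return {"status": "ok", "completed": completed, "failed": None}


calls = {"n": 0}


def flaky():
    # Fails twice, then succeeds: a recoverable failure.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary outage")
    return "done"


def broken():
    # Always fails: an unrecoverable failure.
    raise ValueError("bad input")


result = run_workflow([("fetch", flaky), ("parse", broken), ("report", lambda: "ok")])
```

Here the `fetch` step recovers on its third attempt, while `parse` never succeeds, so the workflow stops before `report` and says which step failed.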

Why they matter in 2025

As businesses in 2025 confront rising complexity, AI agent frameworks are becoming foundational to enterprise strategy. Their significance can be seen across several dimensions.

- Scalability: Allowing organizations to manage multi-agent workflows that span entire enterprises without loss of performance.

- Interoperability: Enabling smooth collaboration across diverse systems, models, and data sources.

- Observability: Providing visibility into agent behavior, making it possible to monitor, audit, and govern decisions transparently.

- Adaptability: Ensuring agents can respond to changing market conditions, customer needs, or operational disruptions in real time.

- Efficiency & consistency: Delivering repeatable, reliable outcomes that reduce variability and operational risk.

- Future readiness: Bridging the gap between legacy automation and the emerging world of interconnected, multi-agent systems.

In this way, AI agent frameworks are more than a technology trend; they represent the architecture on which the next generation of enterprise autonomy will be built. Some frameworks are well-suited for specific enterprise needs, while others may present a steeper learning curve for new users. As AI agents evolve, frameworks continue to adapt to new business and technical challenges.

How we evaluated these AI agent frameworks

Selecting the right AI agent framework for enterprise adoption requires a structured, multi-dimensional evaluation. Effective evaluation goes beyond surface-level features, examining both operational capabilities and strategic impact. To provide a clear perspective, we analyzed frameworks across six critical dimensions that are essential for enterprise decision-making, helping you identify which frameworks can deliver the most value, streamline operations, and support smarter, more autonomous business processes.
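If you want to run a similar comparison yourself, one simple option is a weighted average across the six dimensions. The sketch below shows that arithmetic; the weights and scores are hypothetical placeholders, not our findings, and `framework_a` does not refer to any real product.

```python
DIMENSIONS = [
    "scalability", "observability", "integration",
    "cost_efficiency", "community", "security",
]


def weighted_score(scores: dict, weights: dict) -> float:
    """Weighted average of per-dimension scores; weights need not sum to 1."""
    missing = [d for d in DIMENSIONS if d not in scores or d not in weights]
    if missing:
        raise ValueError(f"missing dimensions: {missing}")
    total_weight = sum(weights[d] for d in DIMENSIONS)
    return sum(scores[d] * weights[d] for d in DIMENSIONS) / total_weight


# Hypothetical weights and 1-5 scores, for illustration only.
weights = {"scalability": 2, "observability": 1, "integration": 2,
           "cost_efficiency": 1, "community": 1, "security": 3}
framework_a = {"scalability": 4, "observability": 3, "integration": 5,
               "cost_efficiency": 2, "community": 4, "security": 3}

score_a = weighted_score(framework_a, weights)  # (8 + 3 + 10 + 2 + 4 + 9) / 10
```

Adjusting the weights to your own priorities, such as weighting security more heavily in a regulated industry, changes the ranking without changing the underlying scores.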

1. Scalability & performance

Enterprises like yours operate at scale and are beginning to experiment with multi-agent workflows in pilot or proof-of-concept stages across distributed environments. Assessment focuses on whether a framework can efficiently manage these workloads, dynamically allocate resources, and maintain consistent performance under high-demand scenarios. Scalability ensures AI agents operate cohesively without bottlenecks, delays, or service degradation, even as your operations expand globally. This dimension reflects the framework’s ability to support growth, complexity, and evolving enterprise needs.

2. Observability & transparency

Comprehensive observability is key to operational confidence and accountability. Frameworks are analyzed for tracing, logging, monitoring, and reporting capabilities, allowing you to track agent activity, detect anomalies, and optimize workflows. Transparency also encompasses cost visibility, providing decision-makers with insight into the total cost of ownership and enabling efficient resource management. Observability ensures both operational reliability and informed executive oversight.
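As a rough illustration of what tracing and logging mean at the code level, the sketch below wraps an agent step in a decorator that records its duration and outcome. It uses only the Python standard library; the `traced` decorator, the in-memory `TRACE` list, and the `classify_ticket` step are hypothetical, and a production framework would export such records to a monitoring backend instead.

```python
import functools
import logging
import time

logger = logging.getLogger("agent.trace")
TRACE = []  # in-memory trace store; a real deployment would export these records


def traced(step_name):
    """Decorator that records the duration and outcome of an agent step."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            record = {"step": step_name, "status": "ok"}
            try:
                return func(*args, **kwargs)
            except Exception as error:
                record["status"] = "error"
                record["error"] = repr(error)
                raise
            finally:
                # Runs on success and on failure, so every call leaves a record.
                record["seconds"] = time.perf_counter() - start
                TRACE.append(record)
                logger.info("step=%s status=%s", step_name, record["status"])
        return wrapper
    return decorator


@traced("classify_ticket")
def classify_ticket(text):
    # Stand-in for a model call.
    return "billing" if "invoice" in text.lower() else "general"


label = classify_ticket("Question about my invoice")
```

Each record in `TRACE` can then feed anomaly detection or cost reporting, for example by summing `seconds` per step.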

3. Integration & ecosystem compatibility

AI agent frameworks do not operate in isolation. Assessment considers the framework’s ability to integrate seamlessly with APIs, vector databases, cloud platforms, and existing enterprise systems. While open source frameworks often provide flexibility and customization, proprietary software can offer enhanced security, compliance, and dedicated support, but may involve licensing costs and less customization. Effective integration ensures that deploying agents enhances your workflows rather than disrupting them, enabling faster adoption and delivering measurable return on investment. This dimension emphasizes practical alignment with enterprise technology landscapes.

4. Cost-efficiency

Cost is a strategic consideration alongside technical and operational capabilities. Evaluation includes the total cost of ownership, licensing, infrastructure, and maintenance. Cost-efficient frameworks deliver enterprise-grade functionality without excessive financial burden, ensuring that your investment in multi-agent systems translates into measurable business value. This dimension balances operational effectiveness with financial prudence.

5. Community maturity & support

Long-term viability depends on the strength and maturity of the supporting community. Frameworks are evaluated for open-source adoption, contributor activity, and enterprise partnerships. A robust community drives continuous innovation, provides dependable troubleshooting resources, and accelerates adoption of best practices, critical for enterprises like yours that rely on stable, forward-compatible solutions. This ensures frameworks remain relevant and sustainable over time.

6. Security & governance

Data protection, compliance, and controlled access are non-negotiable. Evaluation considers role-based access controls, robust data isolation, and regulatory compliance readiness. Security extends to how agents interact with sensitive systems and datasets, maintaining operational integrity while allowing AI agents to act autonomously where appropriate. This ensures you can trust the framework to operate safely within complex organizational environments. Incorporating human-in-the-loop processes is essential for compliance and oversight in AI agent frameworks.
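A minimal sketch of how role-based access control and a human-in-the-loop gate can combine is shown below. The roles, actions, and the `authorize` function are hypothetical examples, not the API of any framework in this article.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "support_agent": {"read_ticket", "draft_reply"},
    "finance_agent": {"read_ticket", "read_invoice", "issue_refund"},
}
# Actions that always require a named human approver, even for permitted roles.
NEEDS_HUMAN_APPROVAL = {"issue_refund"}


def authorize(role, action, approved_by=None):
    """Return 'allowed', 'denied', or 'pending_approval' for a requested action."""
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return "denied"
    if action in NEEDS_HUMAN_APPROVAL and approved_by is None:
        return "pending_approval"
    return "allowed"


decisions = [
    authorize("support_agent", "draft_reply"),
    authorize("support_agent", "issue_refund"),
    authorize("finance_agent", "issue_refund"),
    authorize("finance_agent", "issue_refund", approved_by="j.doe"),
]
```

The agent acts autonomously on low-risk actions, is blocked from actions outside its role, and pauses for sign-off on sensitive ones.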

Leading AI agent frameworks in 2025

1. AutoGen (Microsoft Research)

AutoGen, developed by Microsoft Research, is an advanced AI agent framework designed to facilitate collaborative multi-agent systems. Positioned at the intersection of research innovation and enterprise applicability, AutoGen is open-source and supports both experimental and operational use cases. Its design emphasizes flexibility, coordination, and adaptability, making it a strong contender for organizations exploring scalable AI agent orchestration in 2025. AutoGen also complements other Microsoft AI tools, such as Microsoft Semantic Kernel, an open-source AI framework for enterprise integration and orchestration within the Microsoft ecosystem.

Its key differentiator is a focus on multi-agent collaboration, enabling agents to dynamically communicate, plan, and execute tasks collectively.

Architecture

At its core, AutoGen is structured around autonomous agents, an orchestration layer, and memory/state management modules that ensure context preservation across interactions. AutoGen's approach to memory handling enables efficient information retention and retrieval, maintaining state across tasks and supporting robust multi-agent orchestration. The framework supports both single-agent workflows for simpler tasks and multi-agent distributed execution for complex operations, allowing enterprises to scale seamlessly. Its modular design allows integration of custom tools and plugins, providing flexibility to extend functionality without compromising stability or performance.
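The three parts named above (agents, an orchestration layer, and shared memory) can be pictured with a small plain-Python sketch. This is not AutoGen's API; the `Agent` and `Orchestrator` classes, the round-robin turn order, and the `DONE` stop marker are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    name: str
    respond: Callable[[list], str]  # receives the shared history, returns a reply


@dataclass
class Orchestrator:
    agents: list
    memory: list = field(default_factory=list)  # shared state preserved across turns

    def run(self, task: str, max_turns: int = 6) -> list:
        self.memory.append(("user", task))
        for turn in range(max_turns):
            agent = self.agents[turn % len(self.agents)]  # round-robin turn order
            reply = agent.respond(self.memory)
            self.memory.append((agent.name, reply))
            if reply.endswith("DONE"):  # hypothetical stop marker
                break
        return self.memory


def planner(history):
    return "plan: check stock, then draft reply"


def worker(history):
    # The worker can read the planner's message because memory is shared.
    plan = next(text for name, text in reversed(history) if name == "planner")
    return f"executed [{plan}] DONE"


transcript = Orchestrator([Agent("planner", planner), Agent("worker", worker)]).run(
    "Customer asks whether item 42 is available"
)
```

With a single agent in the list, the same loop covers the single-agent case; adding agents turns it into a multi-agent conversation without changing the orchestrator.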

Key features

AutoGen excels in agent collaboration and decision-making intelligence, supporting dynamic workflows where agents coordinate tasks and share knowledge in real time. It integrates seamlessly with large language models (LLMs), supports retrieval-augmented generation (RAG), and enables advanced memory and state management, critical for context-rich applications.

Developer utilities include debugging and simulation tools, while its modular architecture accommodates no-code or low-code interfaces, reducing time-to-deployment and easing adoption for enterprise teams. It also provides real-time tracing of agent workflows, highlighting task dependencies and execution paths. These features support advanced data processing across distributed workflows, enabling efficient management and execution of complex datasets for AI tasks. With built-in monitoring tools, enterprises can track resource allocation and workflow efficiency and integrate with existing dashboards for transparent operations and actionable insights.

Use case

In retail, AutoGen streamlines inventory management and automates customer support workflows, enabling faster, context-aware responses, while empowering support agents to intervene or collaborate as needed. By coordinating autonomous agents across operations, it reduces bottlenecks, improves efficiency, and enhances customer experience. Retailers gain agility, operational resilience, and the ability to adapt quickly to changing demand and market dynamics.

Pros/cons

Strengths:

- High scalability for multi-agent workflows

- Advanced agent collaboration and autonomous planning

- Modular design allowing seamless integration with enterprise tools

- Rich developer utilities supporting rapid deployment

Limitations:

- Complexity may require skilled engineering teams for optimal implementation

- Early-stage ecosystem with limited enterprise adoption compared to commercial alternatives

- Resource overhead for multi-agent distributed operations

2. CrewAI

CrewAI is a robust AI agent framework engineered for orchestrating collaborative agents in enterprise environments. Combining commercial readiness with operational flexibility, it supports complex multi-agent workflows while maintaining contextual awareness and real-time coordination. Key differentiators include dynamic agent collaboration, intelligent task delegation, and seamless integration with enterprise systems. Because it assigns specialized agents to role-based tasks, CrewAI is a strategic option for organizations aiming to scale AI operations efficiently in 2025.

Architecture

The framework is structured around autonomous agents, a centralized orchestration layer, and memory/state management modules that preserve context across tasks. The architecture directly shapes each agent's behavior in collaborative workflows, determining how agents execute tasks, interact with tools, and coordinate to achieve shared objectives. CrewAI supports both single-agent workflows for simpler operations and multi-agent distributed execution for large-scale, complex processes. Its modular design enables integration with APIs, enterprise databases, cloud services, and third-party tools, ensuring that enterprises can adopt AI capabilities without disrupting existing workflows or infrastructure.
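Role-based delegation can be sketched in a few lines of plain Python. The example below is not CrewAI's API; `RoleAgent`, `Task`, and `run_crew` are hypothetical names, and the `execute` method stands in for a model call.

```python
from dataclasses import dataclass


@dataclass
class RoleAgent:
    role: str

    def execute(self, description: str, context: list) -> str:
        # Stand-in for an LLM call; a real agent would reason over the context.
        return f"{self.role} handled '{description}' using {len(context)} prior result(s)"


@dataclass
class Task:
    description: str
    required_role: str


def run_crew(agents: list, tasks: list) -> list:
    """Delegate each task to the agent whose role matches, passing earlier outputs along."""
    by_role = {agent.role: agent for agent in agents}
    results = []
    for task in tasks:
        agent = by_role.get(task.required_role)
        if agent is None:
            raise LookupError(f"no agent with role {task.required_role!r}")
        results.append(agent.execute(task.description, context=list(results)))
    return results


results = run_crew(
    [RoleAgent("inspector"), RoleAgent("planner")],
    [Task("flag defects in batch 7", "inspector"), Task("reschedule line 2", "planner")],
)
```

Passing earlier results as context is what lets the second agent build on the first agent's output rather than starting from scratch.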

Key features

CrewAI enables agents to collaborate dynamically, sharing knowledge and making context-aware decisions in real time, enhancing operational intelligence across enterprise workflows. In CrewAI-powered environments, customer support agents can be integrated into automated decision-making workflows, allowing manual intervention and human approval and helping maintain high-quality customer service within multi-agent or AI-driven systems. It integrates seamlessly with LLMs, external tools, and retrieval-augmented generation (RAG), extending agent capabilities.

Its modular architecture supports distributed task management, while developer-focused utilities, debugging tools, testing frameworks, and optional no-code interfaces accelerate deployment, reduce friction, and deliver strategic insights alongside operational efficiency.

CrewAI also emphasizes strong observability and monitoring. Logs capture inter-agent communication and task handoffs, while metrics track workflow throughput, agent utilization, and error identification. This provides enterprises with transparency across distributed workflows, enabling optimization of team-level automation and maintaining operational control in dynamic environments.

Use case

CrewAI supports manufacturers in quality assurance, production planning, and supply chain management. Autonomous agents monitor production metrics, detect anomalies, and coordinate corrective actions in real time. Multiple AI agents collaborate to optimize manufacturing processes by communicating and adapting to dynamic workflows, allowing specialized agents to handle complex tasks together. They also streamline supplier interactions, inventory replenishment, and logistics scheduling. By enabling intelligent, coordinated agent workflows, CrewAI reduces operational bottlenecks, enhances product quality, and drives faster, more reliable manufacturing outcomes.

Pros/cons

Strengths:

- High efficiency in multi-agent task coordination and collaboration

- Flexible modular architecture for seamless enterprise integration

- Developer-friendly utilities with optional no-code deployment

- Scalable for complex and distributed workflows

Limitations:

- Initial setup for large-scale deployments may require skilled engineering resources

- Some advanced features may not match research-focused frameworks

- Resource overhead can increase with highly distributed workflows

3. LangChain

LangChain is a leading AI agent framework designed to streamline the development and orchestration of applications powered by large language models (LLMs). Widely adopted across both research and enterprise contexts, LangChain offers a modular, flexible, and scalable architecture that enables organizations to leverage LLMs effectively for complex workflows. It also plays a crucial role in building AI-powered applications for enterprise use, allowing businesses to automate tasks and enhance operational efficiency.

Its key differentiators in 2025 include robust integration capabilities, support for retrieval-augmented generation (RAG), and extensive developer tooling, making it an ideal choice for enterprises seeking to accelerate AI-driven automation and decision-making.

Architecture

LangChain’s architecture is built around a modular design consisting of agents, orchestration layers, memory/state management, and connector modules. LangChain provides built-in memory features that support both short-term and long-term context management: short-term memory is handled via in-memory buffers within a session, while long-term memory is enabled through integrations with vector stores or databases, using customizable memory classes for efficient context tracking. It supports both single-agent workflows for straightforward tasks and multi-agent orchestration for distributed, complex processes. The framework’s modularity allows seamless integration with enterprise databases, APIs, cloud services, and external tools, enabling adoption without disrupting existing enterprise systems or operations.
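The split between short-term and long-term memory can be illustrated without any framework. The sketch below is not LangChain's memory API: it uses a bounded buffer for recent turns and a toy vector store based on word counts, where a real system would use a learned embedding model and a vector database. `AgentMemory`, `embed`, and `cosine` are hypothetical names.

```python
import math
import re
from collections import Counter, deque


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class AgentMemory:
    def __init__(self, buffer_size: int = 3):
        self.short_term = deque(maxlen=buffer_size)  # recent turns within a session
        self.long_term = []                          # (vector, text) pairs kept for retrieval

    def remember(self, text: str) -> None:
        self.short_term.append(text)
        self.long_term.append((embed(text), text))

    def recall(self, query: str, k: int = 1) -> list:
        """Return the k stored texts most similar to the query."""
        query_vector = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(query_vector, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


memory = AgentMemory(buffer_size=2)
for turn in [
    "Order 17 ships from the Lyon warehouse",
    "The customer prefers email updates",
    "Invoice 9 was paid in March",
]:
    memory.remember(turn)

recent = list(memory.short_term)
recalled = memory.recall("Which warehouse ships order 17?")
```

The buffer has already dropped the first turn, yet the long-term store can still retrieve it when a later question is similar enough.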

Key features

LangChain enables LLM-powered agents to operate intelligently across workflows, coordinating actions and maintaining context through advanced memory management. It supports RAG for real-time information retrieval, integrates with external tools and APIs, and drives dynamic decision-making across distributed tasks. LangChain can also access and manage a knowledge base, allowing agents to leverage structured repositories of information for enhanced understanding and performance.

Enterprises also benefit from comprehensive observability and monitoring features, including step-by-step execution logs, API call tracking, and performance metrics such as latency and success rates. These insights can be integrated into existing monitoring systems, providing full workflow visibility, improving reliability, and ensuring accountability at scale.

Developer utilities, including testing frameworks, debugging tools, and optional no-code interfaces, further accelerate deployment and enhance reliability, enabling enterprises to deliver scalable, context-aware AI solutions that boost efficiency, automation, and informed decision-making.

Use case

In distribution, LangChain orchestrates inventory tracking, order fulfillment, and route optimization across warehouses and transportation networks. LLM-powered agents analyze real-time data, forecast demand, and coordinate with suppliers and carriers to ensure timely deliveries. LangChain also supports advanced data analysis, enabling deeper insights into operational performance and identifying optimization opportunities. By enabling autonomous, coordinated agent operations, LangChain reduces manual effort, enhances accuracy, improves operational agility, and drives more efficient, scalable distribution workflows.

Pros/cons

Strengths:

- Modular, scalable architecture suitable for both single-agent and multi-agent workflows

- Seamless integration with LLMs, external tools, and enterprise systems

- Supports RAG for context-aware decision-making

- Strong observability and monitoring features for enterprise accountability

- Developer-friendly utilities with no-code and debugging support

Limitations:

- Initial deployment may require specialized AI/engineering expertise

- Resource overhead can be significant for high-volume, multi-agent operations

- Some enterprise-ready dashboards may require additional customization

4. LangGraph

LangGraph is an AI agent framework designed to enable enterprises to build graph-based, multi-agent workflows powered by large language models (LLMs). By representing tasks, data, and agent interactions as a graph, LangGraph allows organizations to orchestrate complex dependencies, dynamic decision-making, and real-time collaboration efficiently. Its differentiators in 2025 include graph-native workflow modeling, seamless LLM integration, and modular extensibility, making it particularly suited for enterprises seeking structured, scalable, and context-aware AI solutions. LangGraph also supports semantic search within enterprise workflows, enhancing information retrieval and natural language understanding.

Architecture

LangGraph’s architecture revolves around a graph-centric orchestration layer, where nodes represent agents, tasks, or data, and edges define dependencies and interactions. LangGraph facilitates creating agents for specific roles within the workflow, allowing users to design and deploy specialized AI agents tailored to distinct tasks. The framework supports both single-agent execution for simple workflows and distributed, multi-agent graph execution for complex enterprise scenarios. Its modular design allows integration with APIs, databases, cloud services, and enterprise applications, enabling organizations to adopt AI-driven processes without disrupting existing infrastructure.
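To show what "nodes" and "edges" mean in practice, here is a small sketch that runs a workflow graph in dependency order using Python's standard `graphlib` module. It does not use LangGraph's API and only handles acyclic graphs; the `run_graph` helper and the node names are hypothetical.

```python
from graphlib import TopologicalSorter


def run_graph(nodes: dict, edges: list, state: dict) -> tuple:
    """Run each node after all of its upstream nodes; return (final state, execution order)."""
    dependencies = {name: set() for name in nodes}
    for upstream, downstream in edges:
        dependencies[downstream].add(upstream)
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        # Each node reads the shared state and returns a partial update.
        state = {**state, **nodes[name](state)}
    return state, order


nodes = {
    "load_sales": lambda s: {"sales": [120, 135, 150]},
    "forecast": lambda s: {"forecast": sum(s["sales"]) / len(s["sales"])},
    "allocate": lambda s: {"reorder": s["forecast"] > s["on_hand"]},
}
edges = [("load_sales", "forecast"), ("forecast", "allocate")]

final_state, order = run_graph(nodes, edges, {"on_hand": 100})
```

Because dependencies are explicit, adding a new node only requires declaring which nodes it depends on; the execution order follows from the edges.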

Key features

LangGraph enables agents to collaborate intelligently through a graph-based structure, maintaining contextual awareness across interconnected tasks. The framework supports LLM integration and retrieval-augmented generation (RAG) for enhanced decision-making and real-time problem solving.

A standout capability is observability and monitoring. Its graph-based orchestration provides visualizations of node interactions and dependency paths, allowing enterprises to monitor task completion across agents, identify workflow bottlenecks, and ensure operational transparency. By embedding visibility directly into the graph structure, LangGraph enables better debugging, performance tuning, and accountability across multi-agent workflows.

Its modular design ensures scalable and adaptive workflows, while developer-focused utilities, including debugging tools, testing frameworks, and optional no-code interfaces, facilitate fast and reliable deployment. Together, these capabilities allow LangGraph to deliver structured, transparent, and highly efficient AI-driven workflows for enterprise-scale operations.

Use case

LangGraph empowers retailers to anticipate customer demand and optimize inventory across channels using graph-driven agent coordination. Autonomous agents analyze historical sales, regional trends, and promotions to forecast demand accurately, enabling proactive stock allocation. Virtual assistants can also be deployed for customer engagement and support, automating responses and enhancing user interaction in retail environments. This approach reduces overstock and stockouts, enhances supply chain responsiveness, and drives operational efficiency while supporting data-driven, scalable retail decisions.

Pros/cons

Strengths:

- Graph-based orchestration for structured, interdependent workflows

- Modular architecture enabling seamless enterprise integration

- LLM and RAG support for context-aware intelligence

- Built-in observability with graph-level monitoring and workflow transparency

- Developer-friendly tools, including no-code interfaces and testing utilities

Limitations:

- Requires initial graph modeling expertise for complex workflows

- May have higher resource usage in highly interconnected agent networks

- Advanced enterprise dashboarding may need custom integration

5. Semantic Kernel

Semantic Kernel is an AI agent framework designed to orchestrate intelligent agents with a focus on semantic reasoning and contextual understanding. By combining AI planning, memory management, and LLM integration, it lets enterprises execute context-aware, multi-step workflows with precision. The integration of AI models within Semantic Kernel enables advanced reasoning and automation, allowing organizations to build intelligent applications on current AI technology. In 2025, Semantic Kernel stands out for its robust semantic orchestration, extensible plugin architecture, and seamless integration with enterprise systems, making it well suited to organizations seeking generative AI solutions that balance reasoning, automation, and adaptability.

Architecture

At its core, Semantic Kernel operates on a kernel layer that manages agents, memory/state, plugins, and orchestration. It also supports integration with machine learning models, enabling enhanced decision-making and continuous improvement of AI-driven processes. It supports both single-agent workflows for targeted tasks and multi-agent orchestration for complex, distributed processes. Its modular and extensible design integrates smoothly with enterprise databases, APIs, cloud platforms, and third-party tools, allowing businesses to scale AI capabilities without disrupting existing infrastructure.
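The idea of a kernel that manages plugins, memory, and orchestration can be sketched as a small registry that runs a plan of named steps. This is a plain-Python illustration, not Semantic Kernel's API; the `Kernel` class, its methods, and the plugin names are hypothetical.

```python
class Kernel:
    """Toy kernel: registers plugin functions and runs a plan of named steps."""

    def __init__(self):
        self.plugins = {}
        self.memory = {}  # shared state that steps read from and write to

    def add_plugin(self, name, func):
        self.plugins[name] = func

    def invoke(self, name, **arguments):
        if name not in self.plugins:
            raise KeyError(f"unknown plugin: {name}")
        return self.plugins[name](**arguments)

    def run_plan(self, plan):
        """Run (plugin name, output key, argument keys) steps, storing results in memory."""
        for name, output_key, argument_keys in plan:
            arguments = {key: self.memory[key] for key in argument_keys}
            self.memory[output_key] = self.invoke(name, **arguments)
        return self.memory


kernel = Kernel()
kernel.add_plugin("mean_vibration", lambda readings: sum(readings) / len(readings))
kernel.add_plugin("needs_service", lambda level: level > 0.8)
kernel.memory["readings"] = [0.7, 0.9, 1.1]  # hypothetical sensor values

plan = [
    ("mean_vibration", "level", ["readings"]),
    ("needs_service", "alert", ["level"]),
]
outcome = kernel.run_plan(plan)
```

New capabilities are added by registering another plugin, and the plan decides how they are chained, which is the separation the kernel layer is meant to provide.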

Key features

Semantic Kernel enables agents to operate with context-aware intelligence by leveraging semantic reasoning and memory for executing multi-step workflows. Natural language understanding plays a crucial role in enabling these agents to recognize user intent, manage conversational context, and deliver more responsive interactions. It supports LLM integration, retrieval-augmented generation (RAG), plugins, and advanced orchestration, ensuring autonomous task execution that aligns with enterprise objectives.

A distinguishing capability is its observability and monitoring, which captures semantic workflow metrics such as agent reasoning steps, memory usage, and plugin execution. This visibility provides enterprises with fine-grained governance, transparency, and operational oversight, critical for maintaining trust and reliability in context-aware AI workflows.

Developer utilities, including debugging frameworks, testing tools, and optional no-code interfaces, further accelerate deployment while ensuring scalable, adaptive, and intelligent workflows for enterprise environments.

Use case

Semantic Kernel empowers manufacturers to implement predictive maintenance, optimize production processes, and coordinate real-time workflows. Semantic-driven agents analyze machine performance, detect potential failures, and recommend adjustments to improve throughput. By minimizing downtime, optimizing resource utilization, and enabling proactive decision-making, Semantic Kernel helps manufacturers scale intelligent operations while maintaining high-quality standards across complex production environments.

Pros/cons

Strengths:

- Semantic reasoning and memory support for context-aware workflows

- Extensible plugin architecture for seamless enterprise integration

- LLM and RAG support for intelligent decision-making

- Built-in observability for reasoning and memory usage metrics

- Developer-friendly tools, including debugging frameworks and no-code options

Limitations:

- Initial setup and workflow design may require specialized expertise

- Resource usage can increase with complex multi-agent orchestration

- Enterprise-grade dashboards may require additional customization

6. LlamaIndex

LlamaIndex is an AI agent framework designed to streamline access to large, complex datasets for AI workflows. By providing a unified interface for data ingestion, indexing, and retrieval, it enables enterprises to build LLM-powered applications that are both data-aware and contextually precise.

In 2025, LlamaIndex differentiates itself with flexible data connectors, advanced retrieval mechanisms, and seamless integration with large language models (LLMs), making it particularly valuable for organizations seeking to efficiently extract actionable insights from distributed data sources. Its connectors and index structures can be customized, which makes it suitable for a wide range of data-centric applications and use cases.

Architecture

At its core, LlamaIndex is structured around a data-centric orchestration layer that includes connectors, index structures, memory/state management, and query processing modules. This architecture enables AI automation for data-driven workflows, allowing complex processes to be automated efficiently and at scale. It supports both single-agent operations for simple retrieval tasks and multi-agent workflows that coordinate data queries across distributed systems. Its modular design integrates seamlessly with enterprise databases, APIs, cloud storage, and third-party tools, allowing businesses to adopt AI-driven data orchestration without disrupting existing infrastructure.
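The ingest, index, and query flow can be illustrated with a tiny inverted index. This sketch does not use LlamaIndex's API, and keyword overlap stands in for the embedding-based retrieval a real deployment would typically use; `InvertedIndex`, `tokenize`, and the document IDs are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    def __init__(self):
        self.documents = {}
        self.postings = defaultdict(set)  # token -> ids of documents containing it

    def ingest(self, doc_id: str, text: str) -> None:
        self.documents[doc_id] = text
        for token in tokenize(text):
            self.postings[token].add(doc_id)

    def query(self, question: str, k: int = 2) -> list:
        """Rank documents by how many distinct query tokens they contain."""
        hits = defaultdict(int)
        for token in set(tokenize(question)):
            for doc_id in self.postings.get(token, ()):
                hits[doc_id] += 1
        # Sort by hit count (descending), then by id for a stable order.
        ranked = sorted(hits, key=lambda doc_id: (-hits[doc_id], doc_id))
        return ranked[:k]


index = InvertedIndex()
index.ingest("shipment-1", "Pallet of valves delayed at Rotterdam port")
index.ingest("shipment-2", "Valves delivered to Lyon warehouse on time")
index.ingest("invoice-7", "Invoice for freight charges")

top = index.query("Which valves were delayed?")
```

The retrieved documents would then be passed to an LLM as context, which is the retrieval half of retrieval-augmented generation.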

Key features

LlamaIndex enables agents to operate with context-aware intelligence, leveraging indexed data to deliver precise, relevant outputs. It supports LLM integration, retrieval-augmented generation (RAG), and dynamic query processing to ensure accurate, real-time responses to complex data requests.

A critical strength is its observability and monitoring capabilities, which track query execution, indexing performance, retrieval accuracy, and agent response times. These insights allow enterprises to optimize data pipeline efficiency and maintain transparency into how AI agents interact with distributed datasets, ensuring reliability and trust at scale.

Developer-focused utilities, including debugging frameworks, testing tools, and optional no-code interfaces, further accelerate deployment and support enterprise-grade, data-intensive applications.

Use case

In distribution, LlamaIndex empowers enterprises to query large, distributed repositories in real time to optimize inventory management, order fulfillment, and logistics planning. AI agents analyze shipment histories, forecast demand, and coordinate warehouse operations to maximize efficiency. LlamaIndex enables agents to tackle complex tasks in distribution networks, such as managing intricate workflows and multi-step processes through effective collaboration and communication. By reducing decision latency, improving accuracy, and enhancing supply chain responsiveness, LlamaIndex helps distributors scale data-driven AI applications while ensuring timely and cost-effective delivery across complex networks.

Pros/cons

Strengths:

- Data-centric architecture enabling efficient indexing and retrieval

- Seamless LLM and RAG integration for context-aware operations

- Observability tools for monitoring query and pipeline performance

- Modular design for flexible enterprise integration

- Developer-friendly utilities, including debugging tools and no-code options

Limitations:

- Requires careful setup of data connectors and indexing for optimal results

- Resource usage may increase with extremely large or complex datasets

- Enterprise dashboarding features may require additional customization

7. LangChain4j (Java Ecosystem)

LangChain4j brings the power of AI agent frameworks to the Java ecosystem, enabling enterprises to integrate LLM-driven workflows directly into Java-based applications. Designed for robust, type-safe, and scalable deployments, it allows organizations to embed intelligent agents without disrupting existing enterprise systems. In 2025, LangChain4j differentiates itself with Java-native APIs, modular workflow orchestration, and seamless enterprise integration, making it particularly suited for enterprises leveraging Java at scale. LangChain4j also supports building conversational AI applications in Java environments, enabling the development of sophisticated, natural language interfaces and virtual assistants.

Architecture

The framework is built around Java-first agents, orchestration layers, memory/state management, and plugin modules. It supports both single-agent workflows for targeted tasks and multi-agent coordination for distributed enterprise operations. LangChain4j’s modular design ensures smooth connections with Java APIs, enterprise databases, cloud services, and microservices, allowing AI capabilities to integrate seamlessly into existing infrastructure.

Key features

LangChain4j enables agents to execute context-aware, LLM-powered tasks with efficiency and reliability. Core features include retrieval-augmented generation (RAG), external tool integration, dynamic workflow execution, and memory management. It also offers robust dialogue management, allowing developers to build advanced conversational systems that integrate natural language understanding (NLU) and dialogue policies for personalized, multi-turn interactions.
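To show what memory management means for a multi-turn conversation, here is a small sketch of a sliding-window memory. It is written in Python for brevity rather than Java, and it is not LangChain4j's API: the `Message` and `WindowMemory` classes and the sample dialogue are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    role: str      # "user" or "assistant"
    content: str


class WindowMemory:
    """Keeps only the most recent `max_messages` turns of a conversation."""

    def __init__(self, max_messages: int) -> None:
        # A bounded deque drops the oldest item automatically when full.
        self._messages: deque[Message] = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self._messages.append(Message(role, content))

    def as_prompt(self) -> str:
        """Render the retained turns as context for the next LLM call."""
        return "\n".join(f"{m.role}: {m.content}" for m in self._messages)


memory = WindowMemory(max_messages=3)
memory.add("user", "Do you have loyalty discounts?")
memory.add("assistant", "Yes, members get 10% off.")
memory.add("user", "How do I join?")
memory.add("assistant", "Sign up at any register.")  # evicts the oldest turn
```

Capping the window keeps prompts short and costs predictable, at the price of forgetting older turns. Whether that trade-off is acceptable depends on how long your conversations typically run.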

A standout differentiator is its observability and monitoring support, which provides Java-native logging and performance metrics across task execution, agent coordination, and resource consumption. By integrating with established Java monitoring frameworks (e.g., Micrometer, JMX, or enterprise APM tools), it ensures transparent operations, fine-grained visibility, and rapid troubleshooting in production environments.

Developer-focused utilities such as Java-native debugging, testing frameworks, and optional no-code adapters further accelerate deployment, reduce engineering overhead, and maintain operational reliability for enterprise-scale AI workflows.

Use case

In retail, LangChain4j empowers Java-based systems to automate personalized promotions, loyalty program management, and in-store workflow coordination. Embedded AI agents analyze customer behavior, predict purchasing patterns, and optimize staff allocation for peak hours. These AI agents work alongside human agents, who review, approve, or modify AI-generated outputs and handle complex tasks that require human intervention. By reducing manual oversight, improving operational efficiency, and enabling data-driven personalization, LangChain4j helps retailers scale intelligent processes and enhance the overall shopping experience.

Pros/cons

Strengths:

- Fully Java-native for enterprise-grade integration and type safety

- Supports single-agent and multi-agent distributed workflows

- RAG and LLM integration for context-aware operations

- Built-in observability and monitoring via Java-native tools

- Developer-friendly utilities: debugging, testing, and optional no-code adapters

Limitations:

- Requires Java expertise for initial deployment and configuration

- High-volume distributed workflows may demand additional resources

- Advanced dashboarding may require supplementary setup

8. OpenAI Agents

OpenAI Agents are designed to enable autonomous, context-aware AI operations across enterprise environments. Leveraging OpenAI’s advanced LLMs, these agents can perceive context, orchestrate multi-step workflows, and adapt dynamically to changing requirements. In 2025, OpenAI Agents differentiate themselves with robust LLM integration, seamless API connectivity, and scalability across industries, providing enterprises with intelligent digital collaborators that extend beyond traditional automation. As one of the popular AI agent frameworks for enterprise use, OpenAI Agents offer modular design and specialized task capabilities that streamline AI development processes.

Architecture

The framework is structured around agent cores, orchestration layers, memory/state management, and external tool connectors. OpenAI Agents enable organizations to create autonomous agents that can perceive input, process information, and execute actions across diverse workflows. It supports both single-agent operations for targeted tasks and multi-agent coordination for complex, distributed workflows. OpenAI Agents integrate with enterprise databases, cloud services, and third-party APIs, allowing organizations to embed intelligent decision-making capabilities without disrupting existing systems.

Key features

OpenAI Agents enable context-driven task execution and intelligent decision-making. Core capabilities include:

- Retrieval-augmented generation (RAG) support

- LLM integration for semantic and contextual reasoning

- External tool usage for enhanced workflow capabilities

- Memory management for multi-step task continuity

- Collaborative multi-agent workflows for distributed operations
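External tool usage generally follows one pattern: the model names a tool and its arguments, and the runtime looks that tool up and executes it. The Python sketch below illustrates the pattern only. It does not call the OpenAI SDK: `fake_model` is a stand-in for an LLM, and the `TOOLS` registry, the `tool` decorator, and the energy readings are hypothetical.

```python
import json
from typing import Callable

# Registry mapping tool names to plain Python functions.
TOOLS: dict[str, Callable[..., object]] = {}


def tool(func: Callable[..., object]) -> Callable[..., object]:
    """Register a function so the agent loop can call it by name."""
    TOOLS[func.__name__] = func
    return func


@tool
def energy_usage_kwh(line: str) -> float:
    """Hypothetical lookup; a real tool would query a plant database."""
    readings = {"line-1": 412.5, "line-2": 388.0}
    return readings[line]


def fake_model(task: str) -> str:
    """Stand-in for an LLM: returns a JSON tool call instead of free text."""
    return json.dumps({"tool": "energy_usage_kwh", "args": {"line": "line-1"}})


def run_agent(task: str) -> object:
    """Ask the model what to do, then dispatch the requested tool call."""
    call = json.loads(fake_model(task))
    name, args = call["tool"], call["args"]
    if name not in TOOLS:
        # Never execute anything the runtime has not explicitly registered.
        raise KeyError(f"model requested unknown tool: {name}")
    return TOOLS[name](**args)


result = run_agent("How much energy did line 1 use today?")
```

Restricting the agent to an explicit registry is one simple way to keep control over which systems it is allowed to touch.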

A key differentiator is observability and monitoring, which provides visibility into execution details, inter-agent coordination, and system resource usage. These insights can integrate with enterprise monitoring systems, ensuring governance, operational reliability, and actionable insights across all AI-driven processes.

Developer-focused utilities, including debugging tools, testing frameworks, and optional no-code interfaces, accelerate deployment and enhance operational reliability, enabling enterprises to scale AI workflows efficiently while maintaining accuracy, consistency, and accountability.

Use case

In manufacturing, OpenAI Agents monitor energy consumption, machinery usage, and resource allocation across production facilities. Agents analyze operational data to identify inefficiencies, suggest adjustments, and optimize workflows in real time. By reducing energy waste, improving resource utilization, and enabling proactive operational decisions, OpenAI Agents help manufacturers cut costs, enhance sustainability, and scale AI-driven efficiency across complex production environments.

Pros/cons

Strengths:

- Deep LLM integration for context-aware decision-making

- Supports both single-agent and multi-agent workflows

- Flexible integration with enterprise APIs and cloud services

- Built-in observability for workflow execution and resource monitoring

- Developer-friendly utilities: debugging, testing, and optional no-code interfaces

Limitations:

- Initial setup may require expertise in AI orchestration

- Resource usage can increase with multi-agent complexity

- Enterprise-grade monitoring dashboards may require customization

9. Atomic Agents

Atomic Agents is an AI agent framework designed for highly modular, task-focused automation, enabling enterprises to orchestrate discrete AI agents that execute independent yet coordinated workflows. Atomic Agents excels at managing multi-step tasks through modular orchestration, allowing complex processes to be automated across different systems. In 2025, its key strengths lie in flexible agent composition, lightweight deployment, and rapid integration, making it ideal for organizations seeking precise, scalable AI solutions without overhauling existing systems.

Architecture

The framework is built around independent atomic agents, a lightweight orchestration layer, memory management, and plugin modules. Each agent operates autonomously but can be orchestrated for multi-agent workflows when coordination is required. Its modular architecture allows seamless integration with enterprise databases, APIs, cloud services, and existing application stacks, ensuring minimal disruption while maximizing automation efficiency.

Key features

Atomic Agents deliver task-specific intelligence with modular execution, enabling context-aware actions across business processes. Core capabilities include:

- LLM integration for context-aware decision-making

- Memory/state management for multi-step task continuity

- Tool and plugin usage for extended workflow capabilities

- Inter-agent communication for coordinated operations
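The modular idea can be pictured as small, single-purpose agents with clearly typed inputs and outputs that are chained together. The Python sketch below is an illustration of that design only and does not use the Atomic Agents library: the dataclasses, the two agent functions, and the 30-minute threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RouteRequest:
    shipment_id: str
    delay_minutes: int


@dataclass(frozen=True)
class RouteDecision:
    shipment_id: str
    reroute: bool


@dataclass(frozen=True)
class Notification:
    shipment_id: str
    message: str


def route_agent(request: RouteRequest) -> RouteDecision:
    """Single-purpose agent: decide whether a delayed shipment is rerouted."""
    # The 30-minute threshold is an arbitrary value chosen for this example.
    return RouteDecision(request.shipment_id, reroute=request.delay_minutes > 30)


def notify_agent(decision: RouteDecision) -> Notification:
    """Single-purpose agent: turn a routing decision into a dispatcher message."""
    action = "rerouted" if decision.reroute else "kept on its current route"
    return Notification(decision.shipment_id, f"Shipment {decision.shipment_id} {action}")


def pipeline(request: RouteRequest) -> Notification:
    """Orchestration here is plain composition over typed inputs and outputs."""
    return notify_agent(route_agent(request))


note = pipeline(RouteRequest("S-102", delay_minutes=45))
```

Because each agent only depends on the shape of its input, you can test, replace, or reuse one agent without touching the others.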

A key differentiator is observability and monitoring, providing granular visibility into individual agent execution and coordinated workflows. Enterprises can capture task performance, agent interactions, and system resource usage, integrating these insights into existing monitoring tools to ensure operational control, accountability, and predictable AI operations.

Developer-focused utilities, including debugging tools, testing frameworks, and optional low-code interfaces, accelerate deployment, support maintainable workflows, and maintain operational reliability for enterprise-scale automation.

Use case

In distribution, Atomic Agents monitor vehicle locations, traffic conditions, and shipment priorities in real time. Each agent autonomously evaluates routes, coordinates with others to prevent delays, and dynamically reallocates resources when disruptions occur. By reducing transit times, minimizing fuel costs, and improving on-time deliveries, Atomic Agents enable distributors to scale responsive, AI-driven logistics networks efficiently.

Pros/cons

Strengths:

- Modular agent design for highly targeted workflows

- Lightweight and flexible for rapid deployment

- LLM and memory integration for context-aware operations

- Built-in observability for agent execution and coordinated workflows

- Developer-friendly tools for testing, debugging, and low-code deployment

Limitations:

- Requires careful orchestration for complex multi-agent workflows

- Additional monitoring may be needed for large-scale deployments

- An early-stage ecosystem may limit third-party plugin availability

Choosing the right AI agent framework

Businesses today are raising the bar for AI agents, and rightly so, as these intelligent systems continue to evolve rapidly. Yet their full potential can only be realized when your business lays the right foundations. Selecting the right AI agent framework is critical: it transforms agents from isolated tools into trusted collaborators that orchestrate workflows, enable smarter decisions, and scale with your operations. Drawing from our AI experts’ experience, we highlight the key factors to guide your framework choice.

1. Align with business objectives

Evaluate frameworks against your enterprise goals. Whether the focus is accelerating decision-making, optimizing multi-step workflows, reducing manual effort, or scaling operations, the framework must support measurable outcomes. Aligning technology with strategy ensures automation delivers tangible business value rather than isolated technical gains.

2. Assess framework maturity

Framework maturity reflects stability, reliability, and the risk of adoption. Production-ready frameworks offer enterprise-grade dependability, while experimental or research-stage options may provide cutting-edge capabilities but call for tighter governance before they reach production. Understanding maturity helps balance innovation with operational continuity.

3. Evaluate orchestration style

Frameworks coordinate agent tasks in different ways: sequentially, hierarchically, through a graph of dependencies, or in response to events. Select a style that matches the complexity and interdependencies of your workflows. Proper orchestration ensures smooth collaboration, avoids bottlenecks, and maximizes automation efficiency across enterprise operations.
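As one illustration of the graph-based style, the Python sketch below runs steps in an order derived from their dependencies, using the standard library's `graphlib`. The step functions, the `DEPENDS_ON` mapping, and the sample orders are hypothetical. A sequential workflow is simply the special case where each step depends only on the one before it.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable

State = dict[str, Any]


def load_orders(state: State) -> None:
    state["orders"] = ["o1", "o2", "o3"]


def check_stock(state: State) -> None:
    # Pretend that order "o2" is out of stock.
    state["in_stock"] = [o for o in state["orders"] if o != "o2"]


def plan_routes(state: State) -> None:
    state["route_slots"] = len(state["orders"])


def dispatch(state: State) -> None:
    state["dispatched"] = state["in_stock"][: state["route_slots"]]


STEPS: dict[str, Callable[[State], None]] = {
    "load_orders": load_orders,
    "check_stock": check_stock,
    "plan_routes": plan_routes,
    "dispatch": dispatch,
}

# Each step maps to the set of steps that must finish before it starts.
DEPENDS_ON: dict[str, set[str]] = {
    "load_orders": set(),
    "check_stock": {"load_orders"},
    "plan_routes": {"load_orders"},
    "dispatch": {"check_stock", "plan_routes"},
}


def run_graph() -> tuple[list[str], State]:
    """Execute every step once, in an order that respects the dependencies."""
    state: State = {}
    order = list(TopologicalSorter(DEPENDS_ON).static_order())
    for name in order:
        STEPS[name](state)
    return order, state


order, state = run_graph()
```

Here `check_stock` and `plan_routes` do not depend on each other, so a graph-based orchestrator is free to run them in either order or in parallel. That freedom is the main practical difference from a strictly sequential chain.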

4. Check enterprise readiness

A framework must be production-ready, scalable, and integration-ready, fitting seamlessly into existing IT infrastructure, enterprise architecture, and security protocols. Enterprise readiness ensures minimal disruption, faster adoption, and consistent performance across teams and geographies.

5. Integration and key capabilities

Examine how the framework connects with LLMs, APIs, plugins, databases, and workflow tools, while also evaluating multi-agent coordination, memory/state management, RAG support, and workflow automation. Combining integration and capabilities ensures agents embed seamlessly into current systems, enabling cohesive automation and operational resilience.

6. Scalability and future-proofing

Select frameworks that scale across teams, departments, and geographies, and can adapt to evolving AI technologies, business growth, and changing automation demands. Future-proof frameworks ensure investments remain relevant, resilient, and continuously valuable.

7. Decision-making guidance

Adopt a structured approach: use a checklist or scoring system, weigh trade-offs (flexibility vs. simplicity, cutting-edge features vs. stability), and align framework selection with workflow complexity, industry-specific requirements, and organizational maturity. This ensures that choices are strategic, risk-aware, and outcome-driven.
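A scoring system can be as simple as a weighted sum. The Python sketch below shows the arithmetic under stated assumptions: the criteria, the weights, the 1-to-5 ratings, and the names "Framework A" and "Framework B" are made-up placeholders, not assessments of any real framework.

```python
# Weights express how much each criterion matters to you; they sum to 1.
WEIGHTS = {
    "maturity": 0.3,
    "integration": 0.3,
    "scalability": 0.2,
    "ease_of_use": 0.2,
}

# Placeholder ratings on a 1-5 scale, for illustration only.
RATINGS = {
    "Framework A": {"maturity": 4, "integration": 3, "scalability": 5, "ease_of_use": 2},
    "Framework B": {"maturity": 3, "integration": 5, "scalability": 3, "ease_of_use": 4},
}


def weighted_score(ratings: dict[str, int], weights: dict[str, float]) -> float:
    """Return the sum of rating * weight over all criteria."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[c] * ratings[c] for c in weights)


scores = {name: weighted_score(r, WEIGHTS) for name, r in RATINGS.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
```

With these placeholder numbers, Framework A scores 1.2 + 0.9 + 1.0 + 0.4 = 3.5 and Framework B scores 0.9 + 1.5 + 0.6 + 0.8 = 3.8, so B ranks first. Changing the weights to reflect your own priorities can change the ranking, which is exactly the trade-off discussion the scoring exercise is meant to surface.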

Harness AI agent frameworks for enterprise success

AI agents can only reach their full potential when your business lays the right foundation, starting with the right framework. The right framework ensures your AI agents orchestrate complex workflows, maintain context across processes, and support smarter, faster decision-making, transforming everyday operations into intelligent, scalable systems. Frameworks like AutoGen, LangChain, and CrewAI demonstrate how multi-agent orchestration and LLM integration solve real-world challenges and deliver measurable business impact.

At Rapidops, we combine deep industry experience with hands-on expertise to help organizations identify, build, and implement the AI agent frameworks that truly fit their workflows and business goals. We don’t just deploy technology; we ensure your teams adopt it effectively, improve observability, and achieve tangible outcomes that make automation a real strategic advantage.

Take the next step: schedule a complimentary strategy call with one of our AI experts to explore which AI agent framework best aligns with your organization and receive tailored guidance on designing, implementing, and scaling intelligent AI agents that drive growth, efficiency, and innovation.

Frequently Asked Questions

What industries are benefiting most from AI agent frameworks today?

If your business operates in retail, healthcare, finance, or manufacturing, you’ll find that top AI agent frameworks are already transforming these sectors. Retailers use AI agents to create personalized shopping experiences and streamline inventory management. Healthcare providers deploy them for patient triage, diagnostics, and resource allocation. Financial institutions leverage AI agents for fraud detection and compliance, while manufacturers optimize production and logistics through predictive maintenance. Understanding how these frameworks are applied in your industry can help you identify opportunities to enhance efficiency, reduce costs, and deliver more value to your customers.

How can businesses assess the maturity of an AI agent framework?

Assessing maturity is about more than just features; it’s about reliability and long-term viability. A mature framework typically offers consistent updates, robust documentation, and a strong developer community. Look for frameworks that have been successfully deployed in enterprise environments, offer seamless LLM integration, and provide comprehensive orchestration tools. Evaluating maturity this way ensures your business can confidently adopt the framework without facing operational hiccups, giving you a foundation for sustainable automation growth.

What metrics indicate framework reliability and stability?

When considering top AI agent frameworks, focus on measurable indicators of performance. Reliability can be assessed through uptime, error rates, and task completion accuracy. Stability comes from resilience under high workloads, built-in monitoring, and automated failover mechanisms. Frameworks with strong vendor support and a proven track record in enterprise deployments provide an extra layer of confidence, letting you scale AI-driven automation without unexpected interruptions.
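If you want to track these indicators yourself, they reduce to a few simple ratios. The Python sketch below uses one reasonable set of definitions, which is an assumption rather than an industry standard, and the sample runs and downtime figures are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskRun:
    succeeded: bool  # the run finished without an error
    correct: bool    # the output passed a validation check


def error_rate(runs: list[TaskRun]) -> float:
    """Share of runs that ended in an error."""
    return sum(not r.succeeded for r in runs) / len(runs)


def completion_accuracy(runs: list[TaskRun]) -> float:
    """Share of successfully finished runs whose output was correct."""
    finished = [r for r in runs if r.succeeded]
    return sum(r.correct for r in finished) / len(finished)


def uptime(total_minutes: float, downtime_minutes: float) -> float:
    """Fraction of the period during which the service was available."""
    return (total_minutes - downtime_minutes) / total_minutes


# Made-up sample: 6 correct runs, 1 finished but wrong, 1 failed outright.
RUNS = [TaskRun(True, True)] * 6 + [TaskRun(True, False), TaskRun(False, False)]

summary = {
    "error_rate": error_rate(RUNS),                    # 1 of 8 runs failed
    "completion_accuracy": completion_accuracy(RUNS),  # 6 of 7 finished runs correct
    "uptime": uptime(30 * 24 * 60, 43.2),              # 43.2 minutes down in 30 days
}
```

Measuring these during a pilot gives you a baseline to compare frameworks on evidence rather than on feature lists.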

How do frameworks differ in terms of orchestration and agent coordination?

AI agent frameworks approach orchestration in different ways. Some provide a centralized control layer for distributing tasks and monitoring agents, while others favor decentralized coordination, where agents operate autonomously but communicate via defined protocols. Choosing the right orchestration style depends on your workflows and business needs. Selecting a framework that aligns with your operational model ensures your AI agents work together efficiently, reducing bottlenecks and maximizing productivity.

What role do internal technical capabilities play in framework choice?

Your team’s technical skills are a key factor in framework adoption. If your developers have experience with programming languages, cloud platforms, and LLM integration, you can leverage more advanced, customizable frameworks. For teams with limited technical bandwidth, frameworks offering pre-built modules or low-code interfaces can accelerate deployment. Matching framework complexity to your internal capabilities ensures smoother implementation, faster results, and more effective use of AI agents across business functions.

Which AI agent frameworks are best suited for small and medium businesses?

For small and medium businesses, the most effective AI agent frameworks are those that combine ease of use, scalability, and practical functionality. Frameworks like LangChain, LlamaIndex, and OpenAI Agents allow SMBs to quickly automate repetitive tasks, integrate with existing systems, and leverage pre-trained LLMs without heavy technical resources. By focusing on frameworks that balance affordability, flexibility, and growth potential, SMBs can make informed decisions, deploy AI agents efficiently, and gradually expand automation to drive operational efficiency and customer engagement.

What are common pitfalls when selecting AI agent frameworks?

Many organizations encounter avoidable challenges during framework selection. Common pitfalls include overlooking integration complexity, underestimating technical demands, prioritizing features over scalability, or ignoring compliance needs. Choosing a framework without evaluating community adoption or vendor support can also create long-term risks. Being aware of these pitfalls enables your business to make informed decisions, avoid implementation delays, and maximize the benefits of AI agent frameworks.

How do you determine which AI agent framework fits your business goals?

Start by defining the specific problems you want AI agents to solve and the outcomes you aim to achieve. Evaluate top AI agent frameworks against key criteria, including scalability, orchestration capabilities, reliability, and enterprise readiness. Pilot testing or proof-of-concept deployments can provide real-world insights into performance, helping you select a framework that aligns with both immediate objectives and long-term strategic goals. This approach ensures your investment delivers measurable impact.

How do you balance cost versus capability when selecting a framework?

Cost should be considered alongside functionality and long-term value. Top AI agent frameworks differ in licensing, cloud usage, and maintenance needs. Look beyond upfront costs to understand potential savings from automation, productivity gains, and improved decision-making. Prioritize frameworks that provide essential features, scalability, and integration capabilities without unnecessary complexity. This balance ensures your investment drives meaningful business outcomes without compromising efficiency or flexibility.

How do AI agent frameworks adapt to evolving LLM capabilities?

Leading frameworks are designed to evolve with advancements in LLM technology. Look for frameworks that allow modular LLM integration, API-based updates, and multi-agent orchestration. This adaptability enables your business to leverage improvements in reasoning, context understanding, and multi-modal processing without disrupting workflows. By choosing flexible frameworks, you ensure your AI agents remain effective, future-ready, and capable of delivering continuous value as LLM technology advances.
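Modular LLM integration usually comes down to having the agent depend on a small interface rather than on one specific model. The Python sketch below illustrates the idea. The `LLM` protocol, the two toy model classes, and the `Agent` class are hypothetical and do not correspond to any particular framework's API.

```python
from typing import Protocol


class LLM(Protocol):
    """The only thing the agent needs from a model: text in, text out."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Toy model standing in for a current-generation LLM client."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutingModel:
    """Toy model standing in for a newer LLM with different behavior."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class Agent:
    """Agent logic that is unaware of which concrete model it is using."""

    def __init__(self, llm: LLM) -> None:
        self.llm = llm

    def run(self, task: str) -> str:
        return self.llm.complete(f"Task: {task}")


# Swapping the model requires no change to the Agent class.
before = Agent(EchoModel()).run("summarize orders")
after = Agent(ShoutingModel()).run("summarize orders")
```

When a framework is built this way, adopting a newer model becomes a configuration change instead of a rewrite of your workflows.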

Rahul Chaudhary

Content Writer

With 5 years of experience in AI, software, and digital transformation, I’m passionate about making complex concepts easy to understand and apply. I create content that speaks to business leaders, offering practical, data-driven solutions that help you tackle real challenges and make informed decisions that drive growth.
