In just a few years, large language models (LLMs) have become central to how enterprises generate content, automate services, and make decisions. Yet most of today’s models were trained on a narrow slice of the enterprise reality: text. That’s no longer enough.

Your business doesn’t just communicate in language; it sees, hears, moves, and reacts across a web of formats. From product images and voice messages to sensor readings and scanned contracts, enterprise data is inherently multimodal. And that’s where a new class of intelligence is emerging, multimodal LLMs.

Multimodal LLMs are advanced models trained to understand, interpret, and generate information across formats, such as text, images, audio, video, and more in a unified context. By integrating modalities, they go beyond task automation to enable reasoning, synthesis, and human-like understanding.

This shift isn’t incremental; it’s foundational. Unlike first-generation LLMs that operate in isolation, multimodal systems bring AI closer to how humans process the world: holistically, contextually, and cross-functionally. They’re reshaping how businesses interact with customers, make real-time decisions, and automate complex workflows with greater nuance and precision, especially when powered through focused LLM development that fine-tunes these models on enterprise-specific data for maximum relevance.

In this blog, we’ll explore what multimodal LLMs are, how they’re trained using enterprise-specific data to perform targeted business tasks, how they work across industries, and where they’re creating real impact today. If you’re defining the next phase of enterprise intelligence, this is where transformation begins.

Defining multimodal LLMs, AI that understands beyond text

What is a multimodal LLM?

A multimodal large language model (LLM) is an advanced AI system designed to process and understand multiple types of data, such as text, images, audio, video, and sensor inputs, in a unified framework. Unlike traditional LLMs that rely solely on text, multimodal LLMs integrate diverse modalities to generate deeper context and more accurate outputs. This allows them to interpret visual cues, understand spoken language, and respond with relevance across formats. Enterprise-grade multimodal LLMs are trained on massive cross-modal datasets, enabling them to reason across inputs and deliver intelligent responses tailored to complex, real-world scenarios in business, healthcare, retail, and beyond.

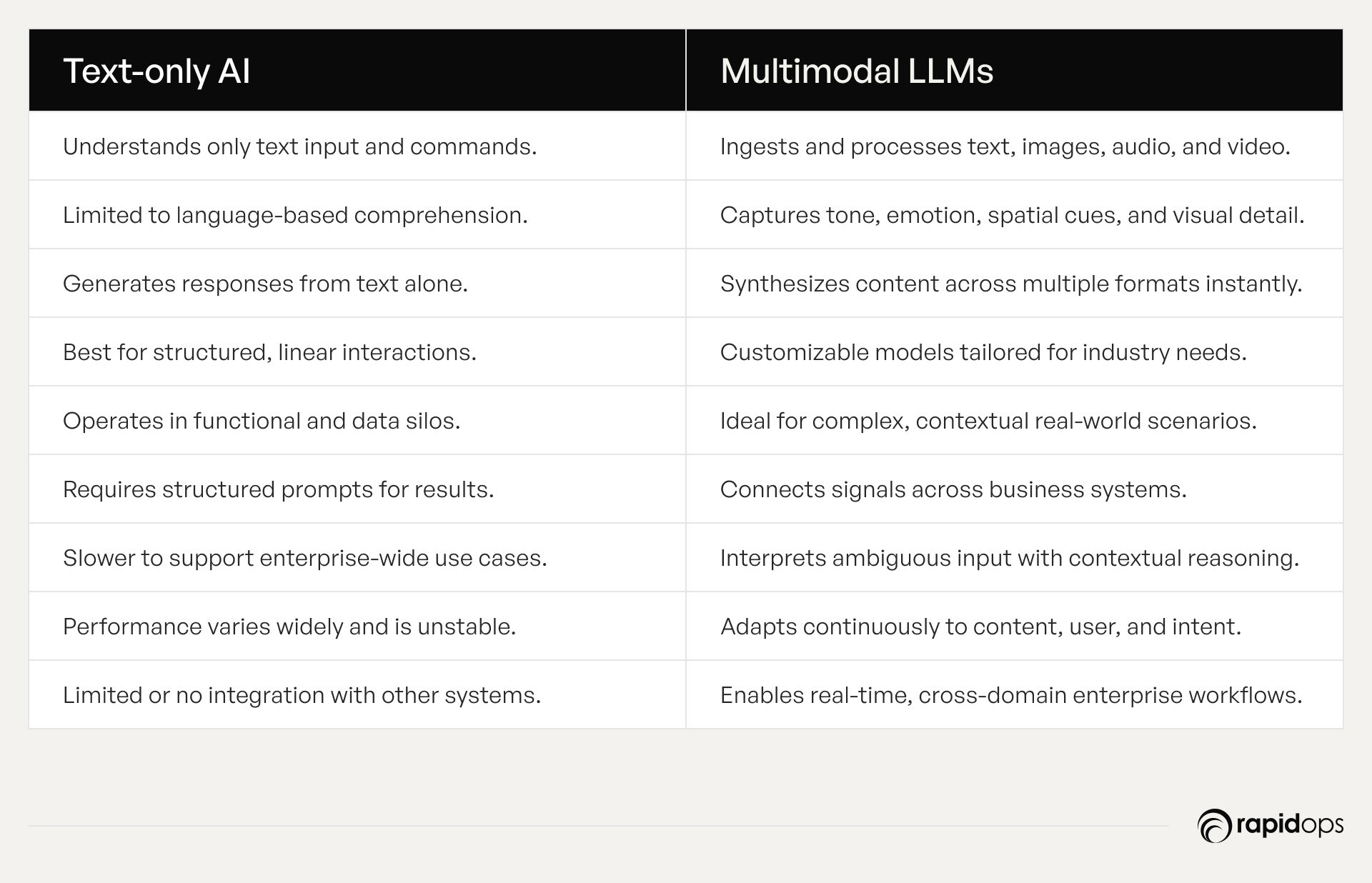

How multimodal LLMs differ from text-only AI

To understand their true leap forward, let’s compare multimodal LLMs with traditional, text-only AI systems.

While text-based LLMs are powerful for language generation, summarization, and structured document processing, their utility is constrained by a single input channel. Multimodal LLMs go beyond language, combining text, images, audio, video, and sensor data into one contextual stream. This unlocks more informed, context-aware automation and decision-making at scale, mirroring how real-world decisions are made across formats, not just words.

Common modalities in enterprise contexts

Enterprises generate data across an ecosystem of touchpoints:

- Text from contracts, support chats, and documentation

- Images from product catalogs, manufacturing lines, or retail environments

- Audio from customer service calls and operational voice logs

- Video from surveillance, inspections, or training

- Sensor data from IoT devices, industrial systems, and smart infrastructure

Multimodal LLMs interpret these signals holistically, rather than in isolation, providing a more complete understanding of business conditions and customer intent.

Why modalities matter in real-world enterprise systems

Modern businesses operate in environments that are inherently multimodal. A support issue might begin with a chat, escalate with a photo upload, and require diagnostic audio or sensor data for resolution. Text-only models can capture fragments, but multimodal LLMs deliver continuity, connecting disparate inputs into a unified understanding that mirrors how humans perceive complex scenarios.

This is especially critical in industries like retail, manufacturing, and distribution, where delivering great customer experiences depends on interpreting visual, verbal, and contextual data. Multimodal systems help unify this intelligence, enabling faster responses, sharper insights, and smarter automation across the value chain.

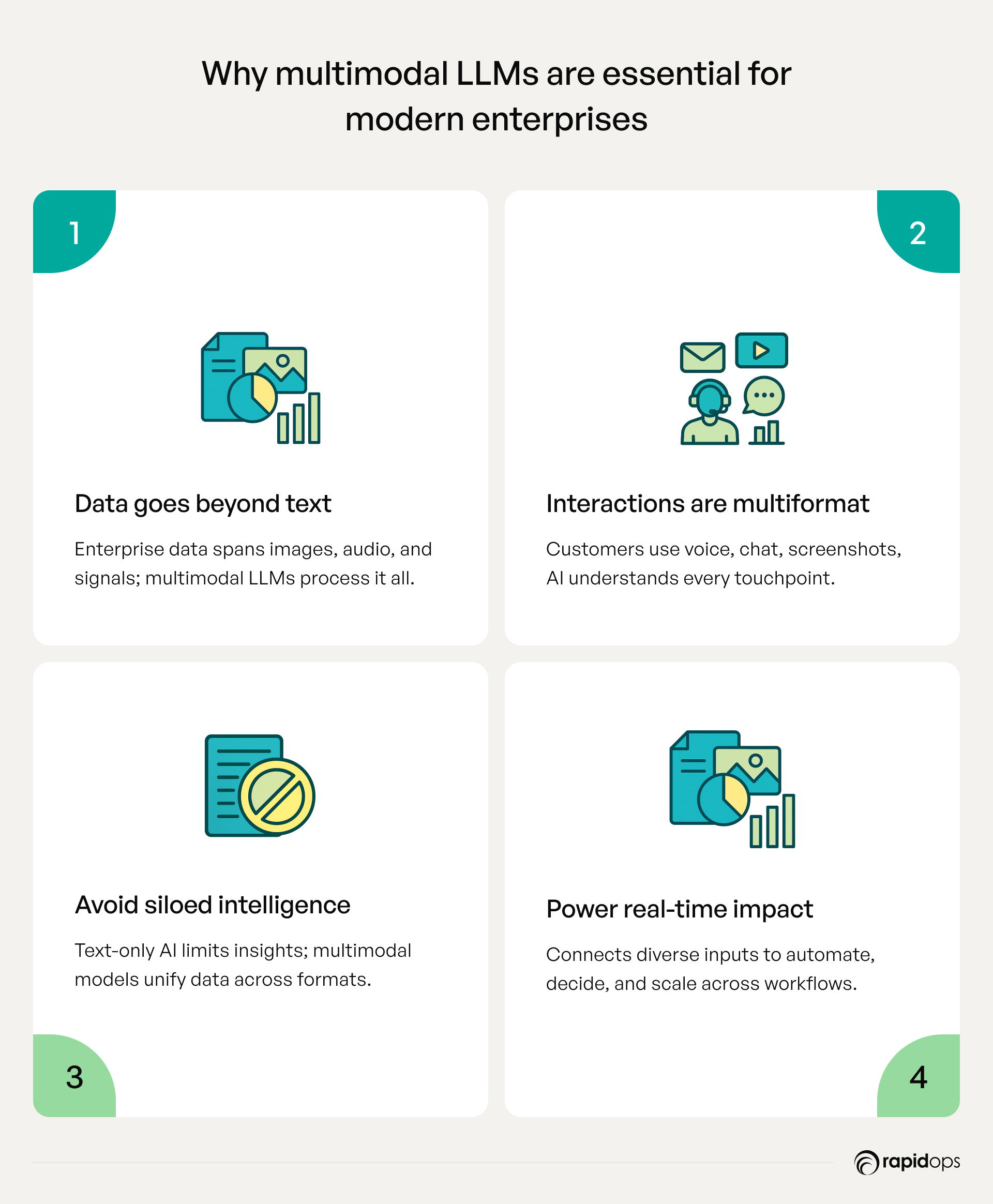

Why businesses need multimodal LLMs, not just text-based models

Enterprise data now spans far beyond text, ranging from product visuals and voice logs to video assessments and IoT signals. Text-only models can’t capture the full picture. Multimodal LLMs connect these diverse inputs into one intelligent system, eliminating blind spots and enabling faster, more contextual decisions that scale across teams.

1. Enterprise data is inherently multimodal

Across industries from manufacturing to retail to distribution, data doesn’t live in spreadsheets alone. It includes thermal scans, product photos, conversational transcripts, and telemetry from machines. These are not secondary signals; they are core to operational intelligence. Ignoring them means missing the real-time context required to act with speed and scale.

2. Customer interactions are no longer text-first

Today’s customer journeys unfold across chat, voice, images, and video, often within a single interaction. A service issue might begin with a typed message, followed by a screenshot, then a voice call. Text-only LLMs miss these cues. They can’t detect tone escalation, interpret product defects, or correlate cross-channel context. This leads to longer resolution times, missed insights, and fractured experiences, especially costly in high-stakes industries where trust is paramount.

3. Text-centric models fragment enterprise intelligence

Even the most advanced text-based models operate in isolation. They can’t process images, audio, or real-world signals together, forcing enterprises to invest in siloed tools for every modality. This raises complexity, inflates costs, and introduces friction in governance, compliance, and user experience.

4. The case for unified intelligence

Multimodal LLMs shift the paradigm. By aligning inputs across text, image, audio, video, and sensor data, they create a unified understanding of context more closely aligned with how businesses operate. This enables enterprises to:

- Automate cross-functional workflows

- Personalize customer experiences in real time

- Accelerate decision-making across business units

- Break down data silos with actionable insight

- Minimize manual interpretation and reduce inefficiencies

This isn’t just a technology upgrade; it’s a strategic leap. Multimodal LLMs realign AI with the way your enterprise thinks, decides, and delivers value. They enable a new level of enterprise intelligence that is context-aware, adaptive, and built for scale.

How multimodal LLMs work in enterprise environments

To truly appreciate their strategic impact, it’s critical to understand not just what multimodal LLMs are but how they operate within enterprise ecosystems. This deep dive, designed for business leaders like you, decodes each layer of architecture and ties it to practical business value.

1. Independent encoding of diverse inputs

Enterprise signals come in many forms, such as structured text, high-resolution visuals, audio files, videos, and streams from sensors (e.g., IoT, telemetry). Multimodal LLMs begin by transforming each type into specialized embeddings:

- Text is tokenized and mapped to semantic vectors.

- Images go through convolutional or transformer-based vision encoders.

- Audio is transformed into spectrogram representations.

- Videos and sensor feeds are segmented into frame-by-frame or time-series embeddings.

This step preserves the inherent richness of each data type while converting it into standardized numeric representations, laying the foundation for unified processing.

2. Cross‑modal alignment and fusion

The magic lies in the fusion and careful alignment of these embeddings into a shared context.

- Cross-attention layers enable the model to determine which modality informs another (e.g., image context clarifying a text query).

- Projection layers map disparate embeddings into a joint latent space.

- The result is a fused representation where different modalities inform and enrich each other, creating a coherent, composite view of an event or request.

For enterprises, that means disparate data, like a support image and a payment record, can be interpreted together, enabling seamless automation or diagnosis.

3. Learning cross‑modal associations

During training, multimodal LLMs are exposed to paired data (e.g., image + caption, audio + transcript, sensor + log). This allows the model to recognize:

- Visual patterns tied to text (e.g., packaging damage visualized + described)

- Aural cues that reflect sentiment or urgency

- Time-based correlations (e.g., a spike in machine temperature, followed by anomaly alerts and operator notes)

This cross-modal reasoning capability underpins intelligent inference, enabling the model to answer questions like:

- “Is the damaged unit covered under warranty?”

- “Is this audio complaint critical?”

- “Was this sensor anomaly a false alarm?”

4. Contextual inference & output generation

Once trained, the model applies its fused understanding to generate precise, task-specific outputs:

- Text summaries with embedded visuals or audio context, enhancing clarity and reducing manual interpretation

- Annotated images or sensor charts that highlight areas of interest

- Mixed-media responses, like an email coupled with a chart snapshot or voice alert

This capability transforms the model from a text-only responder into a cross-channel assistant, capable of delivering actionable intelligence in the format that best suits the outcome.

5. Attention mechanisms & temporal modeling

Multimodal LLMs leverage attention across both modalities and time sequences:

- Self-attention dynamically determines which modality segments carry more weight for a given task.

- Temporal encoding preserves the order and duration of events, critical for workflows like machine maintenance histories or multi-step customer interactions.

Together, these enable the model to:

- Recognize escalation in sentiment or technical severity

- Connect events in time (e.g., maintenance logs, sensor spikes, technician notes)

- Build a holistic narrative that spans modalities and time slices

6. Scalability & enterprise‑grade infrastructure

These models are built for enterprise scale:

- Distributed GPU training creates robust embeddings across terabytes of multimodal training data

- Cloud-native inference pipelines (e.g., microservices, Kubernetes autoscaling) support real-time multimodal processing essential for 24/7 operations

- Batch processing frameworks handle large backlogs, such as scanning archived documents or media for compliance and trend insights

This layered enterprise-grade architecture shows how multimodal LLMs deliver tangible business value not just as a technical novelty, but as core components of intelligent, context-aware corporate systems.

How multimodal LLMs deliver real business value at enterprise scale

At enterprise scale, real value emerges through meaningful understanding. Multimodal LLMs elevate how businesses interpret and act on data across text, voice, visuals, and signals, within the natural flow of operations. These models enhance decision clarity, drive contextual automation, and align intelligence with how modern enterprises function. For forward-thinking business leaders, they represent not just innovation, but a pathway to more connected, adaptive, and high-impact outcomes.

1. Faster, context-aware decision-making

Business moves at the speed of context. Multimodal LLMs enable real-time decisions by interpreting disparate inputs emails, contracts, visual evidence, or customer voice recordings, in a single pass. Leaders gain actionable insights without waiting for manual reconciliation, enabling faster pivots in supply chain, finance, and customer operations.

2. Hyper-personalized customer experiences

Personalization at scale requires understanding more than customer profiles, it requires reading between the formats. Multimodal LLMs connect reviews, voice interactions, and product visuals to deliver relevance in every engagement. Enterprises can tailor digital experiences that feel intuitive, responsive, and brand-consistent across every channel.

3. Automation across complex workflows

Enterprise processes are inherently multimodal an order might involve PDFs, scanned barcodes, emails, and voice logs. These models automate cross-format tasks like compliance checks, document verification, and troubleshooting. The result is reduced manual effort, fewer errors, and more agile operations across departments.

4. Enhanced cross-functional collaboration

When insights are stuck in silos legal in PDFs, field ops in sensor logs, and customer feedback in audio clips, collaboration stalls. Multimodal LLMs break those barriers, delivering shared intelligence to cross-functional teams in a language they can act on. Marketing, IT, and compliance teams operate in sync, aligned by data, not just dashboards.

5. Breaking down data silos across the enterprise

Legacy architectures separate structured and unstructured data, making it difficult to extract enterprise-wide insights. Multimodal LLMs unify disparate sources from design images and technical documents to shipment videos enabling integrated analytics and strategic planning. Business leaders get a 360-degree view, not fragmented snapshots.

6. Improved risk management and compliance

From regulatory filings to compliance audits, risk today lives in multiple formats. Multimodal LLMs parse video footage, legal clauses, and even scanned signatures to flag non-compliance. Enterprises gain early-warning systems and audit-ready intelligence, reducing exposure and strengthening governance frameworks.

7. Cost-efficient and scalable intelligence

Scalability isn’t just about volume it’s about performance without cost spikes. Lightweight, modular architectures (like BLIP-2 or LLaVA) allow LLMs to operate efficiently across hybrid cloud infrastructure. Enterprises gain AI horsepower without overextending IT budgets, enabling broader use across teams and regions.

8. Future-ready infrastructure for AI transformation

The evolution of business demands AI that’s composable, extensible, and future-proof. Multimodal LLMs are architected for flexibility, able to integrate with ERP, CRM, and real-time data streams. As formats evolve (e.g., AR/VR, IoT telemetry), these models adapt, positioning your organization at the forefront of innovation.

Enterprise-ready multimodal LLMs leading the AI evolution

Enterprise-ready multimodal LLMs are changing how businesses make sense of the world. Today’s operations span far more than just text; they involve documents, conversations, images, dashboards, and signals. These models bring it all together, enabling deeper understanding and faster, context-aware decisions. But value doesn’t come from the model alone. It comes when it’s trained and tuned to your business, your data, your processes, your challenges. With the right partner, these models move beyond hype to become real drivers of impact streamlining workflows, elevating customer experiences, and enabling your teams to work smarter. That’s when AI truly becomes a strategic advantage.

GPT-4o (OpenAI)

GPT-4o delivers real-time understanding across modalities, combining text, image, and voice into a single experience. When adapted using enterprise-specific knowledge bases, customer interaction histories, or product datasets, it powers intelligent agents that speak, see, and reason with human-like precision. Enterprises use it to enable visual document Q&A, contextual chat copilots, and dynamic customer support systems that improve resolution speed and engagement quality.

Google Gemini

Gemini stands out for its deep integration with Google Cloud and its advanced reasoning across formats. When tuned with internal documentation, domain-specific content, and process flows, it enables intelligent enterprise search, automated document insights, and AI-generated recommendations that mirror real business logic. Its potential is unlocked when thoughtfully embedded into business operations, ensuring that intelligence flows where it matters most.

Flamingo (DeepMind)

Flamingo’s vision-language fusion excels in precision-driven sectors such as compliance and insurance. Trained on multimodal industry data, such as forms, scans, and case documentation, it automates complex interpretation tasks with auditability and context awareness. Fine-tuning with domain-specific datasets ensures that its outputs are not just intelligent, but also accurate, relevant, and regulation-ready.

LLaVA (Microsoft / Open community)

LLaVA’s open architecture is optimized for flexibility and innovation. Enterprises use it for tasks such as parsing visual documents, powering image-aware support agents, and enriching applications with visual intelligence. When trained with synthetic or domain-aligned multimodal datasets, LLaVA accelerates speed-to-value across diverse business functions, from operations to customer experience.

These models offer immense potential, but their value isn’t unlocked out of the box. It emerges when they are purposefully adapted to your unique context. Fine-tuning with your proprietary data, aligning with real workflows, and embedding them into your ecosystem requires more than access; it demands technical precision. When guided by experts who understand both the models and the business context, multimodal LLMs evolve into strategic capabilities, driving decisions, automation, and experiences that scale with confidence and clarity.

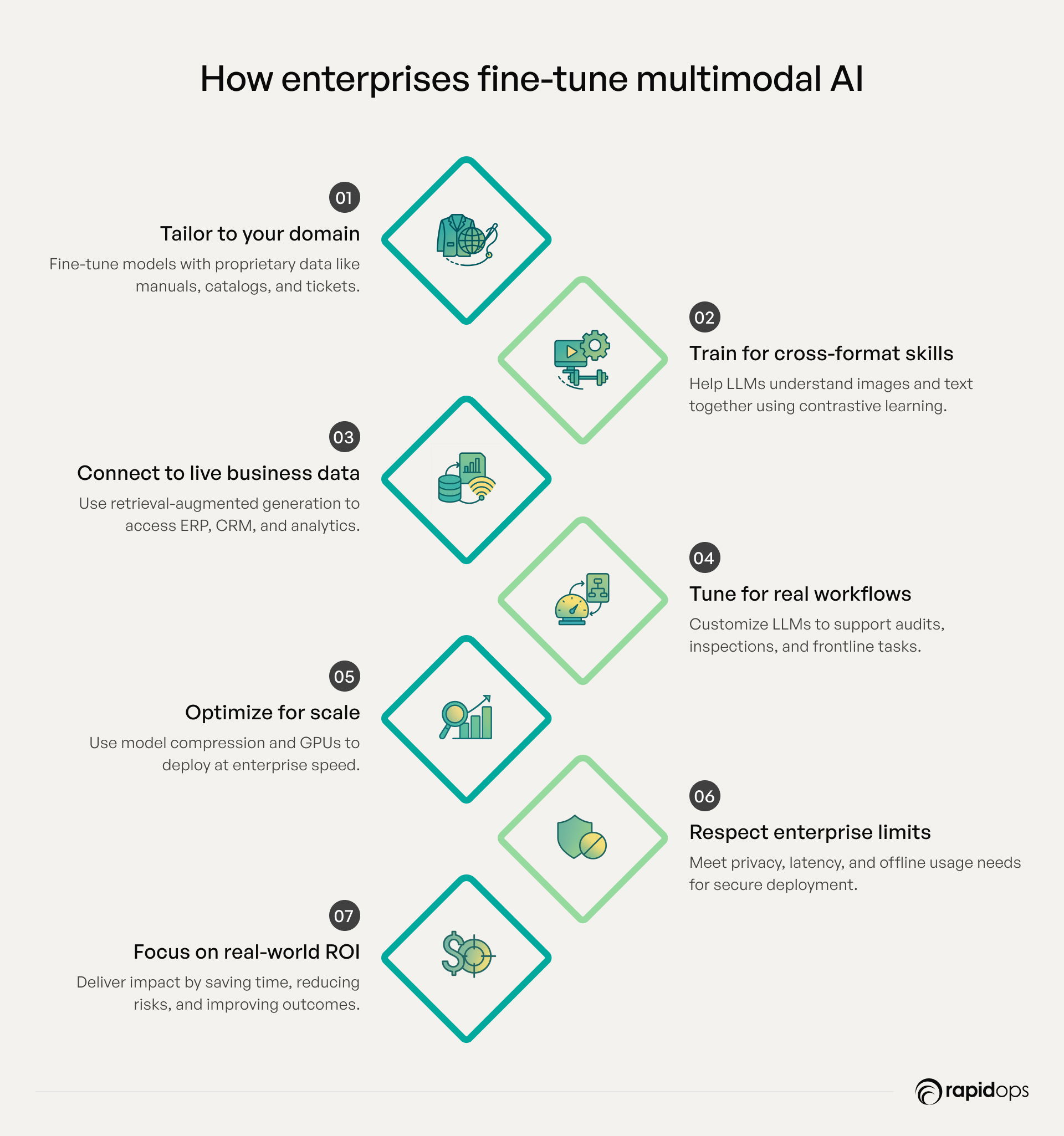

How multimodal LLMs are fine-tuned for enterprise use

In today’s fast-moving business environment, data comes in many forms: text, images, tables, videos, and system logs. Multimodal large language models (LLMs) can process all of these, but to make them work effectively for enterprise needs, they must be fine-tuned with care. This goes beyond technical training; it’s about aligning the model with the real-world context, systems, and challenges of your business.

1. Tailoring to your domain with enterprise-specific data

It starts by training the model on your organization’s unique data product catalogs, service records, visual assets, manuals, and more. For example, in retail, this might involve SKU imagery and product descriptions. In manufacturing, it could include machine logs or inspection images. This tuning ensures the model understands your operations, not just general language.

2. Teaching models to understand across formats

Using a technique called contrastive learning, models are trained to link different types of inputs, like a blueprint and its documentation or an image of a damaged product with a customer complaint. This cross-modal understanding enables smarter automation in quality checks, inventory control, or compliance.

3. Keeping responses updated with live business data (RAG)

Enterprises can’t rely on static models. Retrieval-Augmented Generation (RAG) connects the model to your live data, such as ERPs, supply chain dashboards, or internal wikis, so it can provide accurate, real-time answers. It’s like giving the model direct access to your knowledge systems.

4. Tuning for tasks that matter to your teams

Instruction tuning trains the model to handle real enterprise workflows, like generating safety reports, summarizing audits, or handling customer queries. When real data is scarce, synthetic examples (created in a controlled way) help make the model more robust without sacrificing accuracy.

5. Scaling with the right infrastructure

Deploying at scale requires the right foundation, cloud platforms, GPUs, and monitoring systems. Techniques like quantization and model distillation help run models efficiently at the edge on warehouse scanners, factory terminals, or sales apps without compromising performance.

6. Building for enterprise constraints

Enterprises need models that respect privacy, reduce latency, and work even with limited connectivity. That’s why smaller, fine-tuned models like LLaMA 2 or Mixtral are used to bring AI to the edge on-site, securely, and fast, whether in a distribution center or on a mobile device.

7. Focusing on measurable impact

Fine-tuning is valuable only if it delivers outcomes. Enterprises are using multimodal LLMs to reduce costs, boost efficiency, and improve decision-making. A fine-tuned model that can flag product inconsistencies or automate visual inspections can cut error rates by 20%, speed up operations, and free teams to focus on strategic work.

Enterprise readiness challenges before deploying multimodal LLMs

Deploying multimodal LLMs at scale isn’t just a technical upgrade it’s a foundational shift in how intelligence flows across the enterprise. While the promise is immense, realizing it requires navigating a new class of readiness challenges that span data, infrastructure, people, and governance. Here’s what business leaders must consider before bringing multimodal AI into production.

1. Model transparency and explainability

Multimodal LLMs combine language, vision, and other inputs into deeply layered inferences. But for regulated industries or high-stakes decisions, the “why” behind a prediction matters. Enterprises must evaluate how explainable model outputs are across modalities, whether diagnosing why a product image was flagged or understanding how a voice tone influenced a support escalation.

2. Bias and fairness across modalities

Bias doesn’t stop at text. Multimodal systems risk reinforcing inequality through visual, audio, or contextual misinterpretations. Leaders must implement fairness assessments not just on datasets, but on how modalities interact e.g., does the model favor certain demographics in image-based scenarios or misclassify speech patterns across accents?

3. Security, privacy, and cross-modal governance

More modalities mean more exposure. Voice inputs, scanned documents, and video data may carry sensitive PII, proprietary assets, or compliance flags. Enterprises need policies that govern multimodal data handling, model access, and audit trails especially where inputs span cloud, edge, or third-party platforms.

4. Multimodal data readiness and standardization

Multimodal models are only as good as the data they ingest. Scattered image formats, unlabeled audio files, or low-resolution scans can degrade performance. Standardizing input formats and embedding metadata across business units is essential to ensure consistency, context, and reliability in model training and inference.

5. Infrastructure and system integration challenges

Multimodal LLMs require robust infrastructure high-performance GPUs, distributed computing, and scalable pipelines. But the real challenge is integrating them into enterprise ecosystems: connecting with CRMs, ERPs, customer service platforms, and data lakes without adding latency or risk.

6. Organizational and talent readiness

Multimodal deployments often expose skills gaps. Success depends on cross-functional teams AI/ML engineers, data stewards, domain SMEs, and product leaders who can collectively shape prompts, refine outputs, and tune models. Upskilling and change leadership are as important as the model architecture itself.

7. Cost and performance tradeoffs

While multimodal LLMs unlock significant capabilities, they’re compute-intensive and can increase cloud costs. Enterprises must assess ROI by use case: where to deploy heavy models (e.g., compliance parsing), and where lightweight, task-specific agents might suffice.

8. Adoption, change management, and user trust

Even the most advanced multimodal system fails without adoption. Trust must be built through transparency, consistency, and co-creation. End users whether support agents, analysts, or executives need to see how the model complements their decisions, not replaces them.

For enterprises pursuing AI at scale, readiness isn’t optional. It’s the differentiator. Multimodal LLMs represent a powerful capability, but unlocking their full value begins with aligning the enterprise around clear governance, trusted data, infrastructure resilience, and human-centered design.

Strategic applications of multimodal LLMs in business

As business data grows in complexity and format, multimodal LLMs are emerging as strategic assets. They decode meaning across images, speech, video, and text, bridging silos and unlocking intelligence. Their value lies not in isolated tasks, but in how seamlessly they amplify core business workflows across industries like retail and manufacturing.

Modernizing knowledge management across the enterprise

Enterprise-wide knowledge management is another domain being transformed. Data lives in scattered formats, contracts in PDFs, training in videos, updates in email threads, and logs in machine-readable formats. Multimodal LLMs bring intelligence to this fragmentation. Leaders can now query across formats and receive synthesized insights. For example, an executive might ask, “What were the top risks flagged in last month’s compliance reports?” and receive a precise summary, drawing from scanned documents, email attachments, and related voice meeting notes. This dramatically enhances decision speed and accuracy while freeing up teams from manual information gathering.

- Search and summarize insights across documents, videos, and logs.

- Boost executive productivity with multimodal knowledge access.

- Turn scattered content into an enterprise-wide strategic advantage.

Transforming retail with immersive, context-aware experiences

Retail, by contrast, is one of the most multimodal-intensive industries in existence. Shoppers engage with brands through product images, descriptions, voice assistants, social media posts, customer reviews, and more. Understanding this rich stream of inputs and responding in context requires an AI agent's model that can think and interact like a human.

That’s where multimodal LLMs shine. In retail, they are powering smart search that interprets a photo or voice description to recommend the right product, style, or color. They’re enabling immersive shopping experiences through virtual try-ons, product comparison via voice and image input, and AI chat agents that can see the customer’s screenshot and respond with contextual support.

Even post-purchase experiences benefit: LLMs parse visual reviews and sentiment cues to flag product quality issues, optimize inventory presentation, or detect brand risk before it escalates. When tailored to a brand’s data and visual identity, these models don’t just automate, they elevate the entire customer journey with precision.

- Enable voice-and-vision-powered product discovery and search.

- Deliver immersive virtual try-ons and contextual chat support.

- Analyze multimodal feedback to refine product presentation and reduce returns.

Revolutionizing manufacturing and operational intelligence

In manufacturing, operations generate a massive volume of multimodal data every second from real-time telemetry streaming from machines to thermal imaging inspections, technician notes, maintenance videos, and scanned repair logs.

Traditionally, these signals have been siloed, analyzed separately, or overlooked entirely due to technical limitations. Multimodal LLMs are changing that. By integrating these signals into a unified understanding, manufacturers can move beyond reactive maintenance toward predictive and even autonomous systems.

For example, when a machine anomaly is detected via sensor data, the model can simultaneously analyze visual inspection images and match historical technician logs, identifying failure patterns that would otherwise be missed. It can even summarize operational events and recommend the most effective maintenance strategy, minimizing downtime. This depth of contextual understanding helps production lines run more smoothly, ensures traceability for audits, and equips field teams with instant answers derived from years of operational history.

- Improve predictive maintenance using combined sensor and visual data.

- Summarize technician logs and surface high-risk failure patterns.

- Enhance operational continuity and audit readiness.

By applying multimodal LLMs, enterprises move beyond automation to build intelligent ecosystems where data from every format works together. Whether it’s smarter operations, richer customer experiences, or faster decisions, these models unlock deeper understanding and real business outcomes by connecting the dots across modalities.

From possibility to purpose with multimodal LLMs

By now, you’ve seen how multimodal LLMs are reshaping how enterprises interpret data, make decisions, and deliver smarter customer experiences by bringing together language, visuals, and real-time context into a unified intelligence layer. But unlocking their full value isn’t just about adopting the technology. It’s about aligning it with your unique data, systems, and business goals so it works in your world.

At Rapidops, we bring over 16 years of experience helping enterprises evolve from inconsistent data flows and siloed decision-making to AI-driven systems that deliver clarity, speed, and strategic impact. Whether it’s enabling intelligent search across documents and visuals, automating decisions with real-time context, or elevating customer journeys through multimodal interactions, we’ve done it, and we understand what it takes to make it real.

Curious how multimodal LLMs could drive real impact in your business?

Book a free consultation with one of our multimodal LLM specialists. Our expert will help you identify high-impact use cases, assess your current capabilities, and outline next steps to move forward with clarity.

Frequently Asked Questions

What is the application of multimodal LLMs?

Multimodal LLMs are used to process and reason across multiple data types, text, images, audio, video, and sensor signals in a unified manner. This enables applications such as visual customer support, intelligent document search, predictive maintenance, multimodal analytics, and immersive retail experiences. When integrated into enterprise workflows, they unlock new forms of automation, improve decision speed, and enhance operational intelligence across departments.

Can multimodal LLMs understand images and text together?

Yes. Multimodal LLMs are designed to jointly interpret and generate meaning from inputs like images and text. For example, they can analyze a product image and a customer review simultaneously, or extract information from a scanned invoice and accompanying email. This combined reasoning enables more contextual responses, richer insights, and more human-like interactions in business applications.

What business problems can multimodal LLMs help solve?

Multimodal LLMs help solve complex business problems that involve diverse formats of data. These include automating support workflows that involve voice, screenshots, and text; improving inventory accuracy with visual product verification; enhancing knowledge retrieval from PDFs, videos, and reports; and enabling predictive maintenance by fusing sensor data with technician logs. Their value lies in unifying fragmented enterprise intelligence into streamlined, decision-ready insights.

Do multimodal LLMs require completely new data infrastructure?

Not necessarily. While high-quality, unified data improves outcomes, multimodal LLMs can often be integrated into existing enterprise architectures. Many platforms are built to work with current data lakes, warehouses, or lakehouses, provided the data is properly labeled, accessible, and governed. A thoughtful implementation strategy can bridge legacy systems and modern AI frameworks without requiring a complete overhaul.

How do businesses measure ROI from multimodal LLM implementation?

ROI is typically measured by the operational efficiencies and business outcomes these models enable, such as faster support resolution, reduced manual effort, improved decision speed, higher customer engagement, and lower error rates. Additional value can be realized through enhanced scalability, regulatory compliance, and time saved across content-heavy processes. Metrics should align with the business use case, from productivity gains to cost savings and customer satisfaction.

Rahul Chaudhary

Content Writer

With 5 years of experience in AI, software, and digital transformation, I’m passionate about making complex concepts easy to understand and apply. I create content that speaks to business leaders, offering practical, data-driven solutions that help you tackle real challenges and make informed decisions that drive growth.

What’s Inside

- Defining multimodal LLMs, AI that understands beyond text

- Why businesses need multimodal LLMs, not just text-based models

- How multimodal LLMs work in enterprise environments

- How multimodal LLMs deliver real business value at enterprise scale

- Enterprise-ready multimodal LLMs leading the AI evolution

- How multimodal LLMs are fine-tuned for enterprise use

- Enterprise readiness challenges before deploying multimodal LLMs

- Strategic applications of multimodal LLMs in business

- From possibility to purpose with multimodal LLMs

Let’s build the next big thing!

Share your ideas and vision with us to explore your digital opportunities

Similar Stories

- AI

- 4 Mins

- September 2022

- AI

- 9 Mins

- January 2023

Receive articles like this in your mailbox

Sign up to get weekly insights & inspiration in your inbox.