In the rapidly evolving world of digital technology, Generative AI has emerged as a groundbreaking force, offering new horizons for innovation.

Whether you're creating realistic human faces for a video game, generating personalized music, or writing unique texts, Generative AI can revolutionize the way you approach your projects.

This blog post is a guide to understanding and implementing a Generative AI framework. We will cover its principles, walk through a step-by-step process for creating a framework, discuss common challenges, and look ahead at future trends in this field.

By the end, you will have the knowledge and tools to apply Generative AI to your next digital project.

Let's dive in!

What is generative AI?

Generative AI is a branch of artificial intelligence that creates content, be it images, music, text, or even videos. The term 'generative' refers to the system's ability to generate new outputs from patterns learned in its training data.

Instead of being explicitly programmed to complete a task, generative AI models learn patterns from data and use them to produce novel content: data that is similar, but not identical, to the training data.

How it works

Generative AI models work by understanding and learning the distribution and patterns in the provided dataset. Once trained, they can sample from this learned distribution to produce new data points.

These models are probabilistic: they assign a likelihood to each possible output and then sample from the likely candidates (or pick the single most likely one), which is why the same model can produce different results on different runs.
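To make "learn a distribution, then sample from it" concrete, here is a minimal sketch in Python. It uses a character-level bigram model, which is far simpler than the neural networks used in practice. The function names `train_bigram` and `sample` and the toy corpus are assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for current, following in zip(text, text[1:]):
        counts[current][following] += 1
    return counts


def sample(counts, start, length, rng):
    """Generate text by repeatedly sampling the next character
    in proportion to how often it followed the current one."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # dead end: nothing ever followed this character
            break
        chars = list(followers)
        weights = [followers[c] for c in chars]
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


corpus = "the cat sat on the mat. the rat sat on the cat."  # toy training data
model = train_bigram(corpus)
generated = sample(model, "t", 20, random.Random(0))
print(generated)
```

Every pair of adjacent characters in the output was seen in the corpus, yet the output as a whole need not appear in it. That is the "similar but not identical" behavior in miniature.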

Different types of generative AI

There are several generative models, each with unique mechanisms and applications. Here are the most common ones:

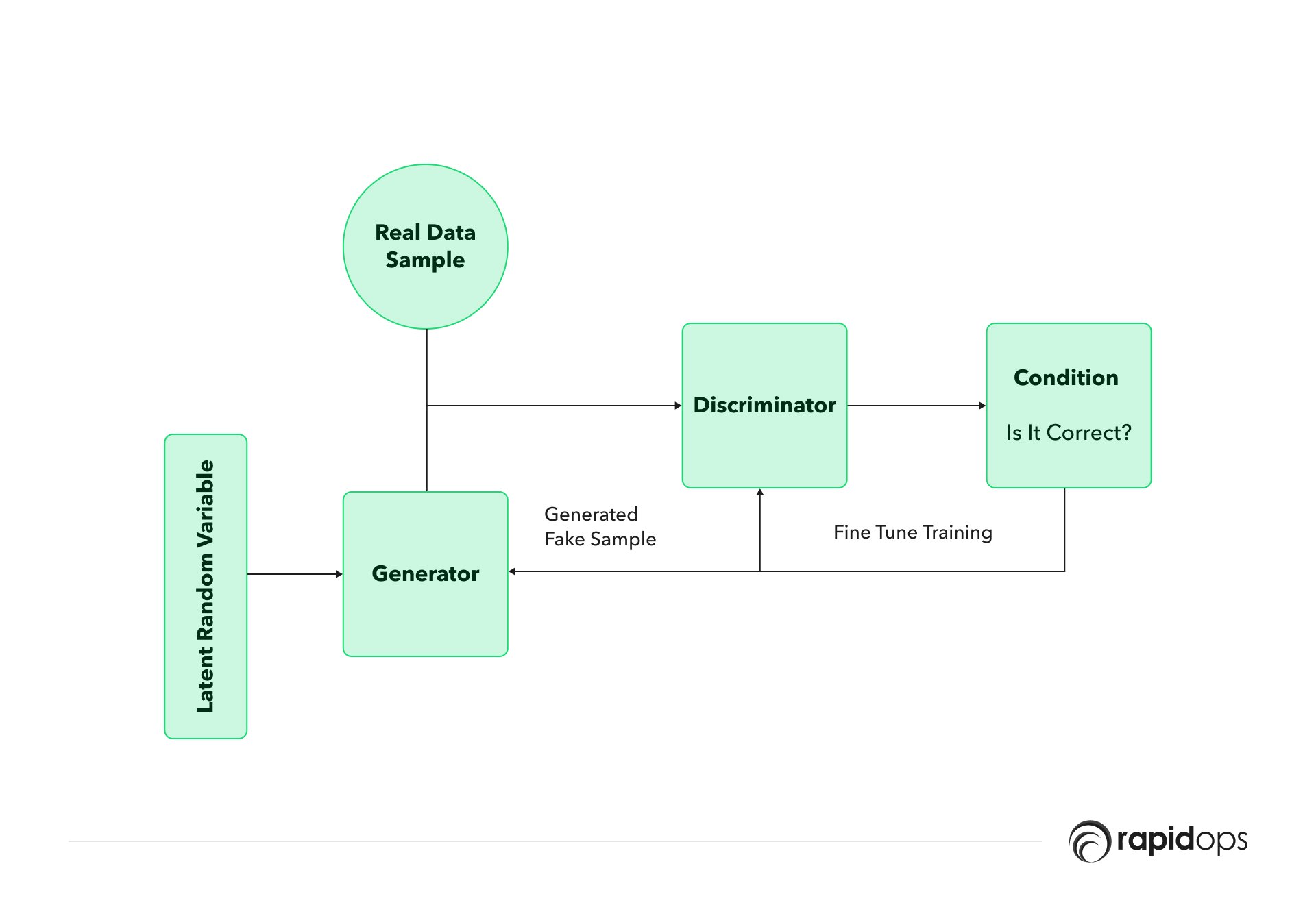

Generative Adversarial Networks (GANs)

GANs consist of two neural networks: a generator network that creates new data instances and a discriminator network that evaluates the generated data for authenticity.

They operate in a competitive setting where the generator tries to fool the discriminator, and the discriminator tries to classify real versus generated instances correctly.
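This competition is usually written as a minimax game. In the original GAN formulation, with notation introduced here for illustration ($G$ for the generator, $D$ for the discriminator, $x$ for real data, and $z$ for random noise fed to the generator), the objective is:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]
$$

The discriminator tries to maximize $V$ by scoring real samples near 1 and generated samples near 0, while the generator tries to minimize it by making $D(G(z))$ as close to 1 as possible.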

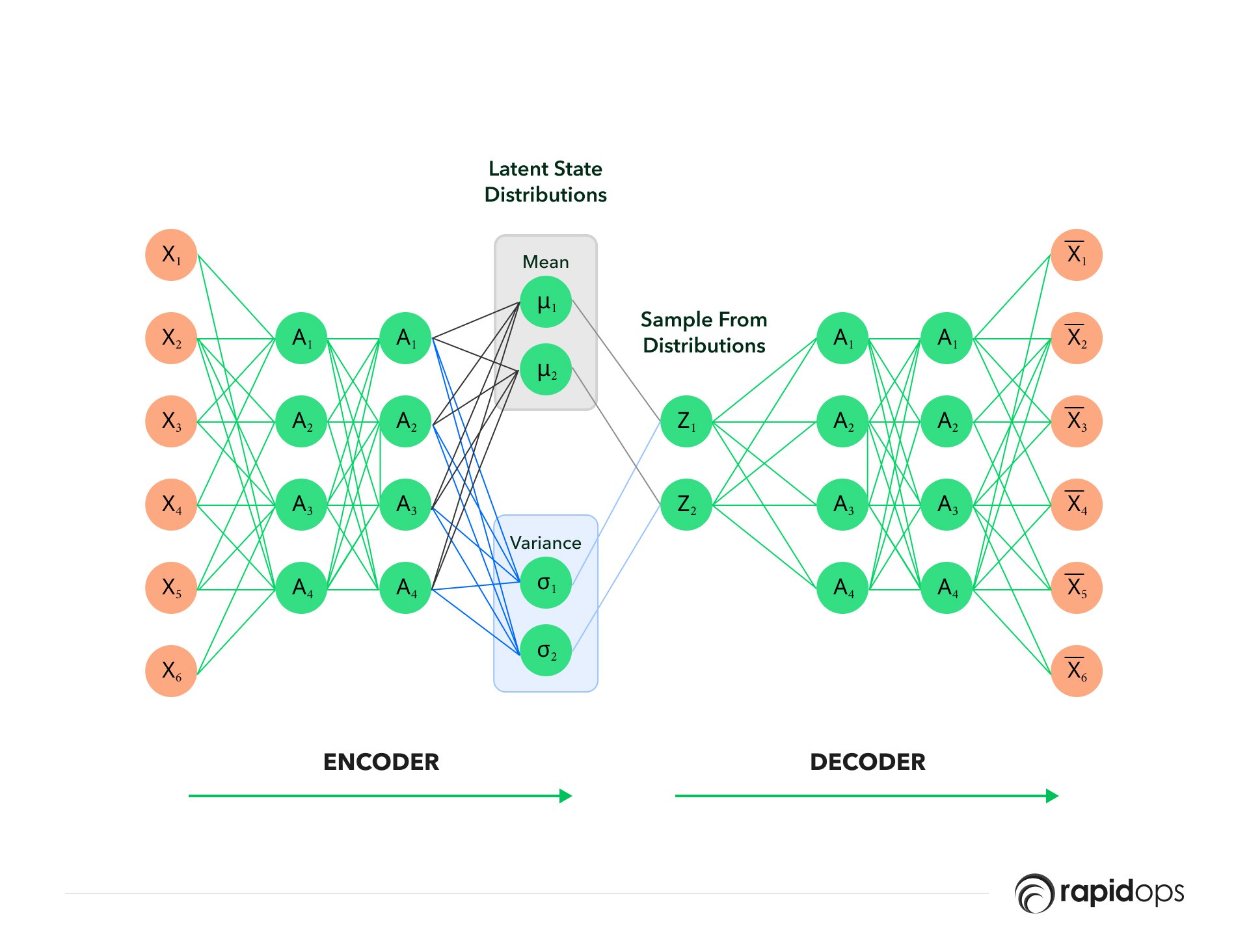

Variational Autoencoders (VAEs)

VAEs are a type of autoencoder with added constraints on the encoded representations of the input. They are designed to generate new data by changing the encoded representations slightly, which in turn, changes the reconstructed data.
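The "added constraints" can be expressed as a training objective. In the standard VAE formulation, using notation introduced here for illustration (an encoder $q_\phi(z \mid x)$, a decoder $p_\theta(x \mid z)$, and a prior $p(z)$ that is typically a standard normal distribution), the model maximizes:

$$
\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}[\log p_\theta(x \mid z)] - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
$$

The first term rewards accurate reconstruction. The second term is the constraint: it keeps the encoded representations close to the prior, so that nearby points in the encoded space decode to similar, plausible data.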

Applications and importance of Generative AI in today's world

Generative AI has extensive applications in the digital world. It's used to generate synthetic media (deepfakes), design websites, create artwork, write texts, and even generate video game levels.

It's pivotal in areas where creating large amounts of content is required but practically challenging.

Generative AI can also fill gaps in datasets, create realistic virtual reality environments, and personalize user experiences. In healthcare, it can simulate patient data while maintaining privacy.

Its importance lies in its ability to automate and innovate content creation, enhancing productivity and creativity.

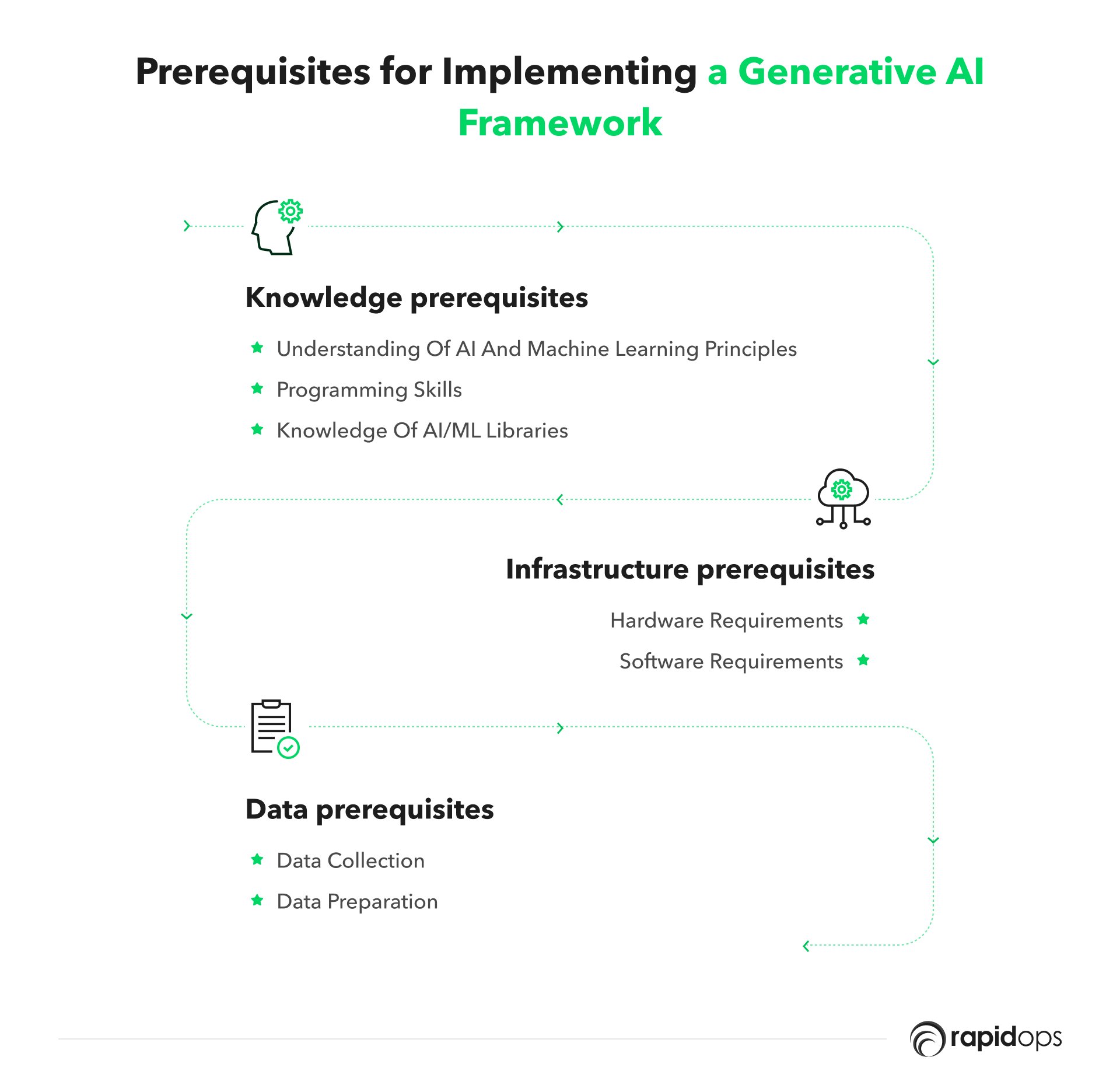

Prerequisites for implementing a generative AI framework

Embarking on a journey to create a Generative AI framework requires more than just an idea or a problem to solve.

Like all sophisticated technological projects, there are prerequisites, or foundations, that you need to lay for a successful implementation. These prerequisites span knowledge, infrastructure, and data.

1. Knowledge prerequisites

Understanding of AI and machine learning principles

At the heart of Generative AI lies Artificial Intelligence and Machine Learning.

Therefore, a solid understanding of these fields is fundamental. This includes familiarity with the basics of AI, how machine learning algorithms work, and an understanding of both supervised and unsupervised learning.

More specifically, knowledge about neural networks and how they are structured and trained is crucial.

Programming skills

As with most areas of AI, implementing a Generative AI framework requires a level of proficiency in programming. Python is the most commonly used language in AI due to its simplicity, flexibility, and the extensive libraries it offers for AI and Machine Learning.

Thus, understanding Python and being comfortable writing Python scripts is vital.

Knowledge of AI/ML libraries

Libraries are pre-written code that you can use to simplify the programming process.

TensorFlow and PyTorch are two of the most popular libraries used for AI and machine learning tasks. Knowledge of these libraries is essential for creating and training your Generative AI models.

TensorFlow, developed by Google, is especially popular for tasks involving deep learning and neural networks. PyTorch, originally developed by Facebook's AI research lab (now part of Meta), is known for its simplicity and ease of use, especially for prototyping and experimentation.

2. Infrastructure prerequisites

Hardware requirements

Training Generative AI models can be computationally intensive, so a high-performance computer is beneficial. In particular, a capable GPU (Graphics Processing Unit) is often necessary, because the parallel computing power of GPUs significantly speeds up model training.

Software requirements

Apart from the necessary programming languages and libraries, being familiar with development tools such as Jupyter Notebook, PyCharm, or Visual Studio Code is beneficial.

In addition, understanding how to use cloud-based platforms like Google Colab or Amazon SageMaker, which offer high computational power and pre-configured environments, can also be very advantageous.

3. Data prerequisites

Data collection

Generative models learn from data, so having a robust dataset is critical. The kind of data you need depends on what you want your model to generate. For instance, if you're training a model to generate text, you need a large corpus of text data.

Data preparation

Once collected, data often needs to be pre-processed before training. This could involve cleaning the data (removing irrelevant information), normalizing it (scaling numerical data), or encoding it (converting categories into numerical form).

The goal is to convert raw data into a form from which the model can easily learn.
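The three pre-processing operations above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production pipeline: the helper names `min_max_scale` and `one_hot_encode` and the sample values are assumptions, and real projects typically rely on a data library for these steps.

```python
def min_max_scale(values):
    """Rescale numbers linearly so the smallest becomes 0.0 and the largest 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:  # avoid division by zero when all values are equal
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot_encode(labels):
    """Map each category to a vector with a single 1 at the category's index."""
    categories = sorted(set(labels))
    index = {c: i for i, c in enumerate(categories)}
    return [[1 if index[label] == i else 0 for i in range(len(categories))]
            for label in labels]


raw_rows = [" 20 ", "35", "", "50"]                 # hypothetical raw field values
cleaned = [int(r) for r in raw_rows if r.strip()]   # cleaning: drop empty entries
scaled = min_max_scale(cleaned)                     # normalizing
encoded = one_hot_encode(["jazz", "rock", "jazz"])  # encoding categories

print(cleaned)  # [20, 35, 50]
print(scaled)   # [0.0, 0.5, 1.0]
print(encoded)  # [[1, 0], [0, 1], [1, 0]]
```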

Remember that developing a Generative AI framework is a process. While the prerequisites might seem daunting, you can always start with the basics and gradually build up your skills and resources.

Various online courses and resources are available to help you master AI principles, programming skills, and library usage. As for hardware, consider cloud platforms that offer resources as needed.

Lastly, when it comes to data, always ensure it is ethically sourced and relevant to your project. Once these prerequisites are in place, you'll be well-positioned to implement a successful Generative AI framework.

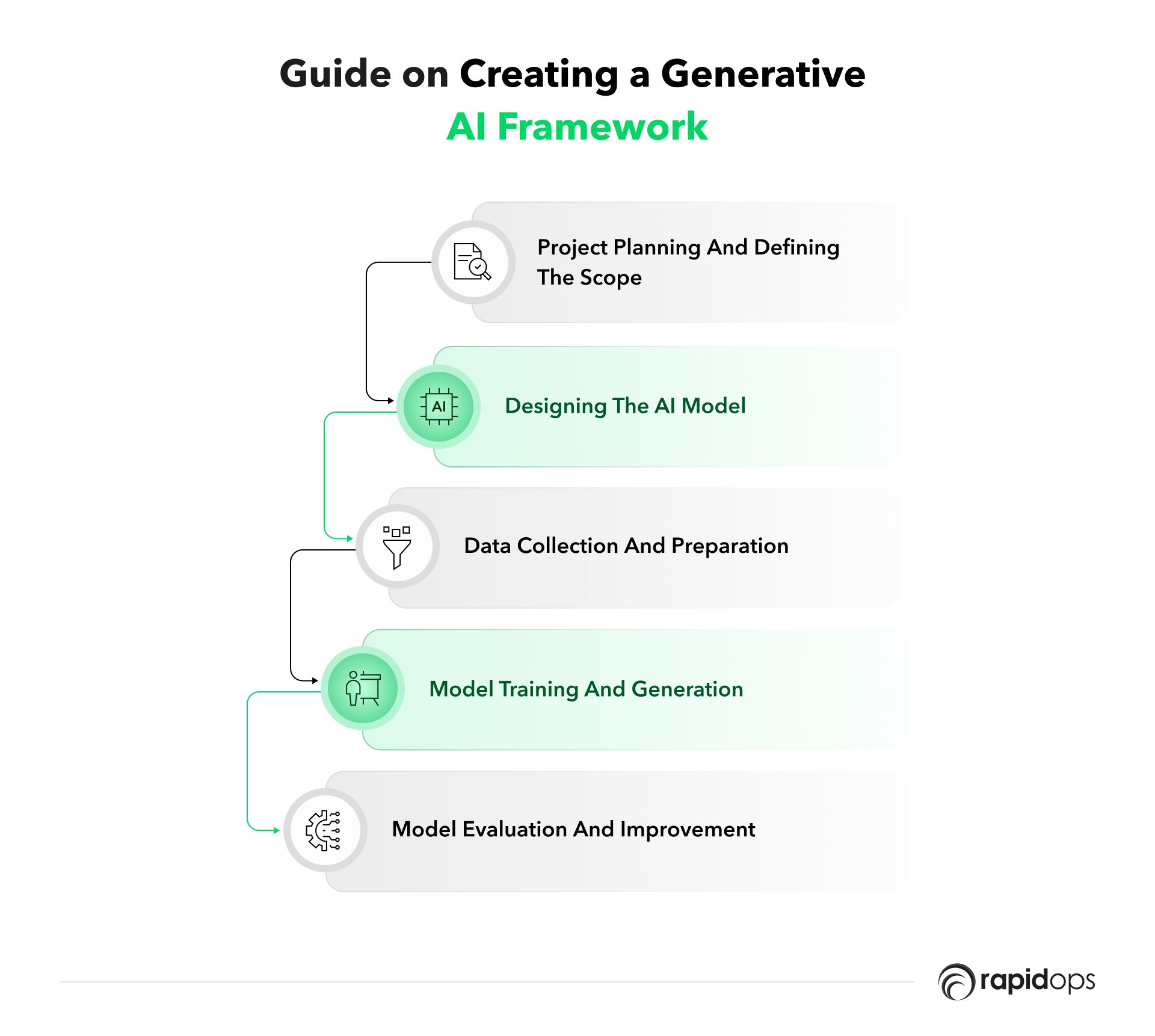

Step-by-step guide on creating a generative AI framework

Building a generative AI framework involves a series of steps that transform your initial idea into a functioning model capable of generating new data.

Each step in the process is crucial and builds upon the previous ones, starting with project planning and ending with model evaluation and improvement.

Step 1: Project planning and defining the scope

The first step in creating a Generative AI framework is planning your project and defining its scope. This involves two key aspects:

Defining the problem statement

What is it that you want your model to generate? It could be anything from text to music to images. It's crucial to be clear about your end goal, as it will guide all subsequent decisions and actions in the project.

Identifying the resources required

Identify the resources you'll need based on the problem statement. This includes hardware requirements (like GPU for training), software libraries (like TensorFlow or PyTorch), and data needed for training. You will also need to consider the skillset required, whether it's available in-house or if external expertise is needed.

Step 2: Designing the AI Model

Deciding on the type of generative model

Based on your project's needs, decide on the type of generative model. For instance, if you want to generate new images, you might opt for a Generative Adversarial Network (GAN). A model like GPT (Generative Pre-trained Transformer) might be more suitable if you're dealing with text.

Designing the architecture

Your model's architecture will depend on the chosen type. For instance, a GAN has two parts: a generator and a discriminator. Each part will need its own neural network. These networks will have their own structure (layers and neurons) and parameters (weights and biases).
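To get a feel for how structure (layers and neurons) determines the number of parameters (weights and biases), the sketch below counts the parameters of two fully connected networks. The layer sizes are hypothetical and chosen only for illustration. Real GANs often use convolutional layers, whose parameter counts are computed differently.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between the two layers
        total += n_out         # one bias per neuron in the receiving layer
    return total


generator_layers = [100, 128, 784]    # hypothetical: noise vector -> hidden -> 28x28 image
discriminator_layers = [784, 128, 1]  # hypothetical: image -> hidden -> real/fake score

gen_params = count_parameters(generator_layers)
disc_params = count_parameters(discriminator_layers)
print(gen_params)   # 114064
print(disc_params)  # 100609
```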

Step 3: Data Collection and Preparation

The next step is preparing your data for the model.

Methods for collecting data

The method for data collection depends on the nature of your project. If you're creating a text-generation model, you might collect data from books, articles, or the internet.

For an image generation model, you might use existing image datasets or scrape images from the internet. Always ensure your data is ethically sourced and complies with all relevant laws and regulations.

Pre-processing and cleaning the data

Once you have your data, it must be pre-processed and cleaned. This might involve removing irrelevant information, fixing errors, or normalizing values.

Text data might need to be tokenized (breaking text down into smaller pieces, like words or sentences), and numerical data might need to be normalized.
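As a minimal sketch of word-level tokenization, the example below splits text into lowercase word tokens and then maps each token to an integer id, which is the form a model actually consumes. The function names and the regular expression are assumptions for illustration. Production systems usually use subword tokenizers that ship with the model.

```python
import re


def tokenize_words(text):
    """Lowercase the text and split it into word tokens, dropping punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_vocab(tokens):
    """Assign each distinct token an integer id in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


tokens = tokenize_words("The cat sat. The cat slept!")
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]

print(tokens)  # ['the', 'cat', 'sat', 'the', 'cat', 'slept']
print(ids)     # [0, 1, 2, 0, 1, 3]
```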

Dividing data into training and testing sets

The final step in data preparation is dividing your data into training and testing sets. The training set is used to train your model, while the testing set is used to evaluate it.

A common ratio is 80:20 (training: testing).
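A split like this can be sketched as follows. The helper name `train_test_split` and its default arguments are assumptions for illustration. The data is shuffled first so that any ordering in the original dataset does not leak into the split, and a fixed seed keeps the split reproducible.

```python
import random


def train_test_split(items, train_fraction=0.8, seed=42):
    """Shuffle a copy of the items and split it into training and testing lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


samples = list(range(10))  # stand-in for ten data points
train, test = train_test_split(samples)
print(len(train), len(test))  # 8 2
```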

Step 4: Model Training and Generation

Once your data is ready and your model is designed, the next step is to train your model.

Detailed process of training the AI Model

Training involves feeding your data through the model, adjusting the model's parameters based on the output, and repeating this process many times.

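For example, in a GAN, the generator creates a data instance that the discriminator evaluates. Based on the discriminator's evaluation, the generator's parameters are adjusted to improve its output, while the discriminator's parameters are adjusted to make it better at telling real data from generated data.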
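The feed, adjust, and repeat cycle is the same for any model trained by gradient descent. The sketch below is deliberately not a GAN: it fits a single parameter, `mu`, to a handful of numbers so that the loop itself is easy to see. The function name, learning rate, and data are assumptions for illustration.

```python
def train_mean(data, learning_rate=0.1, epochs=200):
    """Fit a single parameter `mu` by gradient descent on mean squared error."""
    mu = 0.0  # initial guess for the parameter
    for _ in range(epochs):
        # Gradient of mean((x - mu)^2) with respect to mu.
        grad = sum(-2.0 * (x - mu) for x in data) / len(data)
        # Adjust the parameter by stepping against the gradient.
        mu -= learning_rate * grad
    return mu


data = [4.8, 5.1, 5.0, 4.9, 5.2]
mu = train_mean(data)
print(round(mu, 4))  # 5.0
```

A real generative model has millions of parameters instead of one, and a framework such as TensorFlow or PyTorch computes the gradients automatically, but the structure of the loop is the same.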

Fine-tuning and optimizing the model

As your model trains, monitor its performance and make adjustments as necessary. This might involve changing the learning rate, altering the model architecture, or even changing the training data.

Generating new content with the trained model

Once your model is trained and fine-tuned, you can use it to generate new data. This might involve feeding a seed value into your model and having it generate data based on that seed.
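The role of the seed can be illustrated with a stand-in generator. The `generate` function below is hypothetical and only turns a seed into random numbers. In a real model those numbers would be the noise vector or sampling randomness that the trained network transforms into content.

```python
import random


def generate(seed, length=5):
    """Stand-in for a trained generator: maps a seed to a deterministic output."""
    rng = random.Random(seed)
    return [round(rng.gauss(0.0, 1.0), 3) for _ in range(length)]


a = generate(seed=7)
b = generate(seed=7)  # same seed: identical output
c = generate(seed=8)  # different seed: different output
print(a == b, a == c)
```

Fixing the seed makes a result reproducible, while varying it is how you ask the same model for a different sample.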

Step 5: Model Evaluation and Improvement

The final step in the process is evaluating and improving your model.

Evaluating model performance

There are various ways to evaluate a generative model's performance. One common method is to use the testing set: the model's generated outputs are compared against held-out examples it never saw during training, to check that they resemble real data without simply copying the training set. Other methods might involve visual inspection (for images) or reading and grading (for text).

Iterating and Improving the Model

You might identify areas where the model could improve based on the evaluation. You then return to previous steps, make adjustments, and repeat the process. This could involve collecting more data, changing the model architecture, or adjusting the training process.

Creating a generative AI framework is complex, but breaking it down into these steps makes it manageable. Remember, creating a generative model is an iterative process.

Don't be discouraged if your first attempt isn't perfect. Each iteration will bring improvements and bring you closer to your goal.

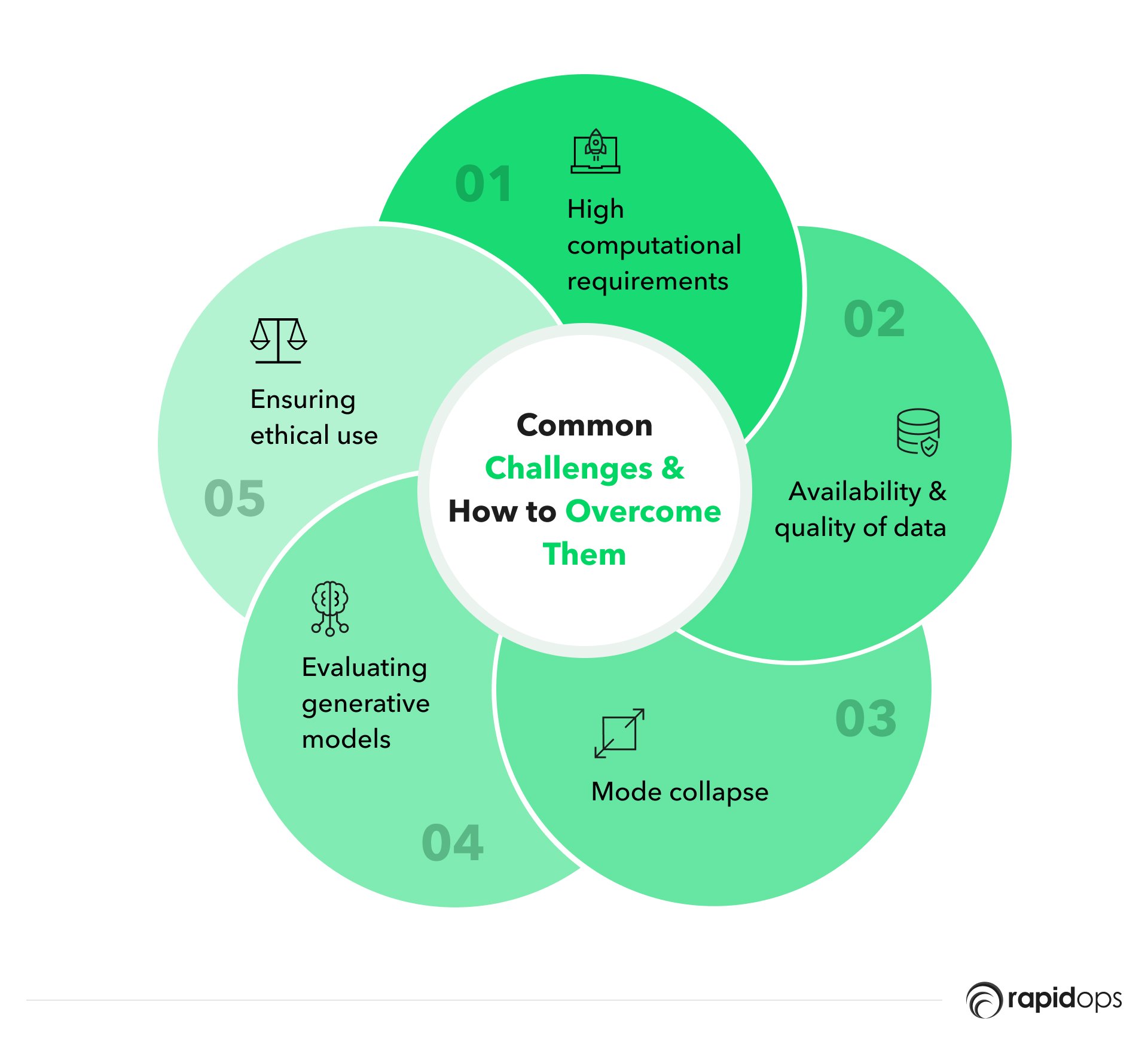

Common challenges and how to overcome them

Developing a Generative AI framework, like any AI endeavor, comes with its fair share of challenges. However, understanding these challenges and knowing how to address them can make the development process smoother and more effective.

1. High computational requirements

Generative models, especially deep learning-based ones like GANs or Transformers, can be computationally intensive. They require a lot of resources, both in terms of memory and computational power. This makes it challenging for individuals or organizations with limited resources.

Solution

One approach to mitigating this issue is to leverage cloud-based solutions that provide access to high-performance GPUs and TPUs.

Platforms such as Google Cloud, AWS, and Azure offer machine learning services that can be utilized for this purpose.

Using these platforms, you can rent the required computational power without investing heavily in hardware.

2. Availability and quality of data

Generative AI models are data-hungry. The training data's quality and diversity directly impact the model's performance. Finding enough quality data can often be a significant challenge.

Solution

While creating a universal solution for data-related issues is hard, a few strategies can help. One is to use data augmentation techniques, which artificially increase the size and diversity of your dataset.

Another approach is to leverage transfer learning, where a pre-trained model trained on a large, diverse dataset is used and fine-tuned for your specific task. There are also numerous publicly available datasets for various domains that you can use as a starting point.
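As a minimal sketch of the data augmentation idea mentioned above, the example below doubles a tiny image dataset by adding a mirrored copy of each image. Images are represented as plain lists of rows, and the function names are assumptions for illustration. Image libraries offer many more transformations such as rotation, cropping, and color shifts.

```python
def flip_horizontal(image):
    """Mirror a 2D image (a list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def augment(dataset):
    """Return the original images plus a mirrored copy of each one."""
    return dataset + [flip_horizontal(img) for img in dataset]


image = [[0, 1, 2],
         [3, 4, 5]]
augmented = augment([image])
print(len(augmented))  # 2
print(augmented[1])    # [[2, 1, 0], [5, 4, 3]]
```

Mirroring is only appropriate when a flipped example is still valid for the task. It works for most photographs, for instance, but not for images that contain text.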

3. Mode collapse

Mode collapse is a problem particularly associated with GANs, where the generator starts to produce limited varieties of samples, or even the same sample, over and over again, regardless of the input.

Solution

Various strategies have been proposed to mitigate mode collapse. One popular solution is to use different forms of GANs, such as Wasserstein GANs, whose modified training objective was designed with this issue in mind, or Conditional GANs, which steer the generator with extra input such as class labels.

Implementing these more sophisticated versions of GANs can help stabilize the training process and improve the variety of the generated samples.

4. Evaluating generative models

Unlike discriminative models, where you can use measures like accuracy or F1-score to evaluate the model, evaluating generative models is more challenging.

It can be difficult to quantify how good the generated data is, particularly if there's no clear 'ground truth' to compare against.

Solution

There are several metrics proposed to evaluate the quality of generative models, including Inception Score (IS), Fréchet Inception Distance (FID), and Precision and Recall for Distributions (PRD).

However, evaluation often also involves human judgment, especially when the generated output is intended for human consumption, like music, text, or images.
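To give a sense of what a distance-based metric such as FID measures, the sketch below computes the Fréchet distance between two sets of numbers, treating each set as a one-dimensional Gaussian. This is a simplification made for illustration: the actual FID applies the multivariate version of the same formula to image features extracted by an Inception network. The function name and the sample data are assumptions.

```python
import statistics


def frechet_distance_1d(real, generated):
    """Squared Fréchet distance between 1D Gaussians fitted to two samples:
    (difference of means)^2 + (difference of standard deviations)^2."""
    mu_r, mu_g = statistics.mean(real), statistics.mean(generated)
    sd_r, sd_g = statistics.pstdev(real), statistics.pstdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


real = [1.0, 2.0, 3.0, 4.0, 5.0]
close = [1.1, 2.1, 3.1, 4.1, 5.1]  # hypothetical output of a good generator
far = [6.0, 7.0, 8.0, 9.0, 10.0]   # hypothetical output of a poor generator

d_close = frechet_distance_1d(real, close)
d_far = frechet_distance_1d(real, far)
print(d_close < d_far)  # True
```

A lower score means the generated distribution sits closer to the real one, which is why FID is reported as "lower is better".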

5. Ensuring ethical use

Generative AI opens up the potential for misuse, including deepfakes, generating fake news, and more. These ethical considerations are a significant challenge in the deployment of generative models.

Solution

Establishing clear ethical guidelines for generative AI use and designing models and systems that promote transparency and accountability is crucial. Furthermore, developers and organizations can engage with regulatory bodies and contribute to the conversation around the responsible use of AI.

Future trends in generative AI

The future of Generative AI is filled with immense possibilities. As we continue to innovate and make technological breakthroughs, we are likely to witness some key trends that will revolutionize various sectors.

1. Improved content generation

Advances in natural language processing

Generative models are at the forefront of advances in natural language processing (NLP). Models like GPT-3 by OpenAI have shown an impressive understanding of human language, being able to generate high-quality text that's almost indistinguishable from that written by humans.

This technology is improving rapidly, and future advancements will likely include the ability to understand context more accurately, handle more complex instructions, and generate content that's even more coherent and tailored to the target audience.

This can revolutionize industries like marketing, journalism, customer service, and even entertainment, offering more personalized and engaging content.

Enhanced image and video generation capabilities

The future will likely see more sophisticated image and video generation capabilities. We can expect improvements in the quality and diversity of generated visuals, making them increasingly indistinguishable from real images and videos.

Advancements in models like GANs are expected to lead to more realistic facial and object generation, improved image completion (generating missing parts of images), and the ability to generate complex scenes from simple descriptions.

This could have significant implications for film, gaming, advertising, and design industries.

2. Personalized AI assistants

Customizable and context-aware virtual assistants

AI assistants are becoming integral to our lives, helping us manage tasks, answer questions, and entertain us. Generative AI will likely be crucial in making these assistants more personalized.

By learning from user interactions and preferences, these models can generate responses and suggestions tailored to the individual user, making them more helpful and engaging.

This includes understanding context, personal preferences, and even the user's emotional state.

Integration with various devices and platforms

As technology becomes more integrated, AI assistants will be available across a growing range of devices and platforms. This means they'll not just be confined to our phones or smart speakers but also be part of our cars, household appliances, and workplaces.

Generative AI will be at the core of these developments, providing the intelligence that makes these assistants truly helpful and intuitive.

3. More realistic and interactive VR/AR experiences

Real-time generation of immersive virtual and augmented reality worlds

Generative AI will play a key role in developing virtual reality (VR) and augmented reality (AR). These models can be used to create realistic and dynamic virtual worlds where the environment can change in real time based on user actions.

This can make VR/AR experiences more immersive and engaging, opening up new possibilities for gaming, training simulations, and virtual tourism.

Enhanced interactivity and realism in virtual simulations

As generative models become more sophisticated, we can expect an increase in the realism and interactivity of virtual simulations.

These models can generate realistic characters that interact with users in more complex and natural ways. They can also create diverse and unpredictable scenarios, making simulations more challenging and engaging.

4. Ethical considerations and regulation

Addressing biases and ethical concerns in Generative AI

As we delegate more decisions and tasks to AI, it's crucial to address the potential biases and ethical concerns that can arise. Generative models learn from data, so if that data is biased, the outputs can be biased too.

The future will likely see more research and development to address these biases and create fair and respectful models for all individuals and cultures.

Developing guidelines and regulations for responsible use

As generative AI becomes more pervasive, there will be an increasing need for guidelines and regulations to ensure its responsible use. This includes dealing with issues like deepfakes, intellectual property rights, and the potential for misuse in generating misleading or harmful content.

Organizations and governments will likely collaborate to establish these regulations, ensuring that the benefits of generative AI are realized while minimizing potential harms.

Case studies: Successful implementations of generative AI frameworks

To appreciate the vast potential of generative AI, let's delve into specific examples of successful implementation of generative AI in business.

Case Study 1: Facebook AI and translation services

Facebook AI uses generative models to power its automatic translation services. With billions of users worldwide, Facebook needs a robust translation service to ensure its global user base can communicate effectively.

Their AI model is trained on a vast corpus of multilingual text data. Given an input sentence in one language, the model can generate a corresponding sentence in a different language. This powerful translation service showcases how generative AI can break down language barriers and facilitate global communication.

Case Study 2: Autodesk and Dreamcatcher (Revolutionizing design)

Autodesk, the software company behind products like AutoCAD, has used generative AI to create a design technology called Dreamcatcher. This system takes design goals and constraints as inputs and generates designs that meet these criteria.

Designers can input their requirements, including material types, manufacturing methods, and performance criteria, and the system will generate a wide array of design solutions.

These generated designs often include innovative solutions that a human designer might not consider. This case study illustrates how generative AI can significantly enhance creativity and efficiency in design processes.

Case Study 3: NVIDIA and GANs (Generating photorealistic images)

NVIDIA has been a frontrunner in generating photorealistic images using Generative Adversarial Networks (GANs). Their model, StyleGAN, powers ThisPersonDoesNotExist.com, a website that generates images of people who don't exist.

StyleGAN consists of two neural networks: a generator network, which generates images, and a discriminator network, which tries to distinguish between real and generated images.

The competition between these networks results in the generation of incredibly realistic images. This showcases how generative AI can create new content that's virtually indistinguishable from the real thing.

Case Study 4: Jukin Media (Automating content creation)

Jukin Media, a global entertainment company specializing in user-generated content (UGC), harnessed OpenAI's GPT-3 for automated content creation. Their vast library of video content required metadata for efficient searching and categorization. However, manual metadata creation was time-consuming and costly.

To tackle this, Jukin implemented a GPT-3-based system to generate video descriptions and keywords automatically. The system analyzes video content, creates relevant metadata, and even suggests potential use cases for different platforms.

The result was a significant reduction in time and resource expenditure and an improved ability to repurpose content for various digital projects. This case study exemplifies how generative AI can improve content management efficiency and scale up operations.

Case Study 5: DeepArt and Prisma (Digital art)

Generative AI has also made significant inroads in the art world. DeepArt and Prisma, two digital art platforms, utilize a type of generative AI called neural style transfer to transform user-uploaded images into unique works of art.

Neural style transfer involves taking the style of one image (typically an artwork) and applying it to another image, creating a new, stylistically transformed image.

Users upload their photos and select a style, and the AI does the rest, generating a unique and personalized piece of art. Both platforms showcase the transformative potential of generative AI in creative digital projects.

It's time to embrace the future with generative AI

Generative AI is a powerful and transformative technology with vast applications across industries, from personalized content to virtual experiences.

Rapidops is a leading AI solutions provider, offering expertise in developing bespoke generative AI models to meet specific business needs.

Embracing generative AI with Rapidops can propel digital projects into the future and unleash innovation, creativity, and efficiency. Contact Rapidops to bring your AI vision to life and stay ahead in this complex and promising field.

Frequently Asked Questions (FAQs)

These examples demonstrate the wide range of applications for Generative AI, and the field continues to advance rapidly, leading to even more innovative uses in various industries.

What does generative AI do?

How to build a generative AI solution?

What are some examples of generative AI?

What is the purpose of generative AI?

What are the prerequisites for implementing a generative AI framework?

Saptarshi Das

Content Editor

9+ years of expertise in content marketing, SEO, and SERP research. Creates informative, engaging content to achieve marketing goals. Empathetic approach and deep understanding of target audience needs. Expert in SEO optimization for maximum visibility. Your ideal content marketing strategist.

