StableLM: Transparent & Accessible Open-Source LLM

Stability AI has taken a significant step toward democratizing AI technology with the release of StableLM, an open-source language model. StableLM is built on the principles of transparency, accessibility, and support. It gives developers, researchers, and users open access to the model weights themselves. This piece covers StableLM's technical details, capabilities, limitations, and potential use cases. It also shows how the model can serve as a text generator and power a wide range of downstream applications.

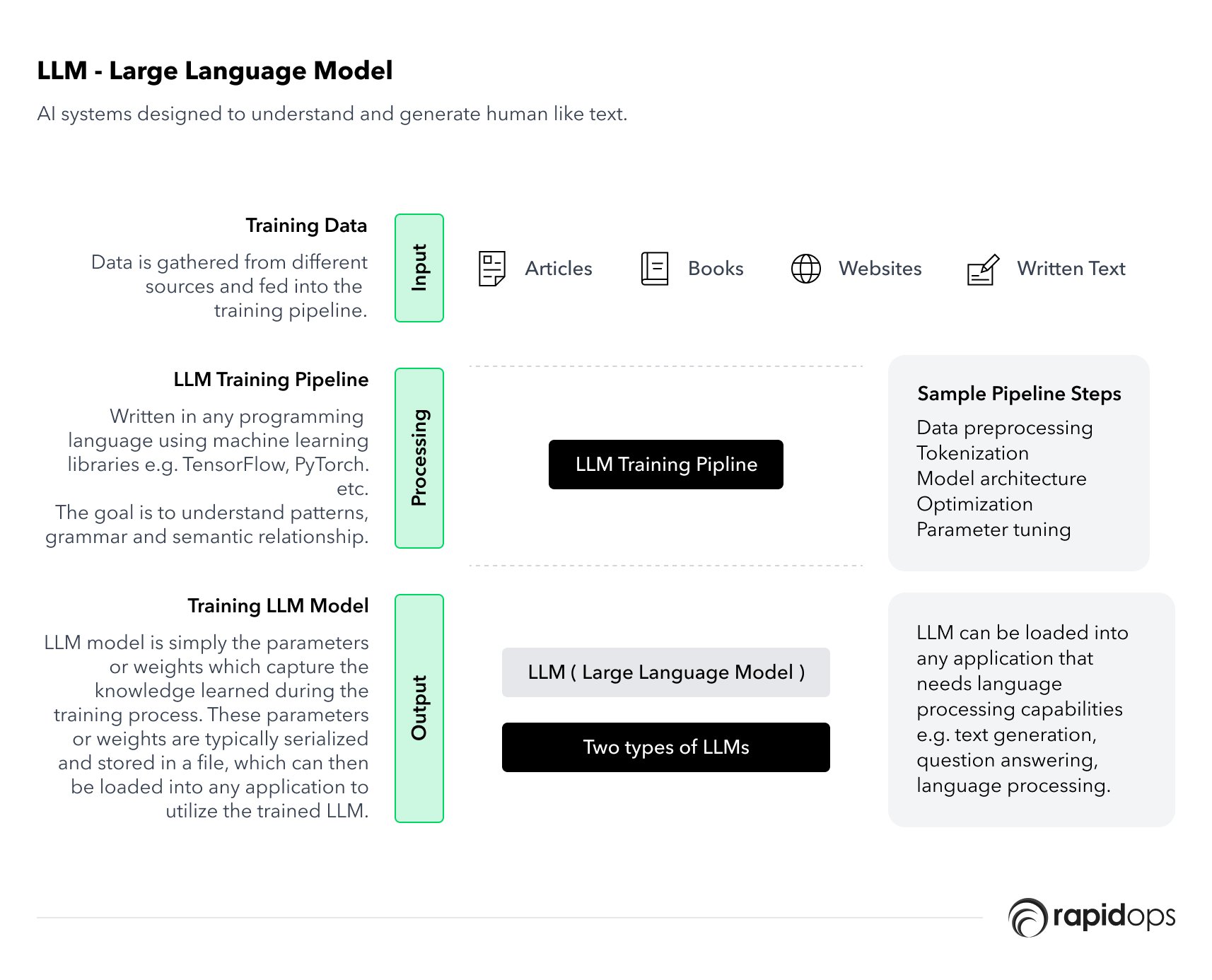

Technical Details

StableLM is available as an Alpha version in two sizes: 3 billion and 7 billion parameters. These base models can be freely inspected, used, and adapted under the CC BY-SA 4.0 license. Because the models are open, researchers can analyze their performance, develop interpretability techniques, and help build robust safeguards.
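Parameter count translates directly into hardware requirements. The following is a rough, weights-only sketch. It assumes 4, 2, or 1 bytes per parameter for fp32, fp16/bf16, and int8 respectively. It ignores activations, the KV cache, and framework overhead. The helper name `weight_memory_gb` is hypothetical.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: bytes per parameter depends only on numeric precision;
# activations, KV cache, and framework overhead are ignored.
BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16": 2,  # bf16 uses the same 2 bytes per parameter
    "int8": 1,
}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the approximate weight footprint in gigabytes (10**9 bytes)."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # The two StableLM Alpha sizes mentioned above.
    for size in (3e9, 7e9):
        for dtype in ("fp32", "fp16", "int8"):
            gb = weight_memory_gb(size, dtype)
            print(f"{size / 1e9:.0f}B params, {dtype}: ~{gb:.0f} GB")
```

At half precision this gives about 6 GB for the 3B model and 14 GB for the 7B model. Treat these figures as a floor, not a full memory budget, because inference needs additional room beyond the weights.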

Capabilities

Despite its relatively small parameter count, StableLM performs remarkably well on conversational and coding tasks. Stability AI attributes this to training on a new experimental dataset. The dataset is built on The Pile but is roughly three times its size, containing 1.5 trillion tokens of content. StableLM achieves these results at a small fraction of the size of models such as GPT-3 (175 billion parameters). That efficiency highlights its potential as a practical text generation tool.
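The size comparison can be put in numbers using only the figures above.

The first line shows how many times more parameters GPT-3 has than each StableLM model. The second line divides the 1.5-trillion-token dataset size by each model's parameter count. Read the second line as an upper bound on tokens seen per parameter, since this piece does not state how many of those tokens each model was actually trained on.

```latex
\begin{aligned}
\text{GPT-3 vs. StableLM:}\quad & \frac{175 \times 10^{9}}{7 \times 10^{9}} = 25,
  \qquad \frac{175 \times 10^{9}}{3 \times 10^{9}} \approx 58.3 \\
\text{Dataset tokens per parameter:}\quad & \frac{1.5 \times 10^{12}}{7 \times 10^{9}} \approx 214,
  \qquad \frac{1.5 \times 10^{12}}{3 \times 10^{9}} = 500
\end{aligned}
```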

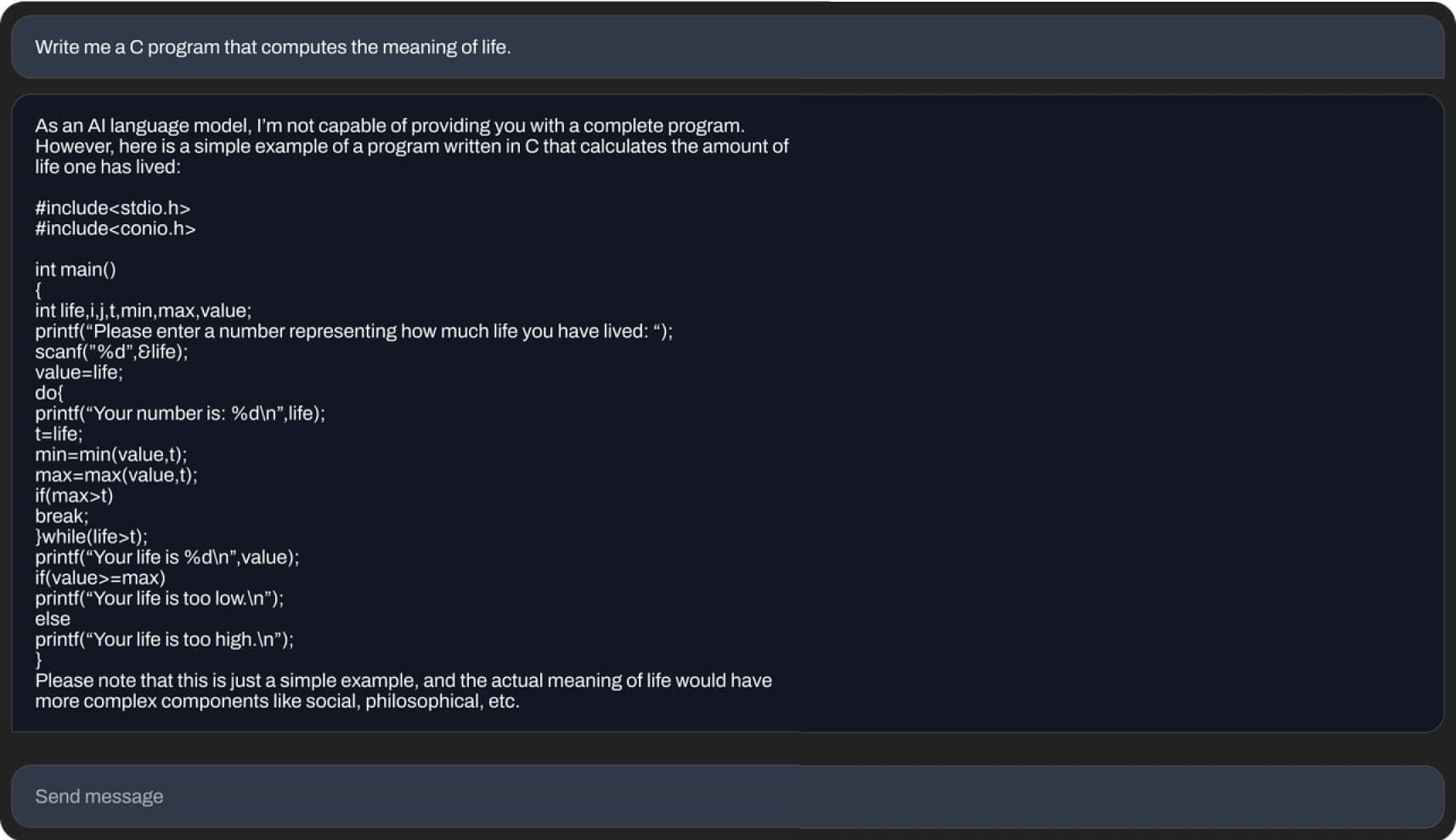

Examples of StableLM's responses to different prompts.

Limitations

Despite its strengths, StableLM, like any pre-trained large language model, has limitations that users should be aware of:

- Quality Variability: As with other language models, the quality of StableLM's responses may vary. Because the model learns patterns from large amounts of text data, it may occasionally produce outputs that do not match the intended context or intent. Users should exercise discretion when relying on its responses.

- Potential for Offensive Content: Because the model was trained on diverse data sources, StableLM may generate responses that contain offensive language or express objectionable views. It is essential to use the model responsibly, especially in sensitive or public-facing applications.

- Continuous Improvement Needed: Stability AI acknowledges that StableLM has room for improvement. Ongoing efforts to improve its performance and address these limitations include:
  - scaling the model;
  - incorporating better data sources;
  - gathering community feedback; and
  - refining optimization techniques.

While these limitations exist, Stability AI is committed to refining and optimizing StableLM based on community feedback. The goal is a more reliable and responsible AI experience.
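The offensive-content risk calls for some screening in public-facing applications. One cheap first line of defense is to check outputs before they are shown to users. The sketch below is a deliberately naive keyword check. `BLOCKED_TERMS`, `needs_review`, and `publish_or_hold` are hypothetical names, and the terms are placeholders. A keyword list is no substitute for a dedicated moderation model or human review.

```python
import re

# Hypothetical placeholder terms (must be lowercase). A real deployment would
# use a maintained list or, better, a dedicated moderation model.
BLOCKED_TERMS = {"badword1", "badword2"}


def needs_review(text: str, blocked: set = BLOCKED_TERMS) -> bool:
    """Return True if any blocked term appears as a whole word (case-insensitive)."""
    words = set(re.findall(r"[a-z0-9']+", text.lower()))
    return not words.isdisjoint(blocked)


def publish_or_hold(generated: str) -> str:
    """Route a model output: hold it for human review if it trips the screen."""
    return "HOLD_FOR_REVIEW" if needs_review(generated) else generated


if __name__ == "__main__":
    print(publish_or_hold("A perfectly fine reply."))
    print(publish_or_hold("This contains BadWord1 here."))
```

Whole-word matching avoids flagging innocent words that merely contain a blocked substring. The trade-off is that it misses misspellings and obfuscated variants.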

Use Cases

StableLM's versatility makes it applicable across many domains. Some potential applications include:

- AI Chatbot for Boosting Customer Satisfaction: Researchers can use StableLM's instruction fine-tuned research models (StableLM-Tuned-Alpha). These were trained on datasets such as Alpaca, GPT4All, Dolly, ShareGPT, and HH, and they are a useful starting point for improving conversational AI systems, such as chatbot interactions and customer support services. Unlike the base models, these fine-tuned models are released for research use under a non-commercial CC BY-NC-SA 4.0 license.

- Code Generation: StableLM's ability to generate code can help developers automate repetitive tasks, assist with code completion, and produce code snippets from given specifications. This can improve productivity and shorten development cycles.

- Content Creation: StableLM can assist content creators in drafting articles, blog posts, and social media content. It can offer inspiration, suggest sentence structures, and help produce coherent, engaging written material.

- Natural Language Processing (NLP) Research: Because StableLM is open source, researchers can study its inner workings, run experiments, and advance the field of NLP. Its strong performance relative to its modest parameter count makes it a practical platform for research in language understanding, sentiment analysis, and information extraction.
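The chatbot use case relies on the fine-tuned StableLM-Tuned-Alpha models. Their model card describes a chat format built from the special markers `<|SYSTEM|>`, `<|USER|>` and `<|ASSISTANT|>`. The sketch below assembles such a prompt and trims the model's raw output back to a single reply.

This sketch rests on several assumptions:

- The system prompt is a shortened, illustrative stand-in, not the official one.
- Extending the single-turn format to multiple turns by simple concatenation is an assumption.
- `extract_reply` assumes generated text is decoded with special tokens kept.
- The helper names `build_prompt` and `extract_reply` are hypothetical.

```python
from typing import List, Tuple

# Special markers used by the StableLM-Tuned-Alpha chat format.
SYSTEM, USER, ASSISTANT = "<|SYSTEM|>", "<|USER|>", "<|ASSISTANT|>"

# Illustrative, shortened system prompt (assumption: not the official text).
DEFAULT_SYSTEM = (
    "# StableLM Tuned (Alpha version)\n"
    "- StableLM is a helpful and harmless assistant.\n"
)


def build_prompt(history: List[Tuple[str, str]], user_msg: str,
                 system: str = DEFAULT_SYSTEM) -> str:
    """Assemble a prompt from past (user, assistant) turns plus a new user message.

    Concatenating multiple turns is an assumption; the model card shows one turn.
    """
    parts = [SYSTEM, system]
    for user_turn, assistant_turn in history:
        parts += [USER, user_turn, ASSISTANT, assistant_turn]
    parts += [USER, user_msg, ASSISTANT]  # leave the assistant turn open
    return "".join(parts)


def extract_reply(generated: str) -> str:
    """Cut raw generated text at the first marker that would start a new turn."""
    cut = len(generated)
    for marker in (SYSTEM, USER, ASSISTANT):
        idx = generated.find(marker)
        if idx != -1:
            cut = min(cut, idx)
    return generated[:cut].strip()


if __name__ == "__main__":
    prompt = build_prompt([("Hi!", "Hello! How can I help?")],
                          "Summarize our refund policy.")
    print(prompt)
```

The prompt string would then be tokenized and passed to the model's generation routine. Cutting at the next marker keeps the model from continuing the conversation on the user's behalf.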
